User:Farhan

Edge Computing Architecture and Layers

Introduction

Edge computing is an emerging paradigm that moves computation closer to where data is generated in order to reduce latency and bandwidth usage. Conventional cloud-based systems process data in centralized data centers. Edge computing instead distributes computational capacity across devices and local nodes at the network edge. This architectural shift is essential for real-time applications with strict processing-speed requirements, such as autonomous vehicles, health monitoring, and industrial automation.

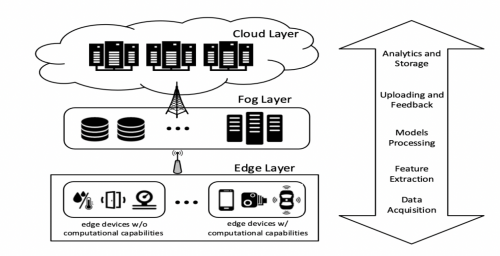

The edge computing infrastructure is composed of multiple layers, each with its own role. At the lowest level, edge devices such as sensors and actuators gather data and perform initial processing. Above them, fog computing forms an intermediate layer between edge nodes and the cloud. It aggregates data from multiple edge nodes, pre-processes it, and forwards it to the cloud. At the top, the cloud handles heavy computation and long-term data storage.

The convergence of IoT, mobile computing, digital twins, and cloud infrastructure forms a robust edge computing architecture. This chapter presents the basic building blocks and layers of edge computing: IoT, mobile computing, and digital twins; cloud infrastructure; fog computing; and the edge-cloud continuum.

1.1 IoT, Mobile, and Digital Twins

Internet of Things (IoT)

The Internet of Things (IoT) is a network of physical objects that communicate over the internet. Examples include everyday consumer devices such as smart thermostats, as well as industrial equipment, wearable health trackers, and components of urban infrastructure. IoT enables greater connectivity and automation through continuous data generation and analysis.

Key Features of IoT:

- Connectivity: Utilizes protocols such as Wi-Fi, Bluetooth, Zigbee, and LoRaWAN to transmit data

- Data Processing: Uses both cloud and edge computing systems to analyze data collected from sensors

- Automation: Employs AI-driven or rule-based systems for autonomous decision-making

- Examples: Smart homes, healthcare wearables, industrial IoT (IIoT), smart cities, and connected vehicles
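Rule-based automation can be illustrated with a minimal controller. The sketch below assumes a hypothetical smart thermostat that switches heating on or off from temperature readings. The class name, the 21 °C target, and the hysteresis band used to prevent rapid toggling are all illustrative assumptions, not details from the text.

```python
from dataclasses import dataclass


@dataclass
class ThermostatRule:
    """Hypothetical rule-based controller for a smart thermostat."""
    target_c: float      # desired temperature in degrees Celsius (assumed)
    hysteresis_c: float  # dead band that prevents rapid on/off switching (assumed)

    def decide(self, reading_c: float, heating_on: bool) -> bool:
        """Return the new heater state for one sensor reading."""
        if reading_c < self.target_c - self.hysteresis_c:
            return True        # too cold: switch heating on
        if reading_c > self.target_c + self.hysteresis_c:
            return False       # too warm: switch heating off
        return heating_on      # inside the dead band: keep the current state


rule = ThermostatRule(target_c=21.0, hysteresis_c=0.5)
states = []
heating = False
for reading in [20.0, 20.8, 21.6, 21.2]:
    heating = rule.decide(reading, heating)
    states.append(heating)
```

Because the decision depends only on the latest reading and the current state, a rule like this can run directly on the device without a round trip to the cloud.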

Mobile Cloud Computing (MCC)

Mobile Cloud Computing (MCC) integrates mobile devices and cloud computing to improve processing capability and storage. The paradigm enables computation-intensive processing to be offloaded from mobile devices to cloud servers, thereby conserving battery life while improving performance.
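The offloading trade-off can be made concrete with a simplified energy model, which is an assumption here and not a model given in the text. Computing locally costs compute power multiplied by execution time. Offloading costs transmit power for the upload plus idle power while the cloud runs the task. All class names, parameters, and numbers below are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class Task:
    cycles: float     # CPU cycles the task needs
    data_bits: float  # input data that must be uploaded if the task is offloaded


@dataclass
class Device:
    cpu_hz: float        # local CPU speed (cycles per second)
    p_compute_w: float   # power while computing locally (watts)
    p_transmit_w: float  # power while transmitting (watts)
    p_idle_w: float      # power while waiting for the cloud (watts)
    uplink_bps: float    # uplink bandwidth (bits per second)


def local_energy_j(task: Task, dev: Device) -> float:
    """Energy to run the task on the mobile device."""
    return dev.p_compute_w * task.cycles / dev.cpu_hz


def offload_energy_j(task: Task, dev: Device, cloud_hz: float) -> float:
    """Energy the device spends uploading the input and waiting for the result."""
    upload_s = task.data_bits / dev.uplink_bps
    remote_s = task.cycles / cloud_hz
    return dev.p_transmit_w * upload_s + dev.p_idle_w * remote_s


def should_offload(task: Task, dev: Device, cloud_hz: float) -> bool:
    """Offload only when it saves battery energy under this simple model."""
    return offload_energy_j(task, dev, cloud_hz) < local_energy_j(task, dev)


CLOUD_HZ = 1e10
phone = Device(cpu_hz=1e9, p_compute_w=2.0, p_transmit_w=1.0,
               p_idle_w=0.1, uplink_bps=10e6)
heavy = Task(cycles=5e9, data_bits=1e6)  # compute-heavy, little input data
light = Task(cycles=1e8, data_bits=1e9)  # light compute, large input data
```

With these assumed numbers, the compute-heavy task is worth offloading, but the data-heavy one is not. Uploading its input would cost far more energy than computing it locally.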

Benefits of MCC:

- Extended Battery Life: Offloading processing tasks to the cloud reduces local energy consumption

- Higher Processing Power: Supports complex applications like AI and machine learning

- Scalability: Dynamically scales to accommodate fluctuating user demand

- Real-Time Access: Facilitates access to cloud-hosted applications from anywhere

- Applications: Mobile AI assistants (like Google Assistant), cloud gaming, and AR/VR applications

Digital Twins

Digital twins are virtual replicas of physical assets that are continuously updated with real-time data in order to simulate, predict, and optimize performance. They are increasingly used in manufacturing, healthcare, and smart city initiatives, where they support predictive maintenance and system optimization.

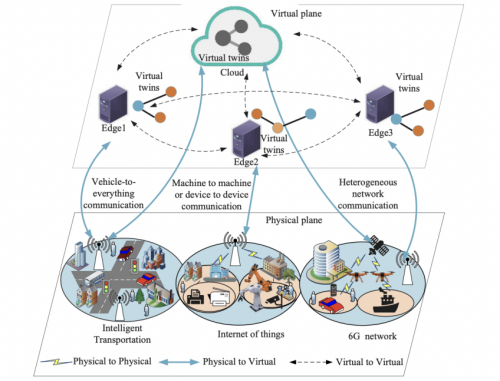

Digital twins connect physical devices with virtual environments. In edge computing, digital twins can run on edge nodes and analyze information locally, which reduces the need to send large volumes of data to the cloud. This arrangement is especially valuable in applications that require real-time analytics, such as industrial automation and medical monitoring.

Diagram 1: Digital Twin Edge Network Architecture

This diagram illustrates a layered architecture where virtual twins communicate with physical IoT devices through edge nodes. It showcases intelligent transportation systems, 6G networks, and IoT applications as part of a hierarchical edge-cloud framework.
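An edge-hosted digital twin can be sketched as an object that mirrors sensor readings, makes a local prediction, and forwards only a compact summary to the cloud. The sketch below assumes a hypothetical motor with a temperature sensor. The class name, the five-reading window, and the rule "flag maintenance when the recent average exceeds 90% of the limit" are illustrative assumptions.

```python
from collections import deque
from statistics import mean


class MotorTwin:
    """Hypothetical digital twin of a motor, hosted on an edge node."""

    def __init__(self, asset_id: str, max_temp_c: float, window: int = 5):
        self.asset_id = asset_id
        self.max_temp_c = max_temp_c          # assumed safe operating limit
        self.readings = deque(maxlen=window)  # keeps only the most recent readings

    def update(self, temp_c: float) -> None:
        """Mirror the physical asset with its latest sensor reading."""
        self.readings.append(temp_c)

    def needs_maintenance(self) -> bool:
        """Predict trouble when the recent average nears the limit (assumed rule)."""
        return bool(self.readings) and mean(self.readings) > 0.9 * self.max_temp_c

    def summary(self) -> dict:
        """Compact state sent to the cloud instead of every raw reading."""
        return {
            "asset": self.asset_id,
            "avg_temp_c": round(mean(self.readings), 2) if self.readings else None,
            "maintenance": self.needs_maintenance(),
        }


twin = MotorTwin("motor-7", max_temp_c=80.0)
for t in [60.0, 65.0, 70.0, 75.0, 78.0]:
    twin.update(t)
early_warning = twin.needs_maintenance()  # average 69.6 C, below the 72 C threshold
for t in [79.0, 80.0]:
    twin.update(t)
late_warning = twin.needs_maintenance()   # average of the last five readings is 76.4 C
report = twin.summary()
```

Only `report` would need to leave the edge node. The raw readings stay local, which is the bandwidth saving the text describes.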

1.2 Cloud

Cloud computing is a model for enabling ubiquitous, convenient, on-demand network access to a shared pool of configurable computing resources, such as networks, servers, storage, applications, and services, that can be rapidly provisioned and released with minimal management effort. It gives users access to high-capacity computational resources without having to own or maintain physical infrastructure.

Characteristics of Cloud Computing:

- On-Demand Self-Service: Users can independently access computing resources as needed without requiring human intervention from the service provider

- Broad Network Access: Cloud services are accessible over the network and support heterogeneous client platforms such as mobile phones, laptops, and workstations

- Resource Pooling: The provider's computing resources are pooled to serve multiple consumers using a multi-tenant model

- Rapid Elasticity: Capabilities can be elastically provisioned and released to scale rapidly according to demand

- Measured Service: Resource usage is monitored, controlled, and reported, providing transparency for both the provider and the consumer
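Rapid elasticity is often implemented as a proportional scaling rule. The sketch below follows the same idea as the rule documented for Kubernetes' Horizontal Pod Autoscaler: multiply the current instance count by the ratio of observed to target utilization and round up. The function name, the default bounds, and the use of CPU percentages are assumptions for illustration.

```python
import math


def desired_instances(current: int, cpu_util_pct: float, target_util_pct: float,
                      min_n: int = 1, max_n: int = 20) -> int:
    """Scale the instance count in proportion to observed load.

    desired = ceil(current * observed / target), clamped to [min_n, max_n].
    """
    if current <= 0 or target_util_pct <= 0:
        raise ValueError("current and target_util_pct must be positive")
    desired = math.ceil(current * cpu_util_pct / target_util_pct)
    return max(min_n, min(max_n, desired))
```

For example, 4 instances running at 90% CPU against a 60% target scale out to 6. Six instances at 20% scale in to 2. The clamp keeps a traffic spike from provisioning unbounded resources, which also keeps the measured bill predictable.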

Cloud Service Models:

- Infrastructure as a Service (IaaS): Offers fundamental computing resources such as virtual machines, storage, and networks (e.g., AWS EC2, Google Compute Engine)

- Platform as a Service (PaaS): Provides environments to develop, test, and deploy applications. It abstracts the underlying infrastructure so that developers can focus on application development (e.g., AWS Elastic Beanstalk, Heroku)

- Software as a Service (SaaS): Delivers applications over the internet on a subscription basis, eliminating the need to install or maintain software (e.g., Microsoft 365, Salesforce)

Deployment Models:

- Public Cloud: Operated by third-party providers that offer services over the public internet. Suitable for workloads with less stringent security requirements that need high scalability

- Private Cloud: Used exclusively by a single organization, providing greater control and security. Hosted either on-premises or by a third party

- Hybrid Cloud: Combines public and private clouds, enabling data and applications to be shared between them

- Multi-Cloud: Uses services from multiple cloud providers to avoid vendor lock-in and increase reliability

Challenges in Cloud Computing:

- Latency Issues: Cloud-based processing can introduce delays, especially in real-time applications

- Data Privacy Concerns: Transmitting sensitive data to the cloud raises potential security risks

- Bandwidth Costs: Frequent data transfer between devices and the cloud can incur significant costs

- Downtime and Outages: Service disruptions in cloud infrastructure can affect availability
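The latency and bandwidth challenges can be quantified with back-of-the-envelope arithmetic. The sketch below assumes that signals travel through optical fiber at roughly 2 × 10^8 m/s. It also ignores queuing, processing, and routing delays, so its latency figures are only lower bounds. The distances and sensor-fleet parameters are hypothetical.

```python
FIBER_SPEED_M_PER_S = 2.0e8  # roughly two-thirds of the speed of light (assumed)


def round_trip_propagation_ms(distance_km: float) -> float:
    """Lower bound on round-trip time from propagation delay alone."""
    one_way_s = distance_km * 1000 / FIBER_SPEED_M_PER_S
    return 2 * one_way_s * 1000


def daily_volume_gb(bytes_per_sample: int, samples_per_s: float, devices: int) -> float:
    """Raw data volume a sensor fleet produces per day, in gigabytes."""
    return bytes_per_sample * samples_per_s * devices * 86_400 / 1e9
```

Under these assumptions, a data center 1,500 km away adds at least about 15 ms of round-trip delay. An edge node 10 km away adds about 0.1 ms. Separately, 1,000 sensors each sending 100-byte samples ten times per second generate about 86.4 GB per day if every sample is shipped to the cloud unfiltered.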

Cloud-Edge Integration:

Edge computing extends cloud computing: it processes data closer to the source while relying on the cloud for in-depth analytics and storage. In autonomous vehicles, for example, time-critical information (e.g., obstacle detection) is processed locally, while data for training machine learning models is sent to the cloud.
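The split between local decisions and cloud-bound training data can be sketched as a vehicle-side handler with two paths. The urgent path makes a braking decision immediately, using the standard stopping-distance formula v²/(2a). The non-urgent path queues samples for later upload. The class names, the 6 m/s² deceleration, and the idea of uploading when parked are illustrative assumptions, and the perception model producing each `Detection` is not shown.

```python
from dataclasses import dataclass, field


@dataclass
class Detection:
    obstacle_distance_m: float  # output of an on-board perception model (assumed)
    speed_m_s: float            # current vehicle speed


@dataclass
class VehicleEdgeNode:
    decel_m_s2: float = 6.0                           # assumed braking deceleration
    upload_queue: list = field(default_factory=list)  # samples kept for cloud training

    def handle(self, det: Detection) -> str:
        # Time-critical path: decide locally, never wait for the cloud.
        stopping_m = det.speed_m_s ** 2 / (2 * self.decel_m_s2)
        action = "brake" if det.obstacle_distance_m <= stopping_m else "continue"
        # Non-urgent path: keep the sample for later model training in the cloud.
        self.upload_queue.append((det, action))
        return action

    def drain_for_cloud(self) -> list:
        """Hand over buffered samples, e.g. when the vehicle is parked on Wi-Fi."""
        batch, self.upload_queue = self.upload_queue, []
        return batch


node = VehicleEdgeNode()
first = node.handle(Detection(obstacle_distance_m=30.0, speed_m_s=20.0))   # needs ~33.3 m to stop
second = node.handle(Detection(obstacle_distance_m=50.0, speed_m_s=20.0))
batch = node.drain_for_cloud()
```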

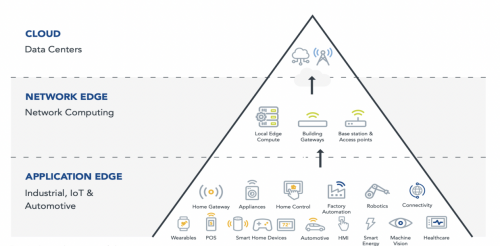

Diagram 2: Edge Computing Architecture

This diagram represents the layered structure of edge computing, highlighting how the cloud layer interacts with network and application edges. The cloud primarily handles heavy computation and data storage, while edge devices focus on local data processing.

1.3 Edge and Fog

Edge Computing:

Edge computing processes data at or near the place where it is generated, avoiding the latency of transmitting it to centralized cloud servers. It is critical for applications that need real-time data processing and response, including autonomous vehicles, industrial automation, and medical monitoring.

- Reduced Latency: Because data is processed locally, edge computing avoids the delay of transmitting it over long distances.

- Improved Data Privacy: Personal data is processed closer to the source, reducing the risk of exposure.

- Bandwidth Efficiency: Only processed data or key information is transmitted to the cloud, which minimizes data traffic.

- High Reliability: Edge devices can continue to operate independently even when network connectivity with the cloud is lost.
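The reliability property is commonly achieved with a store-and-forward buffer. The device keeps working and queues outgoing messages while the cloud link is down, then delivers them in order once the link returns. The sketch below is a hypothetical helper, not a specific library's API. The `fake_send` function stands in for a real uplink, and the capacity limit (oldest messages dropped first) is an assumed policy.

```python
from collections import deque
from typing import Callable


class StoreAndForward:
    """Buffer outgoing messages while the cloud link is down (hypothetical helper)."""

    def __init__(self, send: Callable[[dict], bool], capacity: int = 1000):
        self.send = send                       # returns True if the cloud accepted it
        self.pending = deque(maxlen=capacity)  # oldest messages are dropped when full

    def publish(self, message: dict) -> None:
        self.pending.append(message)
        self.flush()

    def flush(self) -> int:
        """Deliver buffered messages in order; stop at the first failure."""
        sent = 0
        while self.pending:
            if not self.send(self.pending[0]):
                break                          # link still down: keep the rest
            self.pending.popleft()
            sent += 1
        return sent


link = {"up": False}
delivered = []


def fake_send(msg: dict) -> bool:
    """Stand-in for a real uplink; succeeds only while the link is up."""
    if link["up"]:
        delivered.append(msg)
        return True
    return False


node = StoreAndForward(fake_send)
node.publish({"temp_c": 21.5})  # link down: buffered locally
node.publish({"temp_c": 21.7})
link["up"] = True
node.flush()                    # link restored: both messages delivered in order
```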

Applications of Edge Computing:

- Autonomous Vehicles: Real-time data processing for navigation and obstacle avoidance

- Smart Cities: Adaptive lighting networks and traffic monitoring

- Healthcare: Remote monitoring equipment that analyzes patient data in real time

- Industrial IoT: Predictive maintenance through sensor data
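Predictive maintenance at the edge often starts with simple anomaly detection on a sensor stream. The sketch below substitutes a rolling z-score for whatever model a real deployment would use. It flags any reading that deviates from the trailing window's mean by more than `threshold` standard deviations. The window size, threshold, and vibration values are hypothetical.

```python
from collections import deque
from statistics import mean, pstdev


def detect_anomalies(samples, window=5, threshold=3.0):
    """Return indices of readings far outside the recent trailing window."""
    history = deque(maxlen=window)
    flagged = []
    for i, x in enumerate(samples):
        if len(history) == window:
            mu = mean(history)
            sigma = pstdev(history)
            if sigma > 0 and abs(x - mu) / sigma > threshold:
                flagged.append(i)
        history.append(x)
    return flagged


vibration = [1.0, 1.1, 0.9, 1.0, 1.1, 1.0, 0.9, 3.5, 1.0, 1.1]
flagged = detect_anomalies(vibration)
```

Only the flagged index (the 3.5 spike at position 7) would need to be reported upstream. The normal readings can be summarized or discarded on the device.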

Fog Computing:

Fog computing extends edge computing by creating an intermediate layer between the cloud and edge devices. It allows data to be collected and processed, and decisions to be made, close to the data source but not on the device itself.

Features of Fog Computing:

- Intermediate Processing: Takes over some processing tasks from edge devices before data reaches the cloud

- Data Aggregation: Combines data from multiple sources to eliminate redundancy

- Enhanced Scalability: Supports massive IoT networks by distributing computation tasks

- Real-Time Analytics: Analyzes data as it arrives, without depending on distant servers
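Fog-level aggregation can be sketched as a function that removes duplicate deliveries and then condenses readings into per-zone summaries before anything is sent upstream. The record fields (`sensor`, `zone`, `ts`, `value`) and the choice of `(sensor, ts)` as the deduplication key are assumptions for illustration.

```python
from collections import defaultdict
from statistics import mean


def aggregate(readings):
    """Deduplicate (sensor, timestamp) pairs, then summarize values per zone."""
    seen = set()
    by_zone = defaultdict(list)
    for r in readings:
        key = (r["sensor"], r["ts"])
        if key in seen:
            continue  # duplicate delivery from an edge device
        seen.add(key)
        by_zone[r["zone"]].append(r["value"])
    return {zone: {"count": len(vals), "avg": mean(vals)}
            for zone, vals in by_zone.items()}


readings = [
    {"sensor": "s1", "zone": "A", "ts": 0, "value": 20.0},
    {"sensor": "s1", "zone": "A", "ts": 0, "value": 20.0},  # retransmitted duplicate
    {"sensor": "s2", "zone": "A", "ts": 0, "value": 22.0},
    {"sensor": "s3", "zone": "B", "ts": 0, "value": 30.0},
]
summary = aggregate(readings)
```

Four incoming records become two zone summaries. This reduction is why the fog layer can absorb large device counts without forwarding every reading to the cloud.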

Comparison: Edge vs. Fog:

- Processing Location: Edge directly on devices; Fog on intermediate nodes

- Latency: Edge has ultra-low latency; Fog has low but comparatively higher latency

- Scalability: Fog is more scalable because it aggregates data from many devices

- Data Aggregation: Edge handles data from individual devices, while Fog consolidates data from multiple sources.

Diagram 3: Edge-Fog-Cloud Network Architecture

The diagram shows the interplay between cloud, fog, and edge layers, emphasizing the data flow from localized edge processing through fog nodes to the centralized cloud for deeper analytics.
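The edge-to-fog-to-cloud data flow can be sketched as three small functions, one per tier. The edge tier drops implausible readings. The fog tier merges batches from several devices into one summary. The cloud tier keeps summaries for long-term analysis. The valid range, the `-999.0` error code, and all names are hypothetical.

```python
from statistics import mean


def edge_filter(raw, low=0.0, high=100.0):
    """Edge tier: drop physically implausible readings (assumed valid range)."""
    return [x for x in raw if low <= x <= high]


def fog_aggregate(batches):
    """Fog tier: merge batches from several edge devices into one summary."""
    values = [x for batch in batches for x in batch]
    return {"n": len(values), "mean": mean(values), "max": max(values)}


class Cloud:
    """Cloud tier: store summaries for long-term storage and deeper analytics."""

    def __init__(self):
        self.history = []

    def ingest(self, summary):
        self.history.append(summary)

    def long_term_mean(self):
        return mean(s["mean"] for s in self.history)


device_a = edge_filter([21.0, 22.0, -999.0])  # -999.0 as a sensor error code (assumed)
device_b = edge_filter([23.0, 150.0, 24.0])   # 150.0 is out of range and dropped
cloud = Cloud()
cloud.ingest(fog_aggregate([device_a, device_b]))
```

Each tier passes on less data than it receives. Six raw readings shrink to one three-field summary by the time they reach the cloud.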

1.4 Edge-Cloud Continuum

The edge-cloud continuum is an adaptive, dynamic computing paradigm that balances workloads across edge, fog, and cloud resources. By integrating these environments, it allows applications to shift between local and remote processing depending on workload, latency constraints, and network conditions.

Core Functions:

- Dynamic Workload Distribution: Real-time tasks are processed at the edge, while less critical operations are pushed to the cloud

- Real-Time Data Processing: Edge devices manage time-sensitive data, like sensor readings, without sending it to remote servers

- Data Filtering and Aggregation: Fog nodes filter data before forwarding it to the cloud, minimizing data transfer

- Scalable and Elastic Resource Management: Allocates resources adaptively according to the computational demand

- Security and Privacy Management: Implements localized data encryption and access control

- Interoperability: Facilitates smooth interaction between edge, fog, and cloud systems through intelligent orchestration
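Dynamic workload distribution can be sketched as a placement function. It estimates each tier's completion time as network round trip plus compute time, then picks the most centralized reachable tier that still meets the task's deadline. This policy is one plausible reading of the text, not a prescribed algorithm. The tier latencies and capacities below are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class Tier:
    name: str
    rtt_ms: float          # current network round trip to this tier (assumed)
    gcycles_per_s: float   # compute capacity available to the task (assumed)
    reachable: bool = True


def place(task_gcycles: float, deadline_ms: float, tiers: list) -> str:
    """Pick the most centralized tier that can still meet the deadline.

    `tiers` is ordered from edge (nearest) to cloud (farthest).
    """
    for tier in reversed(tiers):
        if not tier.reachable:
            continue
        finish_ms = tier.rtt_ms + 1000 * task_gcycles / tier.gcycles_per_s
        if finish_ms <= deadline_ms:
            return tier.name
    return tiers[0].name  # nothing meets the deadline: best effort at the edge


tiers = [Tier("edge", 1, 2), Tier("fog", 10, 20), Tier("cloud", 80, 200)]
tiers_cloud_down = [Tier("edge", 1, 2), Tier("fog", 10, 20),
                    Tier("cloud", 80, 200, reachable=False)]
```

With these numbers, a tiny task with a 10 ms deadline stays at the edge. A 50-gigacycle batch job with a 5 s deadline goes to the cloud, and falls back to the fog when the cloud is unreachable. A production orchestrator would also weigh cost, energy, and current load.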

Applications:

- Smart Grids: Real-time monitoring and load balancing between local power sources and centralized control

- Smart Agriculture: Local analysis of environmental data, with broader data analytics performed in the cloud

- Healthcare Monitoring: Real-time patient monitoring at the edge, with historical analysis in the cloud

By blending edge and cloud processing, the continuum provides a flexible infrastructure that adapts to real-time requirements while maintaining efficient long-term data management.