Machine Learning at the Edge

4.1 Overview of ML at the Edge

Introduction

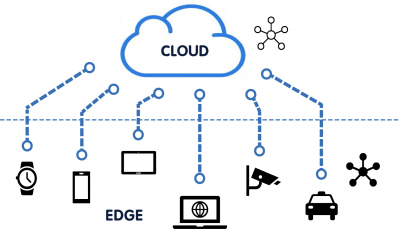

Machine Learning is a branch of Artificial Intelligence concerned with training algorithms to analyze sets of data, identify patterns, and draw conclusions, so that the gathered data can be used to produce results and carry out tasks. Machine learning is especially relevant to edge computing [7]. Given the rapid rise of the field, the deployment of machine learning algorithms is likely to play a crucial role in how edge computing systems function. The abundant sensor networks often involved in edge systems provide a means of gathering very large amounts of data. With the help of machine learning, such data can be put to effective use, and automation can be employed to improve efficiency. Running machine learning on the edge devices themselves can also ease the burden on cloud systems and the networks connected to them, especially if the model does not need large amounts of computational power, as is the case for certain Small Language Models (SLMs). However, the main challenges for machine learning at the edge lie in the devices, which may have limited computational power, and in the environment, which may change dynamically and be affected by network congestion, device outages, or other unexpected events. If these challenges can be overcome, edge systems could play a pivotal role in advancing machine learning and in using it to maximize efficiency.

Benefits of Machine Learning using Edge Computing

Data Volumes: Traditional cloud computing architecture may not be able to keep up with the massive volume of data that is often generated by IoT devices and sensors, which increases costs as well as pressure and congestion on the networks transmitting the data [5]. With edge computing architectures, machine learning can be deployed in a distributed manner, allowing ML models to be deployed and trained across many edge devices. Because edge devices share computational tasks, they are well suited to taking large datasets and splitting the computational load so that many devices can compute what a single device might not be able to handle [5]. Beyond splitting input data across edge devices, machine learning models themselves can be split across edge devices. This allows for the deployment of models that would otherwise be too large for a single edge device with limited memory. In such cases, edge nodes collaboratively pass data between them, and the global ML model is later updated based on the work of each smaller portion [5]. Additionally, the sheer amount of data that is processed by edge devices means more training data for machine learning models, which generally increases accuracy. Edge computing could therefore be an effective way to provide large amounts of relevant data for a variety of applications.

Lower Latency: For certain real-time applications, such as Virtual and Augmented Reality (VR/AR) or smart cars, the latency incurred by transmitting data to the cloud and processing it there may be too high for these applications to be efficient and safe. A key benefit of edge computing is the reduced latency that comes from placing devices closer to the users, and this applies to machine learning as well [5].

Enhanced Privacy: Processing data locally rather than in the cloud reduces the risk of data theft and enhances privacy. The data does not have to be sent to another party or travel over a network where it could be compromised. This is crucial given the large amounts of data needed for machine learning. In particular, when personal data is needed for a personal application, many users would prefer the privacy afforded by having it processed locally [5].

Challenges of Machine Learning Using Edge Computing

Potentially Low Computational Power: Many edge devices lack the computational power needed for deep learning applications, especially given the large amount of data and complex operations that may have to take place [1].

Energy Management: Given that edge devices may consist of sensors or other devices that are meant to have low energy consumption, the tasks associated with machine learning could quickly drain their power, even if they have sufficient computational capacity to run the workloads [6].

New Attack Surfaces: With more devices comes the increased potential for malicious hackers to steal data or compromise devices, despite the enhanced privacy. Encryption, access controls, and other methodologies must be employed to mitigate the potential for new attack surfaces to be exploited [6].

Applications for ML at the Edge

The utilization of data to make conjectures about what is going on in the environment and how to respond has a variety of use cases that can greatly benefit people, cities, and the environment. By leveraging and monitoring a constant stream of data and training machine learning models to detect or even respond to different events, there can be many practical applications for such systems. These would rely on the combination of edge devices and machine learning to better enhance experience for users and detect events of interest.

Self-Driving Cars: Imitation learning can be leveraged to better understand and emulate human driving. The low latency that can be provided by edge computing is especially useful for the quick decision making needed by these systems [7].

Smart Home Devices: Understanding user habits by leveraging the available data for them can make smart homes more convenient for users. The increased privacy that can come with edge computing, along with a few extra cybersecurity measures, can ensure the personal data that may be used for training is not compromised.

Environmental and Industrial Monitoring: Sensors deployed in environments and industrial settings could be trained to recognize when there are anomalies or undesirable behavior, thus ensuring a quick response and active information sending [7].

Smart Cities: As with the monitoring applications above, machine learning can be applied to the data collected by sensors in cities to help with crime and emergency detection, traffic management, or energy management [7].

4.2 ML Training at the Edge

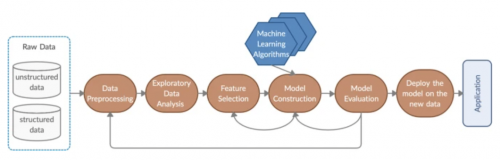

Machine Learning (ML) training at the edge is the process of developing, updating, or fine-tuning ML models directly on edge devices such as smartphones, IoT sensors, wearables, and other embedded systems, instead of depending only on centralized cloud infrastructure. This approach is becoming more important as the demand for real-time, personalized AI applications continues to grow. By training models closer to where the data is generated, edge-based ML enables faster responses, helps reduce latency, and enhances user privacy by minimizing the need to transmit sensitive data to the cloud. It is also especially useful when devices operate in environments with limited or unreliable network connectivity, because they can keep functioning without a constant link to the cloud.

Benefits:

One significant advantage of training ML models directly on edge devices is reduced latency. By processing data locally, devices can make immediate decisions without the delays caused by transmitting data back and forth to cloud servers. This responsiveness is extremely important for applications like real-time health monitoring, autonomous driving, and industrial automation.

Additionally, training machine learning models at the edge enhances user privacy. Since sensitive data can be processed and stored directly on the user's device rather than being sent to centralized cloud servers, the risk of data breaches or unauthorized access during transmission is substantially reduced. This local data handling limits the exposure of personal or confidential information and gives users greater control over their data. Edge-based training also aligns well with privacy regulations such as the General Data Protection Regulation (GDPR), which emphasizes strict data security, transparency, and explicit user consent. By keeping personal data localized, edge training not only improves security but can also make it easier for organizations to comply with privacy laws, protecting users' rights and maintaining trust.

Efficiency and resilience are important benefits of edge training. By training machine learning models directly on edge devices, these devices become capable of processing data locally without relying on constant internet connectivity. This local processing allows edge devices to continue operating effectively even in environments where network connections are weak, unstable, or completely unavailable. Because they are not fully dependent on cloud infrastructure, edge devices can quickly adapt to changes, respond in real-time, and update their ML models based on immediate local data. As a result, edge training ensures reliable performance and uninterrupted operation, making it particularly valuable for remote locations, emergency scenarios, and harsh environments where cloud-based solutions might fail or become unreliable.

Examples:

A smart thermostat in a home can learn a user’s preferences for temperature and adjust automatically based on real-time inputs, like time of day or weather conditions. Similarly, a fitness tracker can track user activity patterns and adapt its recommendations for workouts or rest periods based on how the user is performing each day. These devices don’t need to rely on cloud servers to update or personalize their behavior — they can do it instantly on the device, which makes them more responsive and efficient.

In smart agriculture, edge computing is used to enhance crop monitoring and optimize farming practices. Devices like soil sensors, drones, and automated irrigation systems are equipped with sensors that collect data on soil moisture, temperature, and crop health. Edge devices process this data locally, enabling real-time decisions for tasks like irrigation, fertilization, and pest control.

In smart retail, edge computing is used to improve inventory management and customer experience. Retailers use smart shelves, RFID tags, and in-store cameras equipped with sensors to track inventory and monitor customer behavior. By processing this data locally on edge devices, retailers can manage stock levels, detect theft, and optimize store layouts in real-time. RFID tags placed on products can detect when an item is removed from the shelf. Using edge processing, the system can immediately update the inventory count and trigger a restocking request if an item’s stock is low.

Research Papers:

An important contribution to the understanding of machine learning (ML) training at the edge is the research paper "Making Distributed Edge Machine Learning for Resource-Constrained Communities and Environments Smarter: Contexts and Challenges" by Truong et al. (2023). This paper focuses on training ML models directly on edge devices in communities and environments facing limitations, such as unstable network connections, limited computational resources, and scarce technical expertise. The authors emphasize the necessity of developing context-aware ML training methods specifically tailored to these environments. Traditional centralized ML training methods often fail to operate effectively in such constrained settings, highlighting the need for decentralized, localized solutions. Truong et al. explore various challenges, including managing data efficiently, deploying suitable software frameworks, and designing intelligent runtime strategies that allow edge devices to train models effectively despite limited resources. Their work points out significant research directions, advocating for more adaptable and sustainable ML training solutions that genuinely reflect the technological and social contexts of resource-limited environments.

Tools and Frameworks:

Frameworks like TensorFlow Lite, PyTorch Mobile, and Edge Impulse are designed to support edge-based model training and inference. These tools allow developers to build and fine-tune models specifically for deployment on low-power devices.

Technical Challenges:

Despite its advantages, ML training at the edge presents challenges, including limited processing power, memory constraints, and energy efficiency. Edge devices often lack the computational resources of cloud servers, requiring lightweight models, optimized algorithms, and energy-efficient hardware.

Real World Applications: A well-known example is Apple's use of on-device training for personalized voice recognition with Siri. Instead of uploading user voice data to the cloud, Apple uses local training to improve accuracy over time while maintaining user privacy.

Model Compression Techniques

Despite the challenges of ML at the edge, a variety of methods can make training more efficient and make heavy workloads compatible with even the limited computing power of certain edge devices.

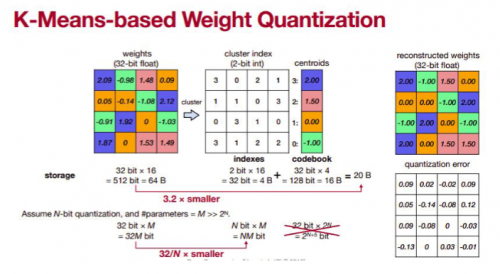

Quantization: Quantization is a method that reduces the precision of the numbers in a model, easing the burden on both the computational power and the memory of edge devices. There are multiple forms of quantization, but each one sacrifices some precision: little enough that accuracy is mostly maintained, but enough that the numbers are easier to handle. For example, converting from 32-bit floating point to 8-bit integer datatypes means significantly less memory is used, and for some models the loss in precision may be negligible. Another example is K-means based Weight Quantization, which clusters the values of a weight matrix around a small set of centroids so that each weight can be represented by its nearest centroid. An example is shown below:

In recent work, Quantized Neural Networks (QNNs) have demonstrated that even extreme quantization—such as using just 1-bit values for weights and activations—can retain near state-of-the-art accuracy across vision and language tasks [12]. This type of quantization drastically reduces memory access requirements and replaces expensive arithmetic operations with fast, low-power bitwise operations like XNOR and popcount. These benefits are especially important for edge deployment, where energy efficiency is critical. In addition to model compression, Hubara et al. also show that quantized gradients—using as little as 6 bits—can be employed during training with minimal performance loss, further enabling efficient on-device learning [12]. QNNs have achieved strong results even on demanding benchmarks like ImageNet, while offering significant speedups and memory savings, making them one of the most practical solutions for edge AI deployment [12].
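The XNOR and popcount operations mentioned above can be illustrated with a small sketch. It assumes weights and activations have already been binarized to +1/-1 and packs them into Python integers, with a set bit standing for +1. The helper names `pack_signs` and `binary_dot` are hypothetical and are not taken from [12]; real QNN kernels operate on machine words in optimized code.

```python
def pack_signs(values):
    """Pack a list of +1/-1 values into an int; bit i is 1 where values[i] is +1."""
    bits = 0
    for i, v in enumerate(values):
        if v > 0:
            bits |= 1 << i
    return bits


def binary_dot(a_bits, b_bits, n):
    """Dot product of two length-n {-1, +1} vectors stored as bit masks."""
    mask = (1 << n) - 1
    xnor = ~(a_bits ^ b_bits) & mask      # 1 wherever the two signs agree
    matches = bin(xnor).count("1")        # popcount
    return 2 * matches - n                # agreements minus disagreements


a = [1, -1, 1, 1, -1, -1, 1, -1]
b = [1, 1, -1, 1, -1, 1, 1, -1]

# The bitwise result matches the ordinary multiply-and-add dot product.
reference = sum(x * y for x, y in zip(a, b))
print(binary_dot(pack_signs(a), pack_signs(b), len(a)), reference)
```

The multiply-accumulate loop is replaced by one XOR, one NOT, and one bit count, which is where the speed and energy savings of 1-bit networks come from.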

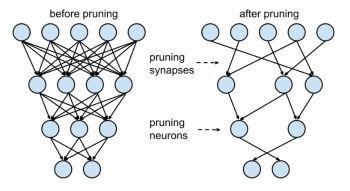
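The floating-point to integer conversion described in the quantization paragraph can be sketched as follows. This is a minimal illustration that assumes symmetric, per-tensor 8-bit quantization with a single scale factor. The function names are hypothetical, and production toolchains typically add calibration data, zero points, and per-channel scales.

```python
def quantize_int8(weights):
    """Map floats to integers in [-127, 127] using one shared scale factor."""
    max_abs = max(abs(w) for w in weights)
    scale = max_abs / 127 if max_abs > 0 else 1.0
    quantized = [max(-127, min(127, round(w / scale))) for w in weights]
    return quantized, scale


def dequantize(quantized, scale):
    """Recover approximate float values from the stored integers."""
    return [q * scale for q in quantized]


weights = [0.5, -1.27, 0.0, 1.0]
q, scale = quantize_int8(weights)
restored = dequantize(q, scale)

# Each weight now needs 1 byte instead of 4; the error is at most half a step.
for original, approx in zip(weights, restored):
    print(f"{original:+.4f} -> {approx:+.4f}")
```

The rounding error per weight is bounded by half of `scale`, which is why models whose weights fall in a narrow range often lose little accuracy.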

Pruning:

Pruning is an optimization technique that systematically removes low-salience parameters—such as weakly contributing weights or redundant hypothesis paths—from a machine learning model or decoding algorithm to reduce computational overhead. In the context of edge computing, where resources like memory bandwidth, power, and processing time are limited, pruning enables the deployment of performant models within strict efficiency constraints.
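For the weight-removal case, one common heuristic is magnitude pruning, sketched below. Using absolute value as the measure of salience is an assumption made for illustration, since the text above does not prescribe a criterion, and `magnitude_prune` is a hypothetical helper rather than a library function.

```python
def magnitude_prune(weights, sparsity):
    """Zero out the fraction `sparsity` of weights with the smallest magnitude."""
    k = int(len(weights) * sparsity)
    if k == 0:
        return list(weights)
    # Indices of the k weights closest to zero.
    by_magnitude = sorted(range(len(weights)), key=lambda i: abs(weights[i]))
    drop = set(by_magnitude[:k])
    return [0.0 if i in drop else w for i, w in enumerate(weights)]


layer = [0.9, -0.05, 0.4, 0.01, -0.7, 0.02]
print(magnitude_prune(layer, sparsity=0.5))
```

In practice the zeroed weights only save memory and compute if they are stored in a sparse format or removed structurally, and the model is usually fine-tuned afterwards to recover accuracy.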

In statistical machine translation (SMT) systems, pruning is particularly critical during the decoding phase, where the search space of possible translations grows exponentially with sentence length. Techniques such as histogram pruning and threshold pruning are employed to manage this complexity. Histogram pruning restricts the number of candidate hypotheses retained in a decoding stack to a fixed size 𝑛, discarding the remainder. Threshold pruning eliminates hypotheses whose scores fall below a proportion 𝛼 of the best-scoring candidate, effectively filtering out weak candidates early.
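The two pruning rules above can be sketched in a few lines. The sketch assumes each hypothesis is a `(text, score)` pair whose score is a positive probability, so that "a proportion α of the best score" is well defined. Decoders that work with log-probabilities would subtract a margin instead. The function names are hypothetical.

```python
def histogram_prune(hypotheses, n):
    """Keep only the n best-scoring hypotheses in the stack."""
    return sorted(hypotheses, key=lambda h: h[1], reverse=True)[:n]


def threshold_prune(hypotheses, alpha):
    """Keep hypotheses whose score is at least alpha times the best score."""
    best = max(score for _, score in hypotheses)
    return [h for h in hypotheses if h[1] >= alpha * best]


stack = [("a", 0.40), ("b", 0.30), ("c", 0.05), ("d", 0.20)]

print(histogram_prune(stack, n=2))         # fixed stack size
print(threshold_prune(stack, alpha=0.25))  # relative score cutoff
```

Histogram pruning bounds the work per stack regardless of the scores, while threshold pruning adapts to how peaked the score distribution is, which is why decoders often apply both.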

The paper by Banik et al. introduces a machine learning-based dynamic pruning framework that adaptively tunes pruning parameters—namely stack size and beam threshold—based on structural features of the input text, such as sentence length, syntactic complexity, and the distribution of stop words. Rather than relying on static hyperparameters, this method uses a classifier (CN2 algorithm) trained on performance data to predict optimal pruning configurations at runtime. Experimental results showed consistent reductions in decoding latency (up to 90%) while maintaining or improving translation quality, as measured by BLEU scores [13].

This adaptive pruning paradigm is highly relevant to edge inference pipelines, where models must maintain a balance between latency and predictive accuracy. By intelligently limiting the hypothesis space and focusing computational resources on high-probability paths, pruning supports real-time, resource-efficient processing in edge NLP and embedded translation systems.

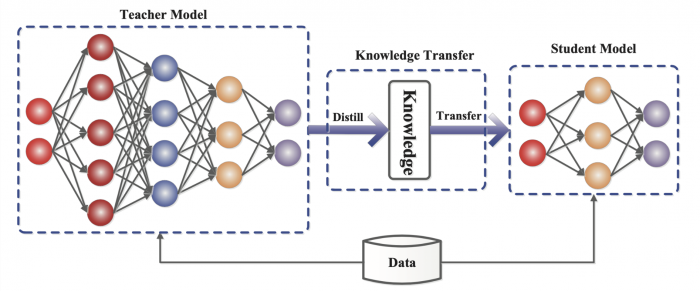

Distillation:

Distillation is a key strategy for reducing model complexity in edge computing environments. Instead of training a compact student model on hard labels—discrete class labels like 0, 1, or 2—it is trained on the soft outputs of a larger teacher model. These soft labels represent probability distributions over all classes, offering more nuanced supervision. For instance, rather than telling the student the input belongs strictly to class 3, a teacher might output “70% class 3, 25% class 2, 5% class 1.” This richer feedback helps the student model capture subtle relationships between classes that hard labels miss. Beyond reducing computational demands, distillation enhances generalization by conveying more informative training signals. It also benefits from favorable data geometry—when class distributions are well-separated and aligned—and exhibits strong monotonicity, meaning the student model reliably improves as more data becomes available [11]. These properties make it exceptionally suited for edge devices where training data may be limited, but efficient inference is crucial.
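The difference between hard and soft labels can be made concrete with a small sketch. It reuses the "70% class 3, 25% class 2, 5% class 1" teacher output from the paragraph above and compares the cross-entropy loss a student would see under each kind of target. The student logits are made-up values for illustration, and refinements such as temperature scaling are omitted.

```python
import math


def softmax(logits):
    """Convert raw scores into a probability distribution."""
    m = max(logits)
    exps = [math.exp(z - m) for z in logits]
    total = sum(exps)
    return [e / total for e in exps]


def cross_entropy(target_probs, predicted_probs):
    """Loss of a prediction against a (possibly soft) target distribution."""
    return -sum(t * math.log(p) for t, p in zip(target_probs, predicted_probs) if t > 0)


teacher_soft = [0.05, 0.25, 0.70]   # classes 1, 2, 3 as in the text's example
hard_label = [0.0, 0.0, 1.0]        # "strictly class 3"
student_probs = softmax([0.2, 1.0, 2.0])  # hypothetical student output

print("loss vs hard label:", cross_entropy(hard_label, student_probs))
print("loss vs soft label:", cross_entropy(teacher_soft, student_probs))
```

With the hard label, only the probability assigned to class 3 affects the loss. With the soft label, the student is also penalized for misjudging how plausible classes 1 and 2 are, which is the extra signal that distillation transfers.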

In most cases, knowledge distillation in edge environments involves a large, high-capacity model trained in the cloud acting as the teacher, while the smaller, lightweight student model is deployed on edge devices. A less common but emerging practice is edge-to-edge distillation, where a more powerful edge node or edge server functions as the teacher for other nearby edge devices. This setup is especially valuable in federated, collaborative, or hierarchical edge networks, where cloud connectivity may be limited or privacy concerns necessitate local training. Distillation can also be combined with techniques such as quantization or pruning to further optimize model performance under hardware constraints. The table below compares the three techniques:

| Technique | Description | Primary Benefit | Trade-offs | Ideal Use Case |
|---|---|---|---|---|
| Pruning | Removes unnecessary weights or neurons from a neural network. | Reduces model size and computation. | May require retraining or fine-tuning to preserve accuracy. | Useful for deploying models on devices with strict memory and compute constraints. |
| Quantization | Converts high-precision values (e.g., 32-bit float) to lower precision (e.g., 8-bit integer or binary). | Lowers memory usage and accelerates inference. | Risk of precision loss, especially in very small or sensitive models. | Ideal when real-time inference and power efficiency are essential. |
| Distillation | Trains a smaller model (student) using the output probabilities of a larger, more complex teacher model. | Preserves performance while reducing model complexity. | Requires access to a trained teacher model and additional training data. | Effective when deploying accurate, lightweight models under data or resource constraints. |

Usage and Applications of AI Agents

As artificial intelligence and machine learning technologies continue to mature, they pave the way for the development of intelligent AI agents capable of autonomous, context-aware behavior, with the goal of efficiently performing tasks specified by users. These agents combine perception, reasoning, and decision-making to execute tasks with minimal human intervention. When deployed on edge devices, AI agents can operate with low latency, preserve user privacy, and adapt to local data—making them ideal for real-time, personalized applications in homes, vehicles, factories, and beyond.

To function effectively, an agent must first perceive its environment and understand the task—often defined by the user. Then, it must reason about the optimal steps to accomplish that task, and finally, it must act on those decisions. These three components—perception, reasoning, and action—are essential to the agent’s ability to operate accurately and autonomously in dynamic environments.

Reasoning: The agent must be able to think sequentially, and decompose its specified tasks into a sequence of specific steps in order to accomplish its goal. It must also have some memory storage in order to remember what it has done, as well as the results of its sequence of actions in order to learn for future steps.

Autonomy: The agent must choose from the availability of possible steps, and operate based on its reasoning without step-by-step instructions from the user.

Tools: These tasks, however, are impossible to accomplish without the correct tools. Even if an AI agent understands how to go about carrying out a task for optimal results, it must have the actual means to do it. This can include the ability to use and interact with APIs, interpret code, and access certain databases.

Utilizing AI agents on edge devices can be tricky due to the computational and reasoning power needed. There are methods to accomplish this, such as SLMs that query LLMs as needed (discussed later) or the use of more powerful edge devices to carry out tasks. Running agents at the edge can be essential if latency is a major issue or if the agent is exposed to sensitive user data. Additionally, an agent running on edge devices specific to a user may be able to better learn that user's patterns and preferences and react accordingly to provide the best possible outcome for that user.

4.3 ML Model Optimization at the Edge

The Need for Model Optimization at the Edge

Given the constrained resources and the inherently dynamic environments in which edge devices must operate, model optimization is a crucial part of machine learning in edge computing. The most widely used approach today is simply to give the model an exceptionally large set of parameters to train. This can be feasible when the hardware is very advanced and powerful, and it is necessary for systems such as Large Language Models (LLMs). However, it is no longer viable when dealing with the devices and environments at the edge. It is crucial to identify the best parameters and training methodology so as to minimize the amount of work done by these devices while compromising as little as possible on the accuracy of the models. There are multiple ways to do this, including optimization or augmentation of the dataset itself and optimization of how the work is partitioned among the edge devices.

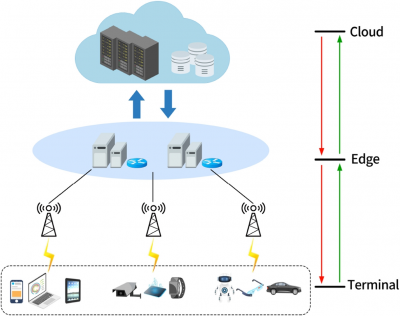

Edge and Cloud Collaboration

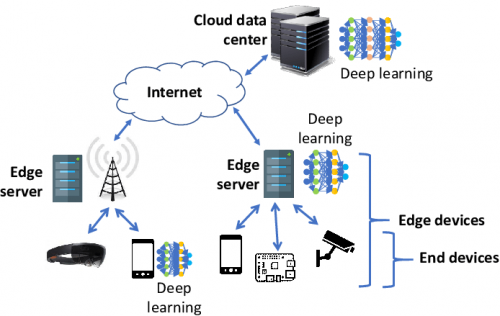

One methodology that is often used involves collaboration between edge and cloud devices. The cloud can process workloads that require far more resources than edge devices can provide. On the other hand, edge devices, which can store and process data locally, may offer lower latency and more privacy. Given the advantages of each, many have proposed that the best way to handle machine learning is through a combination of edge and cloud computing.

The primary issue facing this computing paradigm, however, is the problem of optimally selecting which workloads should be done on the cloud and which should be done on the edge. This is a crucial problem to solve, as the correct partition of workloads is the best way to ensure that the respective benefits of the devices can be leveraged. A common way to do this is to run certain computing tasks on the candidate devices and measure the time and resources that they take. An example of this is the profiling step done in EdgeShard [1] and Neurosurgeon [4]. Other frameworks implement similar steps, where the capabilities of different devices are tested in order to allocate their workloads and determine the limit at which they can provide efficient functionality. If the workload is beyond the limits of the devices, it can be sent to the cloud for processing.
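Once per-layer timings have been profiled, choosing where to cut a model between edge and cloud reduces to a small search. The sketch below is an illustration in the spirit of such profiling-based systems, not the actual algorithm of EdgeShard [1] or Neurosurgeon [4]. All numbers, parameter names, and the function `best_split` are hypothetical, and the cost of returning the result to the device is ignored.

```python
def best_split(edge_ms, cloud_ms, output_kb, input_kb, bandwidth_kb_per_ms):
    """Return (split, latency): layers [0, split) run on the edge, the rest in the cloud."""
    n = len(edge_ms)
    best = None
    for split in range(n + 1):
        compute = sum(edge_ms[:split]) + sum(cloud_ms[split:])
        if split == n:
            transfer = 0.0  # everything ran on the edge, nothing is uploaded
        else:
            # Upload the raw input, or the output of the last edge layer.
            payload = input_kb if split == 0 else output_kb[split - 1]
            transfer = payload / bandwidth_kb_per_ms
        total = compute + transfer
        if best is None or total < best[1]:
            best = (split, total)
    return best


# Hypothetical profiled values for a 4-layer model.
edge_ms = [5, 8, 20, 30]         # per-layer time on the edge device
cloud_ms = [1, 1, 2, 3]          # per-layer time in the cloud
output_kb = [400, 50, 20, 1]     # size of each layer's output
print(best_split(edge_ms, cloud_ms, output_kb, input_kb=600, bandwidth_kb_per_ms=10))
```

With these numbers the best cut is after the second layer: the early layers shrink the data enough that uploading their output is cheaper than uploading the raw input, while the expensive later layers still run in the cloud.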

The key advantage of this is that it is able to utilize the resources of the edge devices as necessary, allowing increased data privacy and lower latency. Since workloads are only processed in the cloud as needed, this will reduce the overall amount of time needed for processing because data is not constantly sent back and forth. It also allows for much less network congestion, which is crucial for many applications.

Optimizing Workload Partitioning

The key idea behind much of the optimization done in machine learning on edge systems is to fully utilize the heterogeneous devices that these systems often contain. As such, it is important to understand the capabilities of each device so as to make full use of its advantages. Devices can vary greatly, from smartphones with more powerful computational abilities to Raspberry Pis to sensors. More difficult tasks are offloaded to the more powerful devices, while simpler tasks, or models that have already been partially pretrained, can be sent to the smaller devices. In some cases, as in Mobile-Edge [2], a task may be dropped altogether if the resources are deemed insufficient. In this way, exceptionally difficult tasks do not block tasks that can be executed, and the system can continue working.
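The assign-or-drop behaviour described above can be sketched with a simple greedy rule. This is an illustrative stand-in, not the scheduling policy of Mobile-Edge [2]. Device capacities and task costs are hypothetical abstract units, and `assign_tasks` is a made-up helper.

```python
def assign_tasks(tasks, devices):
    """Greedily place the largest tasks first on the device with the most spare capacity.

    tasks:   {task_name: cost}
    devices: {device_name: capacity}
    Returns (placement, dropped).
    """
    remaining = dict(devices)
    placement, dropped = {}, []
    for name, cost in sorted(tasks.items(), key=lambda t: t[1], reverse=True):
        device = max(remaining, key=remaining.get)
        if remaining[device] >= cost:
            placement[name] = device
            remaining[device] -= cost
        else:
            dropped.append(name)  # no device can take it; do not block the rest
    return placement, dropped


devices = {"smartphone": 10, "raspberry_pi": 4, "sensor": 1}
tasks = {"train_cnn": 12, "fine_tune": 7, "detect_anomaly": 3, "threshold_check": 1}

placement, dropped = assign_tasks(tasks, devices)
print(placement)
print("dropped:", dropped)
```

Here the oversized `train_cnn` task is dropped instead of stalling the queue, and the remaining tasks are spread across the devices that can handle them.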

Dynamic Models

Given the dynamic nature of the environments in which edge devices must function, as well as the heterogeneity of the devices themselves, a dynamic model of machine learning is often employed. Such models must keep track of the currently available resources, including computation usage and power, as well as network traffic. These may change very often depending on the workloads and devices in the system. As such, training models to continuously monitor the system and dynamically distribute workloads is a very important part of optimization. Simply offloading larger tasks to more powerful devices may be counterproductive if a device already has all of its computing resources or network capacity used up by another workload.

The way this is commonly done is by using the profiling step described above as a baseline. Then, a machine learning model utilizes the data to estimate the performance of devices and/or layers. During runtime, a similar process is employed which may update the data used and help the model refine its predictions. Network traffic is also taken into account at this stage in order to preserve the edge computing benefit of providing lower latency. Using all of this data and updates at runtime, the partitioning model is able to dynamically distribute workloads at runtime in order to optimize the workflow and ensure each device is utilizing its resources in the most efficient manner. Two very good examples of how such a system is specifically deployed are the Neurosurgeon and EdgeShard systems, shown above.
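The profile-then-refine loop described above can be sketched as follows. The text describes a learned model that predicts device performance. As a deliberately simpler stand-in, this sketch uses an exponential moving average to blend the profiled baseline with runtime measurements. The class name, the smoothing factor, and all timings are assumptions for illustration.

```python
class LatencyEstimator:
    """Track per-device latency estimates, starting from profiled baselines."""

    def __init__(self, profiled_ms, smoothing=0.3):
        self.estimate = dict(profiled_ms)
        self.smoothing = smoothing

    def observe(self, device, measured_ms):
        """Blend a new runtime measurement into the running estimate."""
        old = self.estimate[device]
        self.estimate[device] = (1 - self.smoothing) * old + self.smoothing * measured_ms

    def pick(self, transfer_ms):
        """Choose the device with the lowest estimated compute plus network time."""
        return min(self.estimate, key=lambda d: self.estimate[d] + transfer_ms.get(d, 0.0))


est = LatencyEstimator({"edge_gpu": 20.0, "phone": 50.0}, smoothing=0.5)
network = {"edge_gpu": 5.0, "phone": 0.0}   # hypothetical transfer times

print(est.pick(network))          # the edge GPU is fastest according to profiling
est.observe("edge_gpu", 100.0)    # at runtime it turns out to be busy
print(est.pick(network))          # the workload is redirected to the phone
```

The point of the sketch is the feedback loop: the static profile only seeds the estimate, and runtime observations can overturn it when a normally fast device is saturated.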

Horizontal and Vertical Partitioning

There are two major ways in which these models split workloads in order to optimize machine learning: horizontal and vertical partitioning [3]. Given a set of layers that ranges from the cloud to the edge, vertical partitioning involves splitting the workload between the layers. For example, if a task is deemed to need a large amount of computational resources, it may go to the cloud to be completed and preprocessed. On the other hand, if only a small amount of computational power is required, the work can go to edge devices. Such partitioning also depends on the confidence and accuracy of the learning so far. If a task is completed on an edge device and the resulting accuracy is found to be very low, it can be sent to the cloud; if the accuracy is already fairly high and the model needs only a little more work to reach the acceptable threshold, it may be sent to edge devices to free up network traffic to the cloud and reduce latency [3].
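The confidence-based escalation between layers can be sketched at inference time as follows. This is one possible reading of the rule above, not the method of [3]. The two model functions are hypothetical stubs that return a `(label, confidence)` pair, and the 0.8 threshold is an arbitrary choice.

```python
def edge_model(sample):
    """Hypothetical small on-device model: confident only on easy inputs."""
    return ("cat", 0.95) if sample == "easy" else ("dog", 0.40)


def cloud_model(sample):
    """Hypothetical large cloud model."""
    return ("fox", 0.99)


def classify(sample, edge, cloud, threshold=0.8):
    """Answer on the edge when confident enough, otherwise escalate to the cloud."""
    label, confidence = edge(sample)
    if confidence >= threshold:
        return label, "edge"
    label, _ = cloud(sample)
    return label, "cloud"


print(classify("easy", edge_model, cloud_model))
print(classify("hard", edge_model, cloud_model))
```

Only the inputs the edge model is unsure about pay the network round trip, which keeps cloud traffic and average latency low.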

The second approach is horizontal partitioning. This involves splitting the workload among the devices within a single layer rather than among the layers themselves. This is similar to what has been described in previous sections, as it provides a means of fully utilizing the heterogeneous capabilities found in edge devices. The same kind of decision-making used in vertical partitioning applies, but all of the devices that the workload is split across operate on the same layer [3]. To fully optimize a machine learning model, both horizontal and vertical partitioning must be used.

Distributed Learning

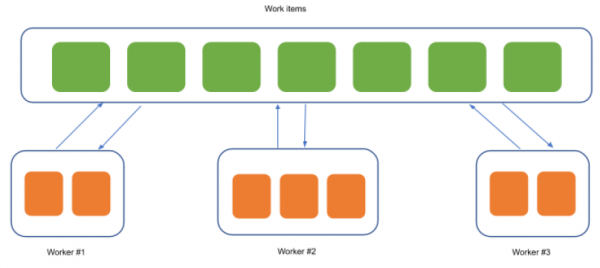

Distributed learning is a process in which, instead of giving all of the data to every node, each node takes in and processes only a subset of the overall data and then sends its results to a central server that holds the main model. Each node sends these updates periodically, and because it processes only part of the data, the workload is much easier for edge devices to handle. This can also reduce the time and computational burden on the cloud and network, because the central server is no longer performing all of the computations. One popular way of accomplishing this is parallel gradient descent. Gradient descent by itself is a very useful means of training a model. Parallel gradient descent follows a similar process, but instead of computing a single gradient, it aggregates the gradients calculated by each node in order to update the central model. This makes efficient use of each node and ensures that the data used to update the model does not exceed the memory constraints of the edge devices being used as nodes.
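Parallel gradient descent as described above can be sketched on a toy problem. The example fits a single weight `w` so that `y ≈ w * x`, with the data split into three shards that stand in for three edge nodes. The nodes are simulated sequentially in one process, and the learning rate and step count are arbitrary illustrative choices.

```python
def local_gradient(w, shard):
    """Gradient of the mean squared error for y ≈ w * x on one node's shard."""
    return sum(2 * (w * x - y) * x for x, y in shard) / len(shard)


def train(shards, steps=200, lr=0.01):
    """Central server loop: gather per-node gradients, average them, update w."""
    w = 0.0
    total = sum(len(s) for s in shards)
    for _ in range(steps):
        # On a real system each gradient is computed in parallel on its own node.
        grads = [local_gradient(w, s) for s in shards]
        # Weight each node's gradient by how much data it holds.
        w -= lr * sum(g * len(s) for g, s in zip(grads, shards)) / total
    return w


# Data generated from y = 3x, split across three simulated nodes.
shards = [
    [(1, 3), (2, 6)],
    [(3, 9), (4, 12)],
    [(5, 15), (6, 18)],
]
print(train(shards))
```

Each node only ever touches its own two samples, yet the averaged update equals the gradient over the full dataset, so the central model converges to the same weight it would reach with all data in one place.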

Asynchronous Scheduling: One important aspect of distributed learning is the means by which updates are delivered to the model. Each node may have a different amount of data, memory, and computational power, so the time it takes to process and send an update will not be the same. Synchronous algorithms wait until every node has finished its respective processing, and then the calculations from all nodes are sent to update the central model. Although this can make updating easier, any node that lags behind the others will greatly reduce the speed at which the model is trained. It also leaves nodes idle while others are still processing, which can be highly inefficient. In asynchronous scheduling, each node instead sends its results to the main server as soon as it has finished its own processing, rather than waiting for all the others to finish. However, this requires more communication, because after each update every node must fetch the updated model from the central server. This can be challenging to do repeatedly, but the efficiency gained is often worth it.
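The cost of waiting for stragglers can be illustrated with a small simulation that counts how many updates reach the server within a fixed time budget under each scheme. The node names and per-update durations are hypothetical, and communication time is ignored, which favours the asynchronous case.

```python
import heapq


def sync_updates(durations, time_budget):
    """Every round waits for the slowest node, then applies one update per node."""
    rounds = int(time_budget // max(durations.values()))
    return rounds * len(durations)


def async_updates(durations, time_budget):
    """Each node pushes its update as soon as it finishes, then starts again."""
    events = [(d, name) for name, d in durations.items()]
    heapq.heapify(events)  # ordered by finish time
    applied = 0
    while events[0][0] <= time_budget:
        finish, name = heapq.heappop(events)
        applied += 1  # server applies the update; node pulls the new model
        heapq.heappush(events, (finish + durations[name], name))
    return applied


durations = {"phone": 1.0, "pi": 2.0, "sensor": 5.0}  # time per local update
print("synchronous: ", sync_updates(durations, time_budget=10.0))
print("asynchronous:", async_updates(durations, time_budget=10.0))
```

In the synchronous run the phone spends most of its time idle waiting for the sensor, while in the asynchronous run it contributes an update every time unit. Note that the simulation counts updates only and does not model the staleness of updates computed against an older copy of the model.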

Federated learning: Federated learning is another means by which the data as well as computational burden is distributed among a set of nodes, thus reducing network traffic and strain on the central servers. It is similar to distributed learning, but the key difference lies in the data partitioning. Unlike distributed learning, data used in federated learning is not shared with the central servers. Instead, only local data from each of the nodes is used to train the model. Then, the only part shared with the central model is the updated parameters. A key aspect of this is that federated learning provides a greater amount of data privacy, which is crucial for certain applications dealing with sensitive data. Therefore, it is especially useful to utilize edge devices to perform federated learning. Federated learning is discussed in detail in chapter 5 of this wiki.
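The server-side step, in which only parameters are combined, can be sketched as a weighted average. Weighting each client by its number of local samples is a common choice (often called federated averaging) but is an assumption here, since the text above does not specify an aggregation rule. The parameter vectors are made-up values.

```python
def federated_average(client_updates):
    """Combine client parameters, weighted by how many local samples each used.

    client_updates: list of (parameters, num_local_samples) pairs.
    The raw training data never appears here; only parameters are shared.
    """
    total = sum(n for _, n in client_updates)
    size = len(client_updates[0][0])
    return [
        sum(params[i] * n for params, n in client_updates) / total
        for i in range(size)
    ]


updates = [
    ([1.0, 2.0], 10),   # client A trained on 10 local samples
    ([3.0, 4.0], 30),   # client B trained on 30 local samples
]
print(federated_average(updates))
```

The server sees two short parameter vectors and two sample counts, and nothing about the samples themselves, which is the source of the privacy benefit described above.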

Transfer Learning

Transfer learning is a method of machine learning in which a pretrained model is sent to the different nodes for further processing and fine-tuning. The initial training of the model may involve a very large amount of data and could place a major burden on the device that must execute it, which may not be feasible for edge devices. Therefore, the bulk of the model training is done by a more powerful machine, such as a cloud server, and the pretrained model is then sent to the edge device. This reduces the computational burden on the edge device and allows it to fine-tune the model using the data it collects without having to train the whole system from scratch. One related form of this is knowledge distillation, in which a smaller model is trained to mimic the outputs of a larger model. This is often the case when edge and cloud systems are used in combination.
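A minimal sketch of the fine-tuning step might look like the following. It assumes, purely for illustration, that the pretrained part is a tiny frozen feature extractor with hypothetical weights and that the edge device trains only a small output layer (the "head") on its local data; real models are far larger, but the division of work is the same.

```python
# Sketch of fine-tuning on an edge device: the pretrained features stay frozen,
# and only a small output layer is trained on locally collected data.

# Assumed to arrive from the cloud; the values are hypothetical.
PRETRAINED_FEATURE_WEIGHTS = (0.5, -0.25)

def extract_features(x):
    """Frozen feature extractor (ReLU units); never updated on the device."""
    return [max(0.0, w * x) for w in PRETRAINED_FEATURE_WEIGHTS]

def predict(head, x):
    return sum(h * f for h, f in zip(head, extract_features(x)))

def fine_tune_head(data, head, lr=0.5, epochs=100):
    """Gradient descent on the head weights only, using mean squared error."""
    head = list(head)
    for _ in range(epochs):
        grads = [0.0] * len(head)
        for x, y in data:
            feats = extract_features(x)
            err = sum(h * f for h, f in zip(head, feats)) - y
            for i, f in enumerate(feats):
                grads[i] += 2 * err * f / len(data)
        head = [h - lr * g for h, g in zip(head, grads)]
    return head

# Local data collected on the device; here the target happens to be y = |x|.
local_data = [(2.0, 2.0), (4.0, 4.0), (-2.0, 2.0), (-4.0, 4.0)]
head = fine_tune_head(local_data, head=[0.0, 0.0])
print([round(h, 4) for h in head])
```

Only two numbers are trained on the device in this sketch, which is why fine-tuning can fit within resource limits that would rule out training the whole model locally.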

Methods of Data Optimization

Data Selection: Certain types of data may be more useful than others, so only the data that most affects the accuracy of a model needs to be offloaded to edge devices. This decreases their workload because less data must be processed, while preserving the accuracy of the model as much as possible. The same reasoning applies to models: rather than offloading an entire LLM onto a device, which may overload its computational abilities without providing enough benefit, a Small Language Model that handles simple commands and prompts can be deployed instead.

Data Compression: Data can be compressed to a smaller form in order to fit the constraints of edge devices. This is especially important given their limited memory, and it also reduces the workload. Quantization, discussed previously, is a prevalent example of this.

Container Workloads: Container workloads can be very useful, as they package all of the resources and data the device needs to process the work. By examining the computational abilities of each device, differently sized workloads can be allocated as needed to maximize the efficiency of the training.

Batch size tuning: The batch size used by a model is very important when considering the memory constraints of edge devices. Smaller batch sizes require less memory per training step, which makes each step quicker to process on a constrained device and less likely to cause memory bottlenecks. This is related to partitioning, because the computing and memory capacity of the available devices are very important factors in both cases.
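As a rough illustration of batch size tuning, the sketch below picks the largest batch size that fits a device's memory budget. It assumes a deliberately simple linear memory model (a fixed cost for the model plus a per-sample cost); real memory use depends on the framework and architecture, and the function name and figures are hypothetical.

```python
# Sketch: choose the largest batch size that fits within a memory budget.
# Assumes memory use = model_bytes + batch_size * bytes_per_sample.

MIB = 1024 * 1024

def largest_batch_that_fits(memory_budget_bytes, model_bytes, bytes_per_sample,
                            candidates=(1, 2, 4, 8, 16, 32, 64, 128)):
    """Return the largest candidate batch size whose estimated footprint fits."""
    fitting = [b for b in candidates
               if model_bytes + b * bytes_per_sample <= memory_budget_bytes]
    if not fitting:
        raise ValueError("model does not fit even with the smallest batch size")
    return max(fitting)

# Hypothetical device: 512 MiB available, 200 MiB model, 6 MiB per sample.
batch_size = largest_batch_that_fits(512 * MIB, 200 * MIB, 6 * MIB)
print(batch_size)
```

In practice the per-sample cost would be measured on the target device rather than assumed, and the same check could be repeated for each device when workloads are partitioned.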

Utilizing Small Language Models (SLMs)

Large Language Models (LLMs) have recently become prevalent and can assist with a wide variety of tasks. However, running and training an LLM requires a significant amount of computational resources, which is often not feasible on edge devices. Most modern LLMs are cloud based, but this may lead to high latency and increased network traffic, especially when working with a large number of nodes.

One way that a similar system can be achieved on edge devices is by using SLMs. These are not as accurate as LLMs and have neither their breadth of knowledge nor the volume of training data, but for basic applications on edge devices they can be sufficient to accomplish many tasks. They are also often fine-tuned for the specific tasks they are deployed for, and they are much faster and more resource-efficient than LLMs. They can also provide much more privacy, because they can run on local devices without sharing user data with the cloud. This can be useful for a wide variety of edge applications. When needed, and if privacy constraints permit, an SLM can query an LLM for more complicated tasks. Because not every prompt leads to such a query, the benefits in network traffic, privacy, and latency are largely preserved.
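The fallback pattern described above could be sketched as follows. Everything here is an assumption made for illustration: the `Answer` type, the confidence score, the threshold value, and the two stub model functions are hypothetical stand-ins, not the interface of any real SLM or LLM service.

```python
# Sketch of SLM-first routing with an optional fallback to a cloud LLM.
from dataclasses import dataclass

@dataclass
class Answer:
    text: str
    confidence: float  # hypothetical score in [0, 1] reported by the model
    source: str        # "edge" or "cloud"

def local_slm(prompt):
    # Stand-in for an on-device model: pretend short prompts are easy.
    confidence = 0.9 if len(prompt.split()) <= 6 else 0.3
    return Answer(f"[slm] {prompt}", confidence, "edge")

def remote_llm(prompt):
    # Stand-in for a network call to a cloud-hosted LLM.
    return Answer(f"[llm] {prompt}", 0.95, "cloud")

def route(prompt, slm=local_slm, llm=remote_llm, threshold=0.7, allow_cloud=True):
    """Answer locally when possible; escalate only if permitted and needed."""
    local = slm(prompt)
    if local.confidence >= threshold or not allow_cloud:
        return local  # the prompt never leaves the device
    return llm(prompt)

print(route("turn on the lights").source)
print(route("summarize the maintenance logs from every sensor this week").source)
```

Setting `allow_cloud=False` models the case where privacy constraints forbid sending a prompt off the device, so the local answer is returned even when its confidence is low.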

Synthesis

In summary, edge-oriented machine learning optimization requires an integrated approach that combines model-level compression with system-level orchestration. Techniques such as quantization, structured and unstructured pruning, and knowledge distillation reduce the computational footprint and memory requirements of deep learning models, enabling deployment on resource-constrained devices without substantial loss in inference accuracy. Concurrently, dynamic workload partitioning, heterogeneity-aware scheduling, and adaptive runtime profiling allow the system to allocate tasks across edge and cloud tiers based on real-time availability of compute, bandwidth, and energy resources. This joint optimization across model architecture and execution environment is essential to meet the latency, privacy, and resilience demands of edge AI deployments.