Edge Computing Products and Frameworks

3.1 Industry Products: AWS as an Example

The previous chapters showed that edge computing plays a key role in applications that:

- Are latency critical

- Generate massive amounts of data that are not cost-effective to transfer

- Operate where Internet access is unreliable or unavailable

Edge computing has already proliferated across several industries. Companies such as Amazon (AWS), Microsoft (Azure), Google (Distributed Cloud Edge), and Nvidia (EGX Edge Computing Platform) have implemented edge solutions that let diverse industries use this form of computing for their operational needs. Covering each of these offerings in detail could fill an entire book, so for brevity we focus on Amazon Web Services' edge technology offerings. The rest of this section describes the edge products offered by Amazon and, for each, presents an example of how a customer is using it.

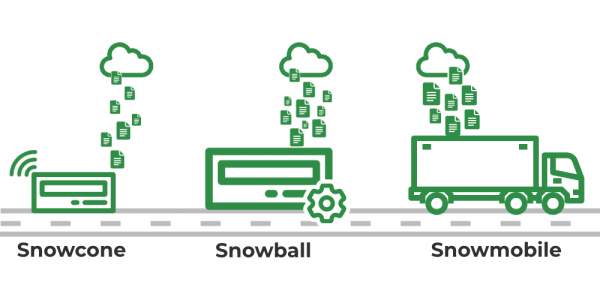

Snow Family

This product line lets customers store and move massive amounts of data (up to exabytes) in environments where Internet connectivity is limited, too slow, or too expensive. It also lets customers migrate data from physical data centers to the cloud and run compute on the devices themselves, much like a mobile data center. There are three main device types:

- Snowcone: A small and rugged edge computing device for environments with limited power and space.

- Snowball Edge: A larger device than Snowcone that can transfer up to 80 terabytes and can come equipped with GPUs for ML and video analysis.

- Snowmobile: A shipping container-sized device for extremely large-scale data transfers (100 petabytes at once), typically used when moving entire data centers to AWS.
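A quick back-of-the-envelope calculation shows why shipping a device can beat the network. The sketch below estimates how long a purely network-based transfer would take; the 80% sustained link utilization and the example link speeds are illustrative assumptions, not AWS figures.

```python
def transfer_days(data_terabytes: float, link_mbps: float, utilization: float = 0.8) -> float:
    """Days needed to push data over a link at a given sustained utilization.

    The 0.8 default utilization is an assumption for illustration only.
    """
    bits = data_terabytes * 1e12 * 8               # decimal terabytes -> bits
    effective_bps = link_mbps * 1e6 * utilization  # usable bits per second
    return bits / effective_bps / 86_400           # seconds -> days


if __name__ == "__main__":
    for mbps in (100, 1_000, 10_000):
        days = transfer_days(80, mbps)
        print(f"80 TB over {mbps} Mbps: {days:.1f} days")
```

Under these assumptions, filling a single 80-terabyte device's worth of data over a 100 Mbps link would take roughly three months, which is the kind of gap that makes a physical transfer attractive.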

Novetta, an advanced analytics company serving government entities such as defense and intelligence agencies, uses Snowball Edge with Amazon's EC2 and onboard storage to aid disaster response efforts in the field. The company uses it to post-process video surveillance feeds and to track the location of assets that are critical to disaster response, which lets it operate in environments with poor Internet connectivity. The government sets a high bar for security, and Snowball Edge, with its rugged design, is one of the few products certified for government use. Novetta can also deploy ML models trained in Amazon SageMaker on the Snowball Edge for low-latency object detection.

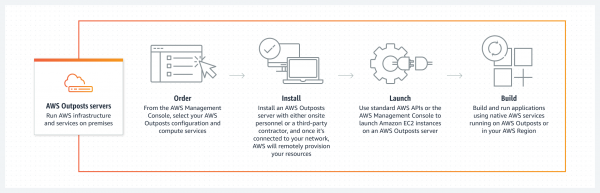

Outposts Family

This product family lets customers extend AWS functionality to on-premises servers, providing a hybrid cloud architecture. It is useful when a customer wants to use AWS infrastructure but cannot run in the cloud for data privacy or latency reasons, or prefers to run services mainly on local servers and scale out to the cloud when resource utilization increases.

Inmarsat, a mobile satellite communications provider, transitioned its IT operations to the AWS stack. During the transition, however, the company found that some of its applications did not fit a cloud-native infrastructure. For example, some workloads faced 20-30 ms of latency, which caused downstream performance issues. Outposts suited these workloads because the hardware could be deployed on-premises in Inmarsat's own data centers, which provided low-latency data access. Inmarsat also gained the ability to manage its Outposts systems with the same management interface used for the AWS cloud, and to fail over from an edge location to the cloud for high-availability applications.

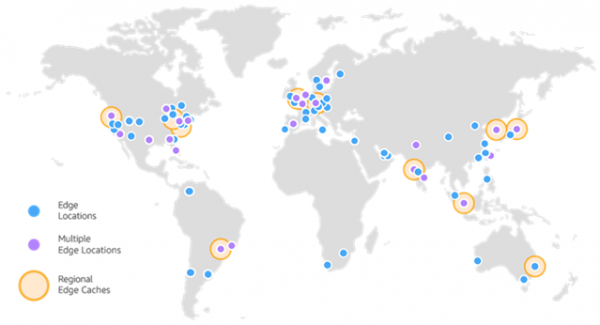

CloudFront

CloudFront is a global content delivery network (CDN) that delivers data, videos, applications, and other assets with low latency and high transfer speeds. It does so by distributing content to a network of edge locations around the world, so that the data is hosted closer to users. It integrates with other AWS services such as S3, EC2, and Lambda. Customers can also access detailed metrics and logs through CloudWatch to run analytics and generate reports.

Kaltura, a video experience provider that offers a video platform, a video player, and other solutions, uses Amazon CloudFront to serve video content from the edge locations closest to each end user. In addition, the company uses data analytics to develop benchmarks and best practices. It also uses the AWS IoT Services platform to perform software updates on set-top boxes, send push notifications, and schedule jobs on the devices.
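The caching idea behind a CDN edge location can be sketched in a few lines. The example below is a toy model, not CloudFront's implementation: `EdgeCache`, its least-recently-used eviction policy, and the `fetch_from_origin` callback are all hypothetical names chosen for illustration.

```python
from collections import OrderedDict


class EdgeCache:
    """Toy stand-in for one CDN edge location with LRU eviction (an assumed policy)."""

    def __init__(self, fetch_from_origin, capacity=2):
        self._fetch = fetch_from_origin   # called only on a cache miss
        self._capacity = capacity
        self._store = OrderedDict()       # path -> cached body, oldest first
        self.hits = 0
        self.misses = 0

    def get(self, path):
        if path in self._store:
            self._store.move_to_end(path)  # mark as most recently used
            self.hits += 1
            return self._store[path]
        self.misses += 1
        body = self._fetch(path)           # slow trip back to the origin
        self._store[path] = body
        if len(self._store) > self._capacity:
            self._store.popitem(last=False)  # evict the least recently used entry
        return body


if __name__ == "__main__":
    cache = EdgeCache(lambda path: f"content of {path}")
    for path in ("/video/1", "/video/1", "/video/2"):
        cache.get(path)
    print(cache.hits, cache.misses)
```

Every hit is a request served near the user without touching the origin, which is where the latency and bandwidth savings come from.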

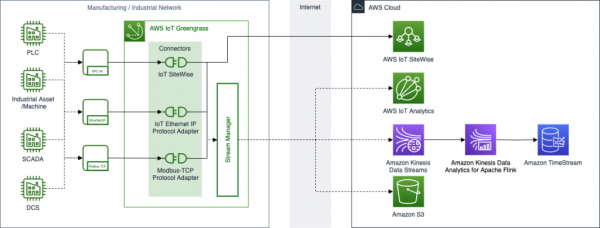

AWS IoT

This platform lets users connect multiple devices to the cloud to control and manage them and to aggregate analytics across them. AWS IoT Greengrass extends AWS services to edge devices so that they can process the data they gather locally using AWS Lambda functions while relying on the cloud for management, storage, and analytics. Processing data locally reduces bandwidth usage and suits latency-sensitive applications. Greengrass also lets devices communicate with each other securely even when they are not connected to the Internet, and it syncs data with the cloud once the connection is restored.
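The offline-then-sync behavior described here is often called store-and-forward. The sketch below shows the general pattern only; it does not use the Greengrass SDK, and `StoreAndForward`, `send`, and `max_buffered` are hypothetical names.

```python
from collections import deque


class StoreAndForward:
    """Buffer messages locally and deliver them in order once the uplink works."""

    def __init__(self, send, max_buffered=1000):
        self._send = send                          # assumed to raise ConnectionError when offline
        self._buffer = deque(maxlen=max_buffered)  # oldest message is dropped when full

    def publish(self, message):
        self._buffer.append(message)
        self.flush()

    def flush(self):
        """Try to send buffered messages in order; stop at the first failure."""
        sent = 0
        while self._buffer:
            try:
                self._send(self._buffer[0])
            except ConnectionError:
                break                              # still offline, keep the rest buffered
            self._buffer.popleft()
            sent += 1
        return sent

    @property
    def pending(self):
        return len(self._buffer)
```

A device would call `publish` for every reading and call `flush` again whenever it detects that connectivity has returned. Dropping the oldest message when the buffer is full is one possible policy; a real deployment might persist the buffer to disk instead.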

Novetta, the company that uses Snowball Edge, also embeds AWS IoT Greengrass into its own sensor suite. This lets the company draw on the strengths of Greengrass: managing sensors locally, enabling messaging, and deploying trained ML models on the sensors. Because AWS Lambda functions are serverless, they can be instantiated whenever inference needs to be run on these models, triggered either by local events or by messages from the cloud, which enables real-time responses.

Additional Services

The services listed above are a small subset of the edge services provided by Amazon Web Services. Some of the ones not covered above are:

- AWS Wavelength: Designed to deliver applications that require ultra-low latency to mobile devices. It places AWS compute inside telecommunication providers' 5G networks, so that applications such as AR/VR, autonomous driving, and live media streaming can use compute located close to the mobile user.

- AWS RoboMaker: This allows developers to build, test, and simulate robotic applications on the cloud to help accelerate robotics development. It integrates with Amazon SageMaker, AWS IoT, and AWS Lambda for advanced ML capabilities, cloud storage, and real-time data processing. You can virtually test robotic applications and deploy them on physical devices at scale. This comes with fleet management capabilities, and analytics using CloudWatch.

The main takeaway from this section is that edge solutions blur the boundary between user devices and the cloud by extending the cloud to edge devices. Developers want the flexibility to work at different layers (cloud, edge, or end device) without the hassle of integrating disparate devices. AWS offers a tightly integrated ecosystem of services that build on one another, which lets developers extend AWS functionality to external end devices and tailor AWS edge services to their industry and use cases.

3.2 Open Source Frameworks

Open source software provides transparent, publicly available code with flexible licensing that allows anyone to use, modify, or build upon it. While quality varies across open source projects, the best ones offer strong security, reliability, efficiency, and ready-to-use tools for developers out of the box. For these reasons, open source is a great option for reducing the costs of spinning up edge computing applications [13].

In edge computing — where data processing happens near where data is created (like IoT devices or local servers) rather than in centralized cloud data centers — open source plays a vital role. The distributed nature of edge computing works well with open source's collaborative development model. Open source frameworks provide standardized yet adaptable platforms that work across different hardware environments, from small microcontrollers to powerful edge servers. This flexibility is crucial in edge computing where available resources vary widely and proprietary solutions might impose unwanted limitations.

Notable frameworks with useful features include EdgeX Foundry, KubeEdge, and Akraino. Open source in edge computing includes both software implementations and standards that operate at different levels. For example, EdgeX focuses on practical software implementation while Akraino defines architectural blueprints for edge infrastructure. This two-pronged approach addresses the needs of diverse edge environments that require both standardized interfaces and flexible implementations.

Open source edge computing frameworks generally fall into two categories:

- Architecture and Standards Frameworks: Define blueprints, specifications, and reference architectures ensuring compatibility and standardization across edge deployments, focusing on the "what" and "why."

- Implementation Frameworks: Provide actual software, tools, and code that developers can deploy directly in edge environments, focusing on the "how."

While specific projects may change or become outdated, the architectural approaches and implementation patterns they represent will likely remain relevant.

General Open Source Advantages

- Transparency, Security, and Reliability: Community oversight helps find and fix security vulnerabilities faster than in closed-source alternatives. However, this transparency can also reveal potential weaknesses, highlighting why good architectural design matters more than "security through obscurity."

- Cost and Time Efficiencies: Developers can focus on writing application code instead of building infrastructure components from scratch. This approach saves considerable time and money by allowing quick configuration of existing components and eliminating licensing fees.

- Community Collaboration: The collaborative nature of open source creates a powerful problem-solving ecosystem where researchers, hobbyists, and companies all contribute to improving the code, often producing innovative solutions that might not emerge in more uniform development environments.

Edge-Specific Benefits

- Interoperability: Edge environments typically contain a mix of different computing resources. Open source projects excel by supporting many device types and allowing developers to add support for new hardware, continuously expanding compatibility.

- Longevity: Open source reduces the risk of being locked into a single vendor's solution. Even if projects are abandoned by their original developers, the code remains available for continued use and potential revival, providing confidence for long-term deployments in edge environments.

- Accelerated Evolution: The open source model's emphasis on contribution helps speed up development in the rapidly changing field of edge computing, allowing practitioners to collectively address new challenges as they emerge.

Drawbacks of Open Source Systems

- Legal and Licensing Complexities: Organizations may struggle to maintain compliance with licensing requirements and risk accidentally publishing proprietary code when integrating with open source components.

- Limited Driver Support: Many edge devices require proprietary drivers that open source projects cannot include due to licensing restrictions, potentially forcing companies toward proprietary solutions despite preferences otherwise.

- Maintenance Variability: Support quality varies dramatically between projects, with some receiving few updates. Unlike commercial products, support isn't guaranteed unless paid services are arranged, and project priorities may not align with organizational needs.

Open source offers significant advantages for edge computing but isn't always the best choice. Decision-makers must carefully evaluate options against their specific use cases, weighing benefits and drawbacks. This requires understanding available options, as open source projects vary widely in design philosophy and quality. For anyone implementing edge computing applications, knowledge of major frameworks and standards is essential.

By carefully evaluating these factors, organizations can select frameworks that balance standardization and implementation support for their specific edge computing needs. There is no one-size-fits-all solution — organizations should thoroughly define their requirements before evaluating frameworks.

This section will focus on three main frameworks: Akraino, KubeEdge, and EdgeX Foundry, but there are many open source projects, including:

- Eclipse ioFog[1]: Offers lightweight edge computing with strong container orchestration for resource-constrained environments. Well-suited for distributed edge deployments where central management must coordinate numerous nodes across different locations.

- Apache NiFi[2]: Though not exclusively for edge computing, it provides powerful tools for routing, transforming, and processing data streams at the edge. Its visual interface enables rapid development of data pipelines by developers with varying expertise levels.

- Microsoft Azure IoT Edge[3]: A hybrid approach with open-source edge runtime components and proprietary cloud management.

- OpenYurt[4]: Extends Kubernetes to edge scenarios with focus on managing nodes with unstable network connections.

- Eclipse Kura[5]: Java-based framework for IoT gateways with access to low-level hardware interfaces.

- Baetyl (OpenEdge)[6]: Offers separate frameworks for cloud and edge components with modular implementation.

- StarlingX[7]: Container-based infrastructure optimized for edge deployments addressing unique requirements like fault management and high availability.

EdgeX Foundry

EdgeX Foundry is a comprehensive implementation framework maintained by the Linux Foundation[8]. Originally developed as Dell's "Project Fuse" in 2015 for IoT gateway computation, it became an open source project in 2017, establishing itself as an industrial-grade edge computing solution comparable to Cloud Foundry. It is considered a powerful industry tool for handling heterogeneous edge computing environments[12].

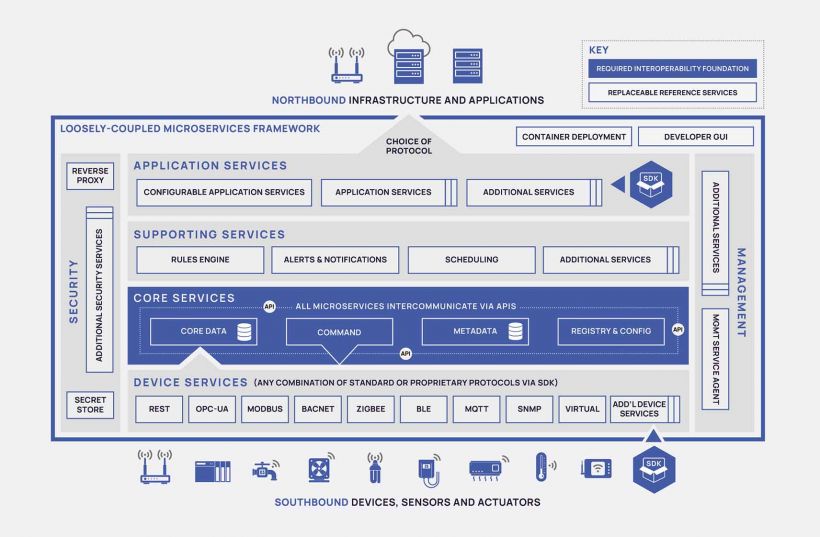

EdgeX uses a microservices architecture with four service layers and two system services, focusing on interoperability, flexibility, functionality, robustness, performance, and security. This design enables operation across diverse hardware while maintaining deployment adaptability.

The four service layers include:

- Core Services: The foundation containing device information, data flows, and configuration. Provides data storage, command capabilities, device metadata, and registry services. Acts as the central nervous system connecting edge devices to IT systems.

- Supporting Services: Handles analytics, scheduling, and data cleanup. Includes rule engines for edge-based actions, operation schedulers, and notification systems, enhancing local data processing.

- Application Services: Manages data extraction, transformation, and transmission to external services like cloud providers. Enables event-driven processing for operations like encoding and compression.

- Device Services: Interfaces with physical devices through their native protocols, converting device-specific data to standardized EdgeX formats. Supports MQTT, BACnet, Modbus, and other protocols.

Two system services complement these layers:

- Security: Implements secure storage for sensitive information and includes a reverse proxy to restrict access to REST resources.

- System Management: Provides centralized control for operations like starting/stopping services, monitoring health, and collecting metrics.

EdgeX has achieved high implementation maturity with regular releases and patches. Its modular design allows component customization, driving adoption across industries, particularly industrial IoT applications requiring device interoperability.

EdgeX addresses resource constraints through flexible deployment models, running as Docker containers with various orchestration methods. The framework includes virtual devices for testing without physical hardware. Its loosely coupled services organized in layers can run on any hardware/OS, supporting both x86 and ARM processors, though this comprehensive architecture requires more resources than lighter frameworks and presents a steeper learning curve for new developers.
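To make the Device Services role concrete, the sketch below normalizes readings from two different protocols into one common record. It is an illustration of the idea only: the `Reading` type, the register encoding (tenths of a degree Celsius), and the `temp_f` JSON field are assumptions, not the actual EdgeX event schema or device SDK.

```python
import json
from dataclasses import dataclass


@dataclass(frozen=True)
class Reading:
    """Hypothetical normalized record; not the actual EdgeX event schema."""
    device: str
    resource: str
    value: float
    unit: str


def from_modbus_register(device: str, raw: int) -> Reading:
    """Decode a signed 16-bit register assumed to hold tenths of a degree Celsius."""
    if not 0 <= raw <= 0xFFFF:
        raise ValueError("register value must fit in 16 bits")
    signed = raw - 0x10000 if raw >= 0x8000 else raw  # two's complement
    return Reading(device, "temperature", signed / 10, "C")


def from_mqtt_json(device: str, payload: str) -> Reading:
    """Decode a JSON message assumed to report temperature in Fahrenheit."""
    fahrenheit = json.loads(payload)["temp_f"]
    celsius = round((fahrenheit - 32) * 5 / 9, 2)
    return Reading(device, "temperature", celsius, "C")


if __name__ == "__main__":
    print(from_modbus_register("plc-1", 215))
    print(from_mqtt_json("sensor-7", '{"temp_f": 70.7}'))
```

Once both sources produce the same record shape, everything above the device layer can stay protocol-agnostic, which is the interoperability benefit the layered design aims for.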

Efficiency is enhanced through intelligent command routing and support for data export to cloud environments via configurable exporters, balancing local processing with cloud integration. This approach enables edge-based decision making without cloud connectivity, though the microservices approach may introduce some delays compared to more tightly integrated solutions.

Developers primarily interact with Application ("north side") and Device ("south side") service layers, using specialized SDKs that handle interconnection details, allowing focus on application code. While this simplifies development, optimal deployment typically requires containerization expertise, and configuration may require significant optimization effort.
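On the north side, an application service is essentially a chain of small functions applied to each event before export. The sketch below mimics that filter, transform, and compress pattern in plain Python; the real EdgeX application SDK is a separate library with its own API, and every function name here is hypothetical.

```python
import gzip
import json


def filter_by_device(allowed):
    """Build a step that passes only events from the allowed device names."""
    def step(event):
        return event if event["device"] in allowed else None
    return step


def to_json(event):
    """Serialize the event to UTF-8 encoded JSON bytes."""
    return json.dumps(event, sort_keys=True).encode("utf-8")


def compress(data):
    """Gzip the serialized bytes before they leave the edge."""
    return gzip.compress(data)


def run_pipeline(event, steps):
    """Pass the event through each step; a step returning None stops the pipeline."""
    result = event
    for step in steps:
        result = step(result)
        if result is None:
            return None
    return result


if __name__ == "__main__":
    steps = [filter_by_device({"thermostat-1"}), to_json, compress]
    exported = run_pipeline({"device": "thermostat-1", "value": 21.5}, steps)
    print(len(exported), "bytes ready to export")
```

Keeping each step independent means a deployment can reorder or swap steps (for example, a different encoder) without touching the device side.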

EdgeX Foundry works well in diverse scenarios including industrial IoT (factory equipment monitoring across protocols), building automation (unified management of systems), retail environments (processing point-of-sale data), energy management (usage monitoring and optimization), smart cities (local processing of urban infrastructure data), and medical device integration (standardizing healthcare device data).

The framework offers significant advantages through its vendor neutrality (community-maintained to avoid lock-in), interoperability (bridging protocols, hardware platforms, and cloud systems), modularity (independent component customization), and deployment flexibility (supporting containers, pods, or binaries). An active community ensures ongoing development and regular releases, while ready-to-use components support customization options. The system maintains operation during network outages with data buffering, though documentation may not cover all integration scenarios.

KubeEdge

KubeEdge is an open-source edge computing platform that extends Kubernetes containerized application orchestration to edge nodes [9]. Originally created by Huawei and donated to the Cloud Native Computing Foundation (CNCF) in 2019, it has gained significant traction among organizations already using Kubernetes. It works well for smart manufacturing, smart city applications, and edge machine learning inference.

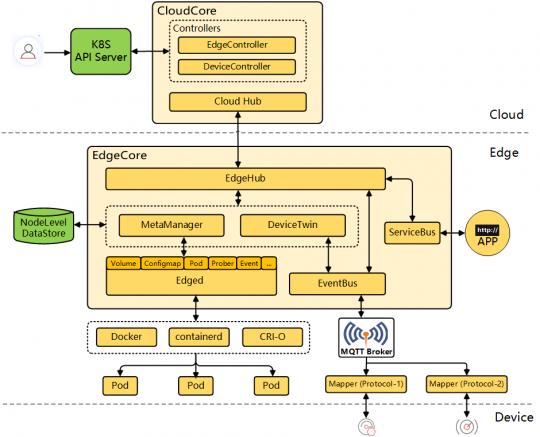

KubeEdge's architecture consists of two primary components:

- Cloud Part: Includes KubeEdge CloudCore and the Kubernetes API server, managing node and pod metadata, making deployment decisions, and publishing them to edge nodes. It provides unified management through standard Kubernetes interfaces.

- Edge Part: Consists of EdgeCore running directly on edge devices, managing containerized applications, synchronizing with the cloud, and operating autonomously during disconnections. It handles computing workloads, network operations, device twin management, and message routing.

KubeEdge maintains compatibility with Kubernetes primitives while introducing edge-specific optimizations, enabling consistent deployment practices across infrastructure. It features a lightweight footprint optimized for edge devices, using significantly fewer resources than standard Kubernetes nodes while preserving functionality. Edge nodes function independently during cloud disconnections through local data storage and processing, resynchronizing when connectivity returns. The framework provides a comprehensive API and controller for managing IoT devices connected to edge nodes, making it valuable for deployments with numerous different devices. Security is implemented through certificate-based authentication between components and TLS encryption for data in transit, protecting distributed edge infrastructure. KubeEdge processes data locally and transmits only relevant information to the cloud, improving response times for time-sensitive operations.

Despite its advantages, KubeEdge comes with several limitations to consider. It requires knowledge of Kubernetes concepts, presenting a learning curve for teams without prior experience with container orchestration. The framework also needs an existing Kubernetes control plane, which adds infrastructure requirements. While more lightweight than standard Kubernetes, KubeEdge demands more resources than some alternative edge frameworks, potentially making it unsuitable for highly constrained devices. Its comprehensive feature set may be overly complex for simple edge deployments where a more streamlined solution would suffice. Additionally, as a relatively young project compared to some alternatives, its ecosystem and tooling continue to evolve, which may impact long-term stability and support options.
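The device twin mentioned earlier pairs the state the cloud wants a device to have with the state the device last reported, so the edge node can reconcile the two even after a disconnection. The sketch below illustrates that general pattern; `DeviceTwin` and `twin_delta` are hypothetical names and do not reflect KubeEdge's actual API or resource definitions.

```python
def twin_delta(desired: dict, reported: dict) -> dict:
    """Return the desired properties the device has not yet reported as applied."""
    return {
        key: value
        for key, value in desired.items()
        if key not in reported or reported[key] != value
    }


class DeviceTwin:
    """Minimal desired-versus-reported state holder for one device."""

    def __init__(self):
        self.desired = {}    # set from the cloud side
        self.reported = {}   # updated from the device side

    def set_desired(self, **props):
        self.desired.update(props)

    def report(self, **props):
        self.reported.update(props)

    def pending(self) -> dict:
        """Changes still to be pushed to the device."""
        return twin_delta(self.desired, self.reported)


if __name__ == "__main__":
    twin = DeviceTwin()
    twin.set_desired(power="on", brightness=70)
    twin.report(power="on", brightness=40)
    print(twin.pending())
```

Because the pending changes are derived from stored state rather than from a message stream, an edge node can recompute them at any time, including right after connectivity returns.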

Open Source Standards

While previous sections focused on software implementation frameworks, open standards maintained by communities like the Linux Foundation are equally important. These standards define system and architectural-level details at a higher level than specific software implementations.

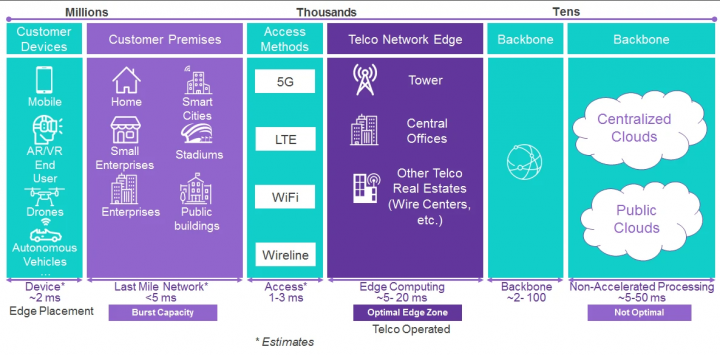

One such standard is Multi-Access Edge Computing (MEC), a network architecture that positions computing resources closer to end users, typically within or adjacent to cellular base stations and access points, primarily to reduce latency through localized processing.
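A rough propagation-delay estimate shows why physical proximity matters. The sketch below assumes signals travel through optical fiber at about 2 x 10^8 m/s (roughly two-thirds of the speed of light in vacuum) and ignores queuing, processing, and radio access delays, so it gives a lower bound rather than a measured latency. The distances are illustrative.

```python
FIBER_SPEED_M_PER_S = 2.0e8  # assumed propagation speed in optical fiber


def round_trip_ms(distance_km: float, speed_m_per_s: float = FIBER_SPEED_M_PER_S) -> float:
    """Lower-bound round-trip propagation delay in milliseconds."""
    one_way_seconds = distance_km * 1_000 / speed_m_per_s
    return 2 * one_way_seconds * 1_000


if __name__ == "__main__":
    for label, km in (("regional data center", 1_500), ("nearby MEC site", 5)):
        print(f"{label} at {km} km: {round_trip_ms(km):.2f} ms round trip")
```

Under these assumptions, a server 1,500 km away costs about 15 ms in propagation alone, while one 5 km away costs about 0.05 ms, leaving almost the entire latency budget for actual processing.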

The European Telecommunications Standards Institute (ETSI) established MEC in 2014 to support emerging edge technology development [11]. Frameworks like KubeEdge and EdgeX Foundry operate within the MEC paradigm but focus on application deployment rather than modifying underlying network architecture -- they leverage existing infrastructure without fundamentally altering it.

Several open source initiatives support MEC implementations:

- Open Network Automation Platform (ONAP): A Linux Foundation platform for orchestrating network functions, including edge computing resources, enabling telecom providers to automate service management.

- O-RAN Alliance: Promotes openness in radio access networks, supporting edge computing through open interfaces and virtualized network elements.

- OpenAirInterface (OAI): Provides standardized implementation of 4G/5G networks, enabling experimental deployments on commodity hardware.

These projects address different aspects of the telecommunications stack, creating flexible edge computing deployments that reduce dependence on proprietary implementations.

Research suggests future 6G networks will require more flexible MEC architectures based on network function disaggregation and dynamic reconfiguration. Open interfaces will be critical for enabling multi-vendor deployments, while standardized APIs will facilitate ecosystem development around MEC platforms.

The advancement of open source MEC solutions promises to accelerate innovation by broadening developer contributions to telecommunications edge computing, potentially reducing costs while increasing flexibility and adoption.

Akraino

Akraino Edge Stack takes a different approach to open source edge computing by focusing on architectural blueprints rather than specific software implementations [10]. Launched in 2018 under the Linux Foundation with AT&T's initial contribution, it quickly attracted industry partners including Nokia, Intel, Arm, and Ericsson.

The core concept in Akraino is the "blueprint" -- a validated configuration of hardware and software components designed for specific edge computing use cases. Blueprints include hardware specifications, software components, and deployment instructions tailored to particular scenarios. This approach represents a middle ground between rigid standardization and custom implementations, providing guidance without mandating vendor-specific solutions.

Akraino's development process progresses from proposal through validation. New blueprints begin as proposals outlining use cases and requirements, advance to development where components are integrated, and undergo extensive testing before release to verify functionality, performance, and security. This rigorous validation ensures blueprints represent production-ready architectural patterns.
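The blueprint lifecycle can be pictured as a small state machine with a validation gate before release. The sketch below is a simplified model of the stages described above; the `Stage` names, the `Blueprint` fields, and the rule that every recorded test must pass are assumptions made for illustration, not Akraino's formal process definitions.

```python
from dataclasses import dataclass, field
from enum import Enum


class Stage(Enum):
    PROPOSAL = 1
    DEVELOPMENT = 2
    VALIDATION = 3
    RELEASED = 4


@dataclass
class Blueprint:
    """Hypothetical, simplified view of a blueprint and its lifecycle."""
    name: str
    use_case: str
    hardware: list
    software: list
    stage: Stage = Stage.PROPOSAL
    test_results: dict = field(default_factory=dict)  # test name -> passed?

    def advance(self) -> Stage:
        """Move to the next stage; release requires that all recorded tests pass."""
        if self.stage is Stage.RELEASED:
            raise ValueError("blueprint is already released")
        if self.stage is Stage.VALIDATION:
            if not self.test_results or not all(self.test_results.values()):
                raise ValueError("cannot release: tests are missing or failing")
        self.stage = Stage(self.stage.value + 1)
        return self.stage
```

For example, a blueprint that reaches `Stage.VALIDATION` stays there until `test_results` records passing functional, performance, and security checks.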

While designed to support any access methodology, Akraino is primarily used in telecommunications (4G LTE and 5G) with placement of edge devices based on specific requirements.

Akraino offers distinct advantages through its architectural focus: validated configurations reduce implementation risk, specific use case targeting balances standardization with flexibility, and rigorous testing ensures production readiness. However, limitations exist compared to implementation frameworks: blueprints require significant work to become functional systems, demand substantial technical expertise, may specify hardware configurations that limit flexibility, and vary in maturity.

Akraino complements implementation frameworks like EdgeX Foundry and KubeEdge in the open source edge computing ecosystem. While these provide deployable software components, Akraino offers architectural guidance for integrating them into cohesive systems. As edge computing matures, Akraino's approach will likely play an increasingly important role in standardizing architectures while enabling innovation.

References

- “Core Concepts: Getting Started: Eclipse Iofog.” Getting Started | Eclipse ioFog, iofog.org/docs/2/getting-started/core-concepts.html. Accessed 5 Apr. 2025.

- Apache NiFi Team. Apache Nifi Overview, nifi.apache.org/nifi-docs/overview.html. Accessed 5 Apr. 2025.

- Dominicbetts. “Introduction to the Azure Internet of Things (IOT) - Azure Iot.” Introduction to the Azure Internet of Things (IoT) - Azure IoT | Microsoft Learn, learn.microsoft.com/en-us/azure/iot/iot-introduction. Accessed 5 Apr. 2025.

- “Introduction: OpenYurt.” OpenYurt RSS, 5 Apr. 2025, openyurt.io/docs/.

- “Welcome to the Eclipse Kura™ Documentation.” Eclipse Kura™ Documentation, eclipse-kura.github.io/kura/docs-release-5.6/. Accessed 5 Apr. 2025.

- LF Edge. “What Is Baetyl?” LF EDGE: Building an Open Source Framework for the Edge., Linux Foundation, 4 June 2020, lfedge.org/what-is-baetyl/.

- “Welcome to the STARLINGX Documentation.” Welcome to the StarlingX Documentation - StarlingX Documentation, docs.starlingx.io/. Accessed 5 Apr. 2025.

- Johanson, Michael. “EdgeX Foundry Overview.” Overview - EdgeX Foundry Documentation, docs.edgexfoundry.org/4.0/. Accessed 5 Apr. 2025.

- “Why Kubeedge: Kubeedge.” KubeEdge RSS, kubeedge.io/docs/. Accessed 5 Apr. 2025.

- “Akraino.” LF EDGE: Building an Open Source Framework for the Edge., Linux Foundation, lfedge.org/projects/akraino/. Accessed 5 Apr. 2025.

- P. Porambage, J. Okwuibe, M. Liyanage, M. Ylianttila and T. Taleb, "Survey on Multi-Access Edge Computing for Internet of Things Realization," in IEEE Communications Surveys & Tutorials, vol. 20, no. 4, pp. 2961-2991, Fourthquarter 2018, doi: 10.1109/COMST.2018.2849509.

- J. John, A. Ghosal, T. Margaria and D. Pesch, "DSLs for Model Driven Development of Secure Interoperable Automation Systems with EdgeX Foundry," 2021 Forum on specification & Design Languages (FDL), Antibes, France, 2021, pp. 1-8, doi: 10.1109/FDL53530.2021.9568378.

- V. Villali, S. Bijivemula, S. L. Narayanan, T. Mohana Venkata Prathusha, M. S. Krishna Sri and A. Khan, "Open-source Solutions for Edge Computing," 2021 2nd International Conference on Smart Electronics and Communication (ICOSEC), Trichy, India, 2021, pp. 1185-1193, doi: 10.1109/ICOSEC51865.2021.9591859.

3.3 Serverless at the Edge

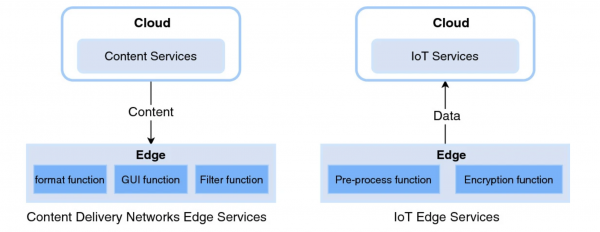

Edge computing processes data closer to where it is created, which reduces delays, lowers costs, and improves privacy. Serverless computing is also gaining popularity because it lets developers run small pieces of code without managing servers, which reduces complexity. Combining the two is powerful, especially for smart devices, real-time applications, and AI. This section looks at how researchers and companies are using serverless technology at the edge. Some efforts focus on helping developers work faster, others on improving performance, and others on making devices smarter. We’ll look at three main types: a research platform, content delivery networks, and Internet of Things (IoT) platforms.

IBM Research – Deviceless Serverless Platform for Edge AI

This research prototype proposes a serverless edge AI platform that runs AI workflows across cloud and edge resources. It introduces a deviceless approach in which edge nodes are abstracted as cluster resources, removing the need for developers to manage specific devices manually. The serverless programming model includes datasets, ML models, runtime triggers, and latency deadlines.

One example application is a personal health assistant that collects sensitive biosensor data. A base model is first trained in the cloud and then refined on the device using transfer learning, which preserves privacy and keeps responses prompt. Another example is field technicians equipped with AI-enhanced mobile tools that operate offline and sync with the cloud as needed.
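The deviceless idea, in which the developer states requirements and the platform picks a node, can be sketched as a small placement function. This is not the IBM prototype's scheduler: `Node`, `FunctionSpec`, the field names, and the tie-breaking rule (prefer the node with the most free CPU) are all assumptions chosen to illustrate how a latency deadline and a data-privacy constraint might drive placement.

```python
from dataclasses import dataclass
from typing import Optional, Sequence


@dataclass(frozen=True)
class Node:
    name: str
    tier: str               # "edge" or "cloud"
    est_latency_ms: float   # estimated response latency from this node
    free_cpu: float         # spare CPU cores


@dataclass(frozen=True)
class FunctionSpec:
    name: str
    model: str
    trigger: str
    deadline_ms: float
    cpu: float
    data_must_stay_on_edge: bool = False


def place(spec: FunctionSpec, nodes: Sequence[Node]) -> Optional[Node]:
    """Pick a node meeting the deadline, CPU, and privacy constraints, or None."""
    feasible = [
        node for node in nodes
        if node.est_latency_ms <= spec.deadline_ms
        and node.free_cpu >= spec.cpu
        and (node.tier == "edge" or not spec.data_must_stay_on_edge)
    ]
    if not feasible:
        return None
    return max(feasible, key=lambda node: node.free_cpu)  # assumed tie-break rule
```

The developer only describes the function (model, trigger, deadline); no device name appears in the specification, which is the point of the deviceless abstraction.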

CDN - Content Delivery Platforms

Content delivery network providers have introduced various serverless edge models that enable dynamic content and reduced latency. These platforms serve industries such as media, e-commerce, and data analytics.

- Cloudflare lets you run small pieces of code on its servers around the world. These are well suited to simple tasks like modifying web pages or handling requests quickly.

- Amazon lets you run code at its edge locations using Lambda@Edge. It works with CloudFront and supports the Node.js and Python runtimes. It’s useful for custom features that need to run close to users.

- IBM lets you run code close to users using a tool called Edge Functions, which runs lightweight programs to speed up how websites and apps respond to users.
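As a small illustration of the kind of task these edge functions handle, the sketch below is a Python handler in the style of a Lambda@Edge function attached to a CloudFront response event. It adds one security header to the response before it is returned to the viewer. The header value is an illustrative choice, and the sample event in the usage section is a trimmed-down, hypothetical example rather than a complete CloudFront event.

```python
def handler(event, context):
    """Add a Strict-Transport-Security header to a CloudFront response."""
    response = event["Records"][0]["cf"]["response"]
    headers = response["headers"]
    # CloudFront keys headers by lowercase name; each value is a list of
    # {"key": ..., "value": ...} entries.
    headers["strict-transport-security"] = [
        {
            "key": "Strict-Transport-Security",
            "value": "max-age=63072000; includeSubDomains",  # illustrative value
        }
    ]
    return response


if __name__ == "__main__":
    # Hypothetical, minimal event used only to exercise the handler locally.
    sample = {"Records": [{"cf": {"response": {"status": "200", "headers": {}}}}]}
    print(handler(sample, None))
```

Because the function runs at the edge location that serves the request, the header is added without a round trip to the origin.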

IoT Platforms

IoT platforms are made for devices like sensors, cameras, and other smart tools. They help manage devices, run code locally, and handle real-time data.

- AWS IoT Greengrass lets developers run the same functions they use in the cloud on local devices. It works even without network access and supports many types of hardware.

- Azure IoT Edge is Microsoft's major contender among serverless edge platforms. Its edge runtime is open source and supports many operating systems and devices. It lets developers run containerized functions on their own equipment and connect them with Microsoft’s cloud tools.

- FogFlow decides where to run serverless functions based on data, location, and system rules. It helps smart services move to and run on the best device for the specific situation.

Serverless edge computing is still new, but it is growing fast. Most commercial platforms are tied to their cloud providers, which can make switching hard. Open-source tools offer more freedom but need more setup. Tools for managing many edge devices and complex rules are also still being developed. Despite these challenges, serverless edge platforms give developers more ways to build fast, smart, and flexible applications. As the tools improve, it will become easier to create powerful systems that run wherever they’re needed.