Federated Learning


Revision as of 21:15, 2 April 2025

Overview and Motivation

Federated Learning (FL) is a decentralized machine learning paradigm that enables multiple edge devices, referred to as clients, to collaboratively train a shared model without transferring their private data to a central location. Each client trains locally on its own dataset and communicates only model updates (such as gradients or weights) to an orchestrating server or aggregator. These updates are aggregated into a new global model, which is redistributed to the clients for further training. The process repeats iteratively, allowing the model to learn from distributed data sources while preserving the privacy and autonomy of each client. By design, FL shifts the focus from centralized data collection to collaborative model development, opening a new direction in scalable, privacy-preserving machine learning [1].

The motivation for Federated Learning arises from growing concerns around data privacy, security, and communication efficiency. These concerns are sharpest in edge computing environments, where massive volumes of data are generated across geographically distributed and often resource-constrained devices. Centralized learning architectures struggle in such contexts because of limited bandwidth, high transmission costs, and strict regulatory frameworks such as the General Data Protection Regulation (GDPR) and the Health Insurance Portability and Accountability Act (HIPAA). FL mitigates these issues by keeping raw data on-device, which reduces the risk of data exposure and the reliance on constant connectivity to cloud services. Furthermore, because clients exchange model updates rather than full datasets, FL can substantially reduce communication overhead. This makes it well suited to real-time learning in mobile and edge networks [2].

Within the broader ecosystem of edge computing, FL represents a paradigm shift that enables distributed intelligence under conditions of partial availability, device heterogeneity, and non-identically distributed (non-IID) data. Clients in FL systems can participate asynchronously, tolerate network interruptions, and adapt their computational loads based on local capabilities. This flexibility is particularly important in edge scenarios where devices may differ in processor power, battery life, and storage. Moreover, FL supports the development of personalized and locally adapted models through techniques such as federated personalization and clustered aggregation. These properties make FL not only an effective solution for collaborative learning at the edge but also a foundational approach for building scalable, secure, and trustworthy AI systems that are aligned with emerging demands in distributed computing and privacy-preserving technologies [1][2][3].

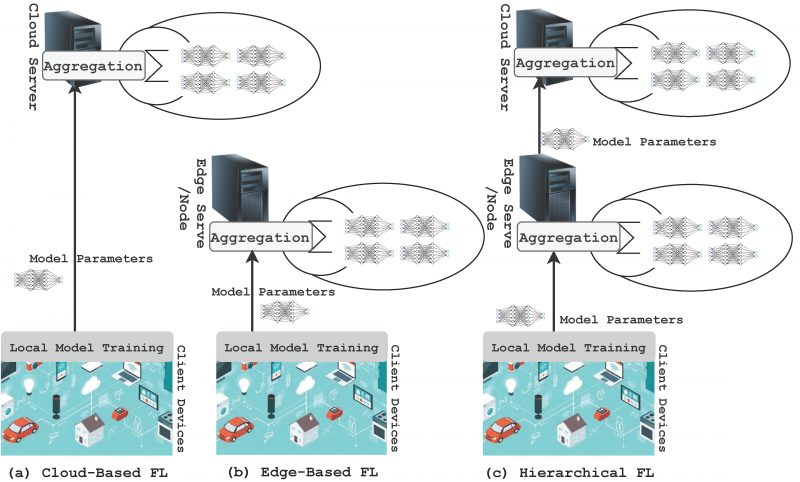

Federated Learning Architectures

Federated Learning (FL) can be implemented through various architectural configurations, each defining how clients interact, how updates are aggregated, and how trust and responsibility are distributed. These architectures play a central role in determining the scalability, fault tolerance, communication overhead, and privacy guarantees of a federated system. In edge computing environments, where client devices are heterogeneous and network reliability varies, the choice of architecture significantly affects the efficiency and robustness of learning. The three dominant paradigms are centralized, decentralized, and hierarchical architectures. Each of these approaches balances different trade-offs in terms of coordination complexity, system resilience, and resource allocation.

Centralized Architecture

In the centralized FL architecture, a central server or cloud orchestrator is responsible for all coordination, aggregation, and distribution activities. The server begins each round by broadcasting a global model to a selected subset of client devices, which then perform local training on their private data. After completing local updates, clients send their modified model parameters, usually in the form of weight vectors or gradients, back to the server. The server aggregates these updates, typically using an algorithm such as Federated Averaging (FedAvg), and sends the updated global model to the clients for the next round of training.
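One centralized round can be sketched in Python under simplifying assumptions. The model is a plain list of floats. Each hypothetical client is a function that takes the broadcast model and returns its locally trained copy. The server samples a fixed fraction of clients and aggregates with a dataset-size-weighted average (FedAvg-style). The names `run_round` and `make_client` and the toy quadratic local loss are illustrative only and are not part of any FL framework.

```python
import random
from typing import Callable, List, Sequence

Vector = List[float]
Client = Callable[[Vector], Vector]  # takes the global model, returns a locally trained copy

def make_client(target: Vector, lr: float = 0.5, steps: int = 5) -> Client:
    """Hypothetical client whose local loss is 0.5 * ||w - target||^2."""
    def train(w: Vector) -> Vector:
        for _ in range(steps):  # several local gradient steps before reporting back
            w = [wj - lr * (wj - tj) for wj, tj in zip(w, target)]
        return w
    return train

def run_round(global_model: Vector, clients: Sequence[Client], sizes: Sequence[int],
              fraction: float, rng: random.Random) -> Vector:
    # 1. The server samples a subset of clients for this round (at least one).
    k = max(1, int(fraction * len(clients)))
    selected = rng.sample(range(len(clients)), k)
    # 2-3. Broadcast a copy of the global model; each selected client trains locally.
    results = [(clients[i](list(global_model)), sizes[i]) for i in selected]
    # 4. Aggregate: average the returned models, weighted by local dataset size.
    total = sum(n for _, n in results)
    return [sum(w[j] * n for w, n in results) / total for j in range(len(global_model))]

rng = random.Random(0)
clients = [make_client([1.0, 0.0]), make_client([3.0, 2.0]), make_client([0.0, 4.0])]
sizes = [100, 300, 600]
model = [0.0, 0.0]
for _ in range(20):
    model = run_round(model, clients, sizes, fraction=1.0, rng=rng)
# model is now very close to [1.0, 3.0], the size-weighted mean of the client targets
```

With `fraction=1.0` every client participates in every round, so the global model settles at the dataset-weighted mean of the client optima. Lower fractions trade per-round accuracy for less traffic.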

The centralized model is appealing for its simplicity and compatibility with existing cloud-to-client infrastructures. It is relatively easy to deploy, manage, and scale in environments with stable connectivity and limited client churn. However, its reliance on a single server introduces critical vulnerabilities: the server becomes a bottleneck under high communication loads and a single point of failure if it experiences downtime or compromise. Furthermore, this architecture requires clients to trust the central aggregator with metadata, model parameters, and access scheduling. In privacy-sensitive or high-availability contexts, these limitations can restrict the applicability of centralized FL [1].

Decentralized Architecture

Decentralized FL removes the need for a central server altogether. Instead, client devices interact directly with each other to share and aggregate model updates. These peer-to-peer (P2P) networks may operate using structured overlays, such as ring topologies or blockchain systems, or employ gossip-based protocols for stochastic update dissemination. In some implementations, clients collaboratively compute weighted averages or perform federated consensus to update the global model in a distributed fashion.
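Gossip-based averaging can be illustrated with a short Python sketch. It assumes a ring of nodes, each holding a local model (a list of floats). In every synchronous step, each node replaces its model with the unweighted mean of its own model and its two neighbors' models. Weighting by dataset size, asynchrony, and secure messaging are left out, and `gossip_ring` is a hypothetical helper.

```python
from typing import List

def gossip_ring(models: List[List[float]], rounds: int) -> List[List[float]]:
    """Synchronous gossip: each node averages its model with its two ring neighbors."""
    n = len(models)
    for _ in range(rounds):
        # Build the new models from the previous step's values only.
        models = [
            [(models[(i - 1) % n][j] + models[i][j] + models[(i + 1) % n][j]) / 3
             for j in range(len(models[i]))]
            for i in range(n)
        ]
    return models

# Five peers with scalar "models"; their average is 20.0.
nodes = gossip_ring([[0.0], [10.0], [20.0], [30.0], [40.0]], rounds=100)
# Every node ends up close to 20.0 without any central server.
```

Every node mixes with equal weights of 1/3, so the system-wide average is preserved at each step. On a connected ring, all nodes therefore drift toward that common value without a coordinator. Convergence slows as the ring grows, which is one source of the communication overhead that decentralized designs pay.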

This architecture significantly enhances system robustness, resilience, and trust decentralization. There is no single point of failure, and the absence of a central coordinator eliminates the risk of aggregator bias or compromise. Moreover, decentralized FL supports collaboration in contexts where participants belong to different organizations or jurisdictions and cannot rely on a neutral third party. However, these benefits come at the cost of increased communication overhead, complex synchronization requirements, and difficulty in managing convergence, especially under non-identical data distributions and asynchronous updates. Protocols for secure communication, update verification, and identity authentication are needed to prevent malicious behavior and ensure model integrity. Because of these complexities, decentralized FL remains an active area of research and is best suited to scenarios requiring strong autonomy and fault tolerance [2].

Hierarchical Architecture

Hierarchical FL is a hybrid architecture that introduces one or more intermediary layers, often called edge servers or edge aggregators, between clients and the global coordinator. In this model, clients are organized into logical or geographical groups, each connected to an edge server. Clients send their local model updates to their respective edge aggregator, which performs a preliminary aggregation. The edge servers then send their aggregated results to the cloud server, where the final aggregation produces the updated global model.

This multi-tiered architecture is designed to address the scalability and efficiency challenges inherent in centralized systems while avoiding the coordination overhead of full decentralization. Hierarchical FL is especially well-suited for edge computing environments where data, clients, and compute resources are distributed across structured clusters, such as hospitals within a healthcare network or base stations in a telecommunications infrastructure.

One of the key advantages of hierarchical FL is communication optimization. By aggregating locally at edge nodes, the amount of data transmitted over wide-area networks is significantly reduced. Additionally, this model supports region-specific model personalization by allowing edge servers to maintain specialized sub-models adapted to local client behavior. Hierarchical FL also enables asynchronous and fault-tolerant training by isolating disruptions within specific clusters. However, this architecture still depends on reliable edge aggregators and introduces new challenges in cross-layer consistency, scheduling, and privacy preservation across multiple tiers [1][3].
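The two-tier aggregation described above can be sketched in Python. The sketch assumes each client reports its model (a list of floats) together with its sample count. Each edge aggregator then forwards its averaged model plus the total sample count of its group. The group names and helper functions are hypothetical.

```python
from typing import Dict, List, Tuple

Model = List[float]
Report = Tuple[Model, int]  # (model parameters, number of training samples)

def weighted_average(reports: List[Report]) -> Report:
    """Sample-weighted average of models; also returns the combined sample count."""
    total = sum(n for _, n in reports)
    dim = len(reports[0][0])
    return [sum(m[j] * n for m, n in reports) / total for j in range(dim)], total

def hierarchical_aggregate(groups: Dict[str, List[Report]]) -> Report:
    # Tier 1: each edge aggregator combines the reports of its own clients.
    edge_reports = [weighted_average(reports) for reports in groups.values()]
    # Tier 2: the cloud combines one report per edge aggregator.
    return weighted_average(edge_reports)

groups = {
    "hospital_a": [([1.0], 10), ([3.0], 30)],
    "hospital_b": [([5.0], 60)],
}
global_model, n_total = hierarchical_aggregate(groups)
flat_model, _ = weighted_average([r for reports in groups.values() for r in reports])
wan_uploads_hierarchical = len(groups)                   # 2 edge-to-cloud uploads
wan_uploads_flat = sum(len(r) for r in groups.values())  # 3 client-to-cloud uploads
```

Forwarding sample counts lets the cloud reproduce exactly the weighted average it would have computed over all clients directly (4.0 in this toy case). Meanwhile, only one upload per edge server crosses the wide-area network instead of one per client.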

Aggregation Algorithms and Communication Efficiency

Aggregation is a fundamental operation in Federated Learning (FL), in which updates from multiple edge clients are merged to form a new global model. The quality, stability, and efficiency of the federated learning process depend heavily on the aggregation strategy employed. Edge environments are characterized by device heterogeneity and non-identical data distributions, so choosing the right aggregation algorithm is essential for reliable convergence and effective collaboration.

Key Aggregation Algorithms

Federated Averaging (FedAvg): FedAvg is the foundational aggregation method introduced in the early development of FL. In this approach, each client performs several local training epochs and sends its updated model parameters to the server. The server then computes a weighted average of all received models, where the weight of each client’s contribution is proportional to the size of its local dataset. Because clients take many local steps between synchronizations, FedAvg needs fewer communication rounds than exchanging gradients after every step. However, it can struggle under data heterogeneity and uneven client participation [1][2].
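The FedAvg aggregation step can be written as a weighted sum. The notation here is assumed rather than taken from the text: $S_t$ is the set of clients participating in round $t$, $n_k$ is the number of local training samples on client $k$, and $w_k^{t+1}$ is the model that client $k$ returns after its local epochs. Normalizing over the participating clients only, as below, is one common variant.

```latex
w^{t+1} = \sum_{k \in S_t} \frac{n_k}{n}\, w_k^{t+1},
\qquad n = \sum_{k \in S_t} n_k
```

Consider two clients with $n_1 = 100$ and $n_2 = 300$ that return scalar parameters $w_1^{t+1} = 0.2$ and $w_2^{t+1} = 0.6$. The new global value is $0.25 \cdot 0.2 + 0.75 \cdot 0.6 = 0.5$, so the client holding three quarters of the data dominates the result.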

Federated Proximal (FedProx): FedProx extends FedAvg by adding a proximal term to each client’s local training objective. This term, scaled by a coefficient μ, penalizes the squared distance between the client’s model and the current global model, which discourages large deviations. As a result, clients are guided to remain closer to the shared model, which improves stability when data is non-IID or when clients have limited resources. FedProx is particularly useful when some devices have noisy, sparse, or highly skewed datasets, or cannot consistently complete full local training rounds [2].
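A FedProx-style local update can be sketched in Python, assuming plain gradient descent and a caller-supplied gradient of the client's own loss F_k. The proximal term (mu / 2) * ||w - w_global||^2 contributes mu * (w - w_global) to the gradient. The function `fedprox_local_train` and the one-dimensional toy loss are hypothetical.

```python
from typing import Callable, List

Vector = List[float]

def fedprox_local_train(global_w: Vector, grad_fn: Callable[[Vector], Vector],
                        mu: float, lr: float = 0.1, steps: int = 500) -> Vector:
    """Gradient descent on F_k(w) + (mu / 2) * ||w - global_w||^2."""
    w = list(global_w)  # start local training from the current global model
    for _ in range(steps):
        g = grad_fn(w)  # gradient of the client's own loss F_k
        # The proximal term adds mu * (w - global_w), pulling w back toward global_w.
        w = [wj - lr * (gj + mu * (wj - gw)) for wj, gj, gw in zip(w, g, global_w)]
    return w

def toy_grad(w: Vector) -> Vector:
    """Gradient of a hypothetical client loss F_k(w) = 0.5 * (w - 10)^2."""
    return [w[0] - 10.0]

w_plain = fedprox_local_train([0.0], toy_grad, mu=0.0)  # no proximal term
w_prox = fedprox_local_train([0.0], toy_grad, mu=1.0)   # proximal term active
```

With `mu=0` the client drifts all the way to its own optimum (10.0). With `mu=1` it stops halfway (5.0), at the minimizer of 0.5 * (w - 10)^2 + 0.5 * w^2. This damping is what keeps heterogeneous clients closer to the shared model.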

Federated Optimization (FedOpt): FedOpt is a broader family of algorithms that generalize aggregation by applying adaptive optimization at the server side. The server treats the averaged difference between the client models and the current global model as a pseudo-gradient and feeds it to an optimizer such as Adam, Yogi, or Adagrad, giving the variants FedAdam, FedYogi, and FedAdagrad. These optimizers use momentum, adaptive learning rates, and gradient history to speed up convergence. FedOpt methods help mitigate the slow or unstable convergence often seen in FL with diverse clients, enabling faster and more consistent model improvements [3].
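The FedOpt idea can be sketched in Python with a FedAdam-style server. The sketch assumes an unweighted mean of the client models and a simple Adam-like rule: a momentum estimate m, a second-moment estimate v, a server learning rate, and a small constant tau, with no bias correction. The class name and hyperparameter defaults are illustrative assumptions, not tuned values.

```python
import math
from dataclasses import dataclass, field
from typing import List

Vector = List[float]

@dataclass
class FedAdamServer:
    """Server-side Adam-style step on the averaged client update (pseudo-gradient)."""
    weights: Vector
    lr: float = 0.1       # server learning rate
    beta1: float = 0.9    # momentum decay
    beta2: float = 0.99   # second-moment decay
    tau: float = 1e-3     # keeps the denominator away from zero
    m: Vector = field(default_factory=list, init=False)
    v: Vector = field(default_factory=list, init=False)

    def __post_init__(self) -> None:
        self.weights = list(self.weights)
        self.m = [0.0] * len(self.weights)
        self.v = [0.0] * len(self.weights)

    def step(self, client_models: List[Vector]) -> Vector:
        k = len(client_models)
        for j, wj in enumerate(self.weights):
            # Pseudo-gradient: mean client model minus the current global model.
            d = sum(c[j] for c in client_models) / k - wj
            self.m[j] = self.beta1 * self.m[j] + (1 - self.beta1) * d
            self.v[j] = self.beta2 * self.v[j] + (1 - self.beta2) * d * d
            # Step along the pseudo-gradient, scaled per coordinate by sqrt(v).
            self.weights[j] = wj + self.lr * self.m[j] / (math.sqrt(self.v[j]) + self.tau)
        return self.weights

server = FedAdamServer(weights=[0.0])
new_global = server.step([[1.0], [3.0]])  # clients moved toward 2.0 on average
```

Only the `self.v` update changes between variants. Accumulating `d * d` without decay gives a FedAdagrad-style server, and a sign-controlled update gives a FedYogi-style one. The client side is unchanged in every case.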

Communication Efficiency in Edge-Based FL

Communication remains one of the most critical bottlenecks in deploying FL at the edge, where devices often suffer from limited bandwidth, intermittent connectivity, and energy constraints. Several strategies have been developed to address this. **Gradient quantization** reduces the size of transmitted updates by lowering numerical precision (e.g., from 32-bit floating-point to 8-bit values), shrinking each update roughly fourfold. **Gradient sparsification** transmits only the most significant changes in the model, typically the top-k entries by magnitude, and discards negligible ones. **Local update batching** lets devices perform multiple local training steps or epochs before sending an update, reducing the frequency of synchronization.
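Quantization and top-k sparsification can both be sketched in a few lines of Python. The sketch assumes a simple min-max (uniform) 8-bit scheme with one offset and one scale per update, and a sparsified update sent as (index, value) pairs. Refinements that deployed systems often add, such as error feedback, are omitted. All function names are hypothetical.

```python
from typing import List, Tuple

def quantize_8bit(update: List[float]) -> Tuple[bytes, float, float]:
    """Min-max quantization: map each float to an integer code in [0, 255]."""
    lo, hi = min(update), max(update)
    scale = (hi - lo) / 255 or 1.0  # guard against a constant update (hi == lo)
    codes = [min(255, max(0, round((x - lo) / scale))) for x in update]
    return bytes(codes), lo, scale  # one byte per entry instead of four for float32

def dequantize_8bit(payload: bytes, lo: float, scale: float) -> List[float]:
    """Server-side reconstruction; each entry is off by at most about scale / 2."""
    return [lo + c * scale for c in payload]

def top_k_sparsify(update: List[float], k: int) -> List[Tuple[int, float]]:
    """Keep the k largest-magnitude entries as (index, value) pairs; drop the rest."""
    ranked = sorted(range(len(update)), key=lambda i: abs(update[i]), reverse=True)
    return sorted((i, update[i]) for i in ranked[:k])

update = [0.12, -0.5, 0.03, 0.9, -0.01, 0.0]
payload, lo, scale = quantize_8bit(update)
restored = dequantize_8bit(payload, lo, scale)
sparse = top_k_sparsify(update, k=2)  # [(1, -0.5), (3, 0.9)]
```

The two techniques compose. A client could sparsify first and then quantize only the surviving values, at the cost of extra reconstruction error on the server.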

Further, **client selection strategies** dynamically choose a subset of devices to participate in each round, based on criteria like availability, data quality, hardware capacity, or trust level. These communication optimizations are crucial for ensuring that FL remains scalable, efficient, and deployable across millions of edge nodes without overloading the network or draining device batteries [1][2][3].
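One simple client selection policy first filters out unavailable or low-battery devices and then ranks the rest by a score. The Python sketch below assumes hypothetical per-client fields (battery level, a compute score, and a trust score, all normalized to [0, 1]) and an equal-weight score. It shows one illustrative policy, not a standard algorithm.

```python
from dataclasses import dataclass
from typing import List

@dataclass
class ClientInfo:
    client_id: str
    available: bool       # currently reachable and idle
    battery: float        # remaining battery, 0..1 (hypothetical field)
    compute_score: float  # relative hardware capacity, 0..1 (hypothetical field)
    trust: float          # reputation or trust score, 0..1 (hypothetical field)

def select_clients(clients: List[ClientInfo], m: int, min_battery: float = 0.3) -> List[str]:
    """Return up to m client IDs for the next round."""
    # Hard constraints first: skip offline devices and those low on battery.
    eligible = [c for c in clients if c.available and c.battery >= min_battery]
    # Then rank by an equal-weight score of capacity and trust (illustrative weights).
    eligible.sort(key=lambda c: c.compute_score + c.trust, reverse=True)
    return [c.client_id for c in eligible[:m]]

pool = [
    ClientInfo("a", True, 0.9, 0.8, 0.9),
    ClientInfo("b", False, 1.0, 1.0, 1.0),
    ClientInfo("c", True, 0.1, 0.9, 0.9),
    ClientInfo("d", True, 0.6, 0.4, 0.5),
    ClientInfo("e", True, 0.8, 0.7, 0.6),
]
chosen = select_clients(pool, m=2)  # ["a", "e"]
```

Client `b` is skipped because it is offline, and `c` because its battery is below the threshold. Among the remaining devices, the two highest-scoring ones are chosen. A real deployment would likely tune these weights and add randomness so that low-scoring clients still occasionally contribute their data.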