Federated Learning: Difference between revisions

Idvsrevanth (talk | contribs) |

Ram229kumar (talk | contribs) No edit summary |


= 5.1 Overview and Motivation =

'''Federated Learning (FL)''' is a decentralized approach to machine learning in which many edge devices, called clients, collaboratively train a shared model ''without'' sending their private data to a central server. Each client trains the model locally on its own data and sends only the resulting model updates (such as gradients or weights) to a central server, often called an '''aggregator'''. The server combines these updates into a new global model, which is then sent back to the clients for the next round of training. This cycle repeats, allowing the model to improve while the raw data stays on the devices. FL thus shifts the focus from central data collection to collaborative training, supporting privacy and scalability in machine learning systems.<sup>[1]</sup>

The main motivation for FL is to address concerns about data privacy, security, and communication efficiency, especially in edge computing, where huge amounts of data are generated across many heterogeneous and often resource-limited devices. Centralized learning struggles in this setting because of limited bandwidth, high data transfer costs, and strict privacy regulations such as the '''General Data Protection Regulation (GDPR)''' and the '''Health Insurance Portability and Accountability Act (HIPAA)'''. FL mitigates these problems by keeping data on the device, which reduces the risk of leaks and the need for constant cloud connectivity. It can also lower communication costs by exchanging model updates instead of full datasets, which makes it well suited to real-time learning in mobile and edge networks.<sup>[2]</sup>

In edge computing, FL represents a major shift in how machine learning systems are built. It supports distributed intelligence even when devices have limited resources, unreliable connections, or statistically heterogeneous data (data that is not independent and identically distributed, or '''non-IID'''). Clients can join training sessions at different times, tolerate network delays, and adapt their workload to their hardware limitations. This makes FL a flexible option for edge environments with varying battery levels, processing power, and storage. FL can also support personalized models through techniques such as '''federated personalization''' or '''clustered aggregation'''. Overall, it provides a strong foundation for building AI systems that are scalable, privacy-preserving, and suited to the challenges of modern distributed computing.<sup>[1]</sup><sup>[2]</sup><sup>[3]</sup>

= 5.2 Federated Learning Architectures =

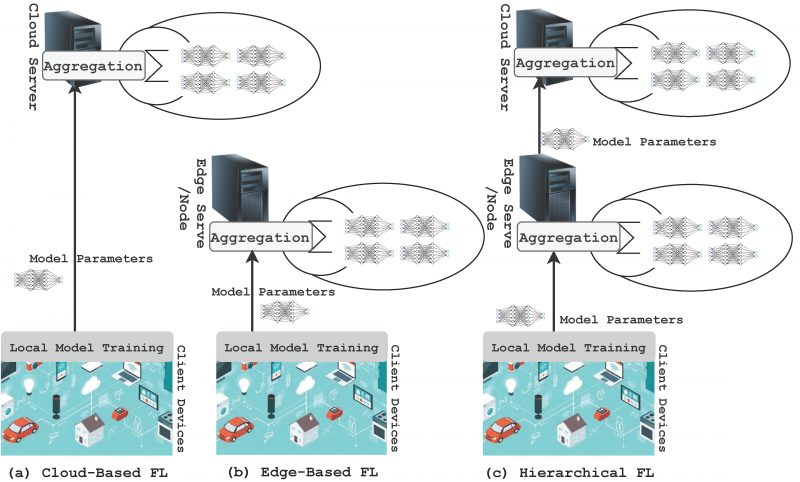

Federated Learning (FL) can be implemented through various architectural configurations, each defining how clients interact, how updates are aggregated, and how trust and responsibility are distributed. These architectures play a central role in determining the scalability, fault tolerance, communication overhead, and privacy guarantees of a federated system. In edge computing environments, where client devices are heterogeneous and network reliability varies, the choice of architecture significantly affects the efficiency and robustness of learning. The three dominant paradigms are centralized, decentralized, and hierarchical architectures. Each of these approaches balances different trade-offs in terms of coordination complexity, system resilience, and resource allocation.

[[File:Architecture.png|thumb|center|800px|Visual comparison of Cloud-Based, Edge-Based, and Hierarchical Federated Learning architectures. Source: <sup>[1]</sup>]]

== 5.2.1 Centralized Architecture ==

In the centralized FL architecture, a central server or cloud orchestrator is responsible for all coordination, aggregation, and distribution. The server begins each round by broadcasting the global model to a selected subset of client devices, which then train locally on their private data. After completing their local updates, clients send the modified model parameters (usually weight vectors or gradients) back to the server. The server aggregates them, typically with an algorithm such as Federated Averaging (FedAvg), and sends the updated global model to the clients for the next round of training.

The centralized model is appealing for its simplicity and compatibility with existing cloud-to-client infrastructure. It is relatively easy to deploy, manage, and scale in environments with stable connectivity and limited client churn. However, its reliance on a single server introduces critical vulnerabilities: the server becomes a bottleneck under high communication loads and a single point of failure if it experiences downtime or compromise. Furthermore, this architecture requires clients to trust the central aggregator with metadata, model parameters, and access scheduling. In privacy-sensitive or high-availability contexts, these limitations can restrict the applicability of centralized FL.<sup>[1]</sup>
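
The centralized round described above can be sketched as follows. This is a minimal, hypothetical example rather than a reference implementation: it assumes a toy one-parameter linear model, synthetic noiseless client data, and invented helper names (<code>local_train</code>, <code>run_round</code>, <code>make_client</code>). The server samples clients, each client runs a few epochs of gradient descent starting from the broadcast weight, and the server takes a dataset-size-weighted average in the style of FedAvg.

```python
import random

# Hypothetical toy task: every client holds (x, y) pairs with y = 3 * x, and the
# "model" is a single weight w for the predictor y_hat = w * x.

def local_train(w, data, lr=0.05, epochs=5):
    """Client side: a few epochs of gradient descent on squared error."""
    for _ in range(epochs):
        for x, y in data:
            grad = 2 * (w * x - y) * x  # derivative of (w*x - y)^2 with respect to w
            w -= lr * grad
    return w

def run_round(global_w, clients, num_selected, rng):
    """Server side: select clients, broadcast global_w, collect and aggregate results."""
    selected = rng.sample(clients, num_selected)
    results = [(local_train(global_w, data), len(data)) for data in selected]
    total = sum(n for _, n in results)
    # Weighted average of the returned weights, weighted by local dataset size
    return sum(w * n for w, n in results) / total

def make_client(rng, true_w=3.0):
    """Create a hypothetical client dataset of 5 to 20 noiseless samples."""
    xs = [rng.uniform(-1, 1) for _ in range(rng.randint(5, 20))]
    return [(x, true_w * x) for x in xs]

rng = random.Random(0)
clients = [make_client(rng) for _ in range(10)]

w = 0.0
for _ in range(20):
    w = run_round(w, clients, num_selected=4, rng=rng)
print(f"global weight after 20 rounds: {w:.4f}")  # approaches the true value 3.0
```

Real deployments exchange full parameter vectors and must handle clients that drop out mid-round; both are omitted here.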

== 5.2.2 Decentralized Architecture ==

Decentralized FL removes the central server altogether. Instead, client devices exchange and aggregate model updates directly with one another. These peer-to-peer (P2P) networks may use structured overlays, such as ring topologies or blockchain systems, or gossip-based protocols that disseminate updates stochastically. In some implementations, clients collaboratively compute weighted averages or run a consensus protocol so that their local copies of the model converge toward a shared global model without any single coordinator.

This architecture significantly improves robustness and resilience and decentralizes trust. There is no single point of failure, and the absence of a central coordinator eliminates the risk of aggregator bias or compromise. Moreover, decentralized FL enables collaboration among participants that belong to different organizations or jurisdictions and cannot rely on a neutral third party. However, these benefits come at the cost of higher communication overhead, complex synchronization requirements, and difficulty guaranteeing convergence, especially under non-IID data distributions and asynchronous updates. Protocols for secure communication, update verification, and identity authentication are needed to prevent malicious behavior and ensure model integrity. Because of these complexities, decentralized FL remains an active area of research and is best suited to scenarios that require strong autonomy and fault tolerance.<sup>[2]</sup>
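
Gossip-style averaging on a ring can be sketched as below, assuming synchronous rounds and a single scalar per node as a stand-in for a full model vector (<code>gossip_round</code> is a hypothetical helper). Each node repeatedly averages its model with those of its two neighbors. Because every node on a ring has the same degree, each step preserves the network-wide average, so all nodes converge to it without any central server.

```python
def gossip_round(models, neighbors):
    """Each node replaces its model with the mean of its own and its neighbors' models."""
    return [
        (models[i] + sum(models[j] for j in neighbors[i])) / (1 + len(neighbors[i]))
        for i in range(len(models))
    ]

n = 6
# Ring topology: node i exchanges models only with nodes i-1 and i+1 (mod n)
neighbors = {i: [(i - 1) % n, (i + 1) % n] for i in range(n)}
models = [float(i) for i in range(n)]  # stand-ins for locally trained models; mean is 2.5

for _ in range(50):
    models = gossip_round(models, neighbors)
print([round(m, 4) for m in models])  # every node ends up at the network average, 2.5
```

On irregular topologies, plain neighbor averaging can drift away from the true mean; weighting schemes such as Metropolis-Hastings weights are a common remedy.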

== 5.2.3 Hierarchical Architecture ==

Hierarchical FL is a hybrid architecture that introduces one or more intermediate layers of aggregators, often edge servers, between the clients and the global coordinator. Clients are organized into logical or geographical groups, each connected to an edge server. Clients send their local model updates to their edge aggregator, which performs a preliminary aggregation. The edge servers then forward their aggregated results to the cloud server, where a final aggregation produces the updated global model.

This multi-tiered architecture is designed to address the scalability and efficiency challenges inherent in centralized systems while avoiding the coordination overhead of full decentralization. Hierarchical FL is especially well suited to edge computing environments where data, clients, and compute resources are distributed across structured clusters, such as hospitals within a healthcare network or base stations in a telecommunications infrastructure.

One key advantage of hierarchical FL is communication optimization. Because aggregation happens first at the edge nodes, much less data crosses wide-area networks. This model also supports region-specific personalization by allowing edge servers to maintain specialized sub-models adapted to local client behavior. Hierarchical FL can also enable asynchronous, fault-tolerant training by isolating disruptions within specific clusters. However, this architecture still depends on reliable edge aggregators and introduces new challenges in cross-layer consistency, scheduling, and privacy preservation across multiple tiers.<sup>[1]</sup><sup>[3]</sup>
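
Two-tier aggregation can be sketched as follows, assuming scalar models and a hypothetical mapping of edge servers to their clients. Each edge server computes a sample-weighted average of its own clients; the cloud then averages the edge results, weighted by each edge server's total sample count. With this weighting the result equals flat FedAvg over all clients, while only one aggregated model per edge server crosses the wide-area network.

```python
def weighted_average(pairs):
    """Return (sample-weighted mean, total samples) for (value, num_samples) pairs."""
    total = sum(n for _, n in pairs)
    return sum(v * n for v, n in pairs) / total, total

# Hypothetical topology: edge server -> [(client_model, num_samples), ...]
edge_groups = {
    "edge_a": [(1.0, 100), (2.0, 300)],
    "edge_b": [(4.0, 50), (3.0, 50), (5.0, 100)],
}

# Tier 1: each edge server aggregates only its own clients
edge_results = [weighted_average(group) for group in edge_groups.values()]
# Tier 2: the cloud aggregates one (model, sample_count) pair per edge server
hierarchical_model, _ = weighted_average(edge_results)

# Reference: flat FedAvg over every client at once
flat_model, _ = weighted_average([c for group in edge_groups.values() for c in group])
print(hierarchical_model, flat_model)  # both about 2.5833
```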

= 5.3 Aggregation Algorithms and Communication Efficiency =

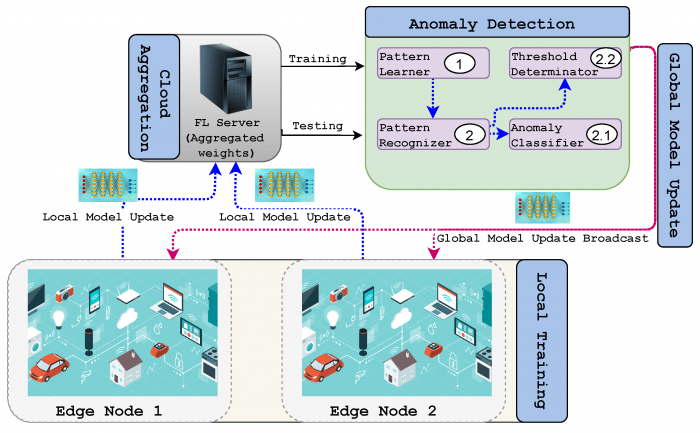

Aggregation is a fundamental operation in Federated Learning (FL), where updates from multiple edge clients are merged to form a new global model. The quality, stability, and efficiency of the FL process depend heavily on the aggregation strategy employed. In edge environments characterized by device heterogeneity and non-identical data distributions, choosing the right aggregation algorithm is essential to ensure reliable convergence and effective collaboration.

[[File:Aggregation.png|thumb|center|700px|Federated Learning protocol showing client selection, local training, model update, and aggregation. Source: Adapted from Federated Learning in Edge Computing: A Systematic Survey<sup>[1]</sup>]]

== 5.3.1 Key Aggregation Algorithms ==

{| class="wikitable"
|+ Comparison of Aggregation Algorithms in Federated Learning
! Algorithm !! Description !! Handles Non-IID Data !! Server-Side Optimization !! Typical Use Case
|-
| FedAvg || Performs weighted averaging of client models based on dataset size. Simple and communication-efficient. || Limited || No || Basic federated setups with IID or mildly non-IID data.
|-
| FedProx || Adds a proximal term to the local loss function to limit client drift. Stabilizes training with diverse data. || Yes || No || Suitable for edge deployments with high data heterogeneity or resource-limited clients.
|-
| FedOpt || Applies adaptive optimizers (e.g., FedAdam, FedYogi) to aggregated updates. Improves convergence in dynamic systems. || Yes || Yes || Used in large-scale systems or settings with unstable participation and gradient variability.
|}

The most widely adopted aggregation method is Federated Averaging (FedAvg), introduced in the foundational work by McMahan et al.<sup>[1]</sup> FedAvg averages the model parameters received from participating clients, typically weighting each client by the size of its local dataset. This simple yet effective method reduces communication frequency by letting each device perform multiple local training steps before sending its updated model to the server. However, FedAvg works best when data across clients is balanced and independent and identically distributed (IID), conditions rarely satisfied in edge computing environments, where client datasets are often highly non-IID, sparse, or skewed.<sup>[2]</sup>
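
The weighted average can be written as below, using illustrative notation that is not taken from the cited sources: in round t, S_t is the set of participating clients, n_k is the number of samples on client k, and w_{t+1}^k is the model that client k returns after its local training steps starting from the global model w_t.

```latex
w_{t+1} \;=\; \sum_{k \in S_t} \frac{n_k}{n}\, w_{t+1}^{k},
\qquad
n \;=\; \sum_{k \in S_t} n_k
```

For example, two clients holding 100 and 300 samples that return the scalar models 1.0 and 2.0 yield (100 × 1.0 + 300 × 2.0) / 400 = 1.75.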

To address these limitations, several advanced aggregation algorithms have been proposed. One notable extension is FedProx, which modifies each client's local objective by adding a proximal term that penalizes deviation from the current global model. This constrains local training and improves stability when data is heterogeneous. FedProx also tolerates variable amounts of local work, so clients with limited resources or intermittent connectivity can contribute partial updates, which makes it more robust in practical edge deployments.
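
FedProx-style local training can be sketched as below, assuming a toy scalar model; <code>fedprox_local_train</code> and the chosen values of <code>mu</code> are illustrative. The proximal term (mu / 2)(w − w_global)^2 adds mu(w − w_global) to every gradient step, pulling the local model back toward the global one; with mu = 0 the procedure reduces to ordinary local training.

```python
def fedprox_local_train(global_w, data, mu, lr=0.05, epochs=5):
    """Per-sample gradient steps on (w*x - y)^2 + (mu / 2) * (w - global_w)^2, from global_w."""
    w = global_w
    for _ in range(epochs):
        for x, y in data:
            grad_loss = 2 * (w * x - y) * x   # gradient of the local squared error
            grad_prox = mu * (w - global_w)   # gradient of the proximal penalty
            w -= lr * (grad_loss + grad_prox)
    return w

# A client whose skewed local data alone would pull the model all the way to w = 10
skewed_data = [(1.0, 10.0)] * 20
plain = fedprox_local_train(0.0, skewed_data, mu=0.0)   # ordinary local training
prox = fedprox_local_train(0.0, skewed_data, mu=5.0)    # proximal term limits drift
print(f"mu=0: {plain:.3f}, mu=5: {prox:.3f}")
```

With these numbers, the mu = 5 client settles near 20/7 (about 2.857), the minimizer of the penalized objective, instead of drifting to 10.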

Another family of aggregation algorithms is FedOpt, which includes adaptive server-side optimizers such as FedAdam and FedYogi. These algorithms adapt optimization methods from centralized training and apply them at the aggregation step, treating the averaged client update as a pseudo-gradient; this can speed up convergence and improve generalization under complex, real-world data distributions. Collectively, these variants address critical FL challenges such as slow convergence, client drift, and update divergence caused by heterogeneity in both data and device capabilities.<sup>[1]</sup><sup>[3]</sup>
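
A server-side adaptive step can be sketched as below. It follows the general shape of FedAdam under stated assumptions: a scalar toy model, no bias correction, and arbitrary hyperparameter values; <code>FedAdamServer</code> is a hypothetical class, not a library API. The weighted average of client models minus the current global model serves as the pseudo-gradient that feeds Adam-style moment estimates.

```python
import math

class FedAdamServer:
    """Server-side Adam-style update on the averaged client delta (scalar toy model).

    The weighted average of client deltas acts as a pseudo-gradient.
    This sketch applies no bias correction.
    """

    def __init__(self, w0, lr=0.1, beta1=0.9, beta2=0.99, tau=1e-3):
        self.w = w0
        self.lr, self.beta1, self.beta2, self.tau = lr, beta1, beta2, tau
        self.m = 0.0  # first-moment (momentum) estimate
        self.v = 0.0  # second-moment estimate

    def step(self, client_models, sizes):
        total = sum(sizes)
        avg = sum(w * n for w, n in zip(client_models, sizes)) / total
        delta = avg - self.w  # pseudo-gradient: the direction the clients moved
        self.m = self.beta1 * self.m + (1 - self.beta1) * delta
        self.v = self.beta2 * self.v + (1 - self.beta2) * delta ** 2
        self.w += self.lr * self.m / (math.sqrt(self.v) + self.tau)
        return self.w

server = FedAdamServer(w0=0.0)
new_w = server.step(client_models=[1.0, 3.0], sizes=[100, 100])
print(new_w)
```

A plain FedAvg server would jump straight to the client average (2.0) here; the adaptive server instead moves by roughly <code>lr</code> in early rounds and accumulates momentum afterwards, behavior that its hyperparameters (lr, beta1, beta2, tau) control.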

== 5.3.2 Communication Efficiency in Edge-Based FL ==

Communication remains one of the most critical bottlenecks in deploying FL at the edge, where devices often suffer from limited bandwidth, intermittent connectivity, and energy constraints. Several strategies have been developed to address this:

* '''Gradient quantization''': Reduces the size of transmitted updates by lowering numerical precision (e.g., from 32-bit to 8-bit values).

* '''Gradient sparsification''': Transmits only the most significant changes in the model, for example the top-k entries by magnitude, and discards negligible ones.

* '''Local update batching''': Lets devices perform multiple local training steps before sending updates, reducing the frequency of synchronization.
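
Quantization and top-k sparsification can be sketched as below, assuming a single flat update vector and hypothetical helper names. The quantizer uses deterministic rounding over one global range; practical systems often quantize per tensor or per chunk, may use stochastic rounding, and frequently keep the discarded sparsification residual locally for later rounds. None of those refinements is shown.

```python
def quantize_8bit(update):
    """Uniform 8-bit quantization: map each float to one of 256 levels between min and max."""
    lo, hi = min(update), max(update)
    scale = (hi - lo) / 255 or 1.0  # fall back to 1.0 if all values are equal
    codes = [round((u - lo) / scale) for u in update]  # integers in [0, 255]
    return codes, lo, scale  # transmit 1 byte per entry plus two floats

def dequantize_8bit(codes, lo, scale):
    return [lo + c * scale for c in codes]

def top_k_sparsify(update, k):
    """Keep only the k largest-magnitude entries as (index, value) pairs."""
    top = sorted(range(len(update)), key=lambda i: abs(update[i]), reverse=True)[:k]
    return [(i, update[i]) for i in sorted(top)]

update = [0.02, -0.91, 0.005, 0.47, -0.03, 0.0, 0.88, -0.12]

codes, lo, scale = quantize_8bit(update)
restored = dequantize_8bit(codes, lo, scale)
max_err = max(abs(a - b) for a, b in zip(update, restored))
print(f"max quantization error {max_err:.5f} (bound: scale / 2 = {scale / 2:.5f})")

print(top_k_sparsify(update, k=3))  # [(1, -0.91), (3, 0.47), (6, 0.88)]
```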

In addition, client selection strategies dynamically choose a subset of devices to participate in each round based on criteria such as availability, data quality, hardware capacity, or trust level. These communication optimizations are crucial for keeping FL scalable, efficient, and deployable across millions of edge nodes without overloading the network or draining device batteries.<sup>[1]</sup><sup>[2]</sup><sup>[3]</sup>

= 5.4 Privacy Mechanisms =

Privacy and data confidentiality are central design goals of Federated Learning (FL), particularly in edge computing scenarios where numerous edge and IoT devices (e.g., hospital servers, autonomous vehicles) gather sensitive data. Although FL does not require raw data to leave each client’s device, model updates can still leak private information or be correlated with individual data points. To address these risks, various privacy-preserving mechanisms have been proposed in the literature.<sup>[1]</sup><sup>[2]</sup><sup>[3]</sup>

== 5.4.1 Differential Privacy (DP) ==

Differential Privacy (DP) is a formal framework that bounds how much any single record can influence a computation's output (e.g., parameter updates), so that the output reveals little about whether that record was present. In FL, DP typically involves clipping each client's update to a bounded norm and injecting calibrated noise into gradients or model weights. The noise is calibrated so that the global model’s performance remains acceptable, yet attackers cannot reliably infer whether any single data sample was in the training set.

A step-by-step view of DP in an FL round can be summarized as follows:

# Clients fetch the global model and compute local gradients.
# Before transmitting, clients clip their updates and add calibrated random noise to mask individual data patterns.
# The central server aggregates the noisy updates to produce a new global model.
# Clients download the updated global model for further local training.

By tuning the privacy budget (ε, δ), where smaller values give stronger privacy guarantees, DP trades off privacy against model utility.<sup>[1]</sup><sup>[4]</sup>
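
Client-side clipping and noising can be sketched as below, following the Gaussian-mechanism recipe commonly used in DP-SGD-style training; the cited works may use other mechanisms. The helper names and parameter values are hypothetical, and converting <code>noise_multiplier</code> into a concrete (ε, δ) guarantee requires a privacy accountant, which is not shown.

```python
import math
import random

def clip_update(update, clip_norm):
    """Scale the update so its L2 norm is at most clip_norm (bounds each client's influence)."""
    norm = math.sqrt(sum(u * u for u in update))
    factor = min(1.0, clip_norm / norm) if norm > 0 else 1.0
    return [u * factor for u in update]

def privatize(update, clip_norm, noise_multiplier, rng):
    """Clip, then add Gaussian noise with standard deviation noise_multiplier * clip_norm."""
    clipped = clip_update(update, clip_norm)
    sigma = noise_multiplier * clip_norm
    return [u + rng.gauss(0.0, sigma) for u in clipped]

rng = random.Random(42)
raw_update = [3.0, 4.0]  # L2 norm 5.0
print(clip_update(raw_update, clip_norm=1.0))  # approximately [0.6, 0.8]
print(privatize(raw_update, clip_norm=1.0, noise_multiplier=1.1, rng=rng))
```

Clipping is what makes the noise meaningful: without a bound on each update's norm, no fixed noise level could hide a single client's contribution.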

== 5.4.2 Secure Aggregation ==

Secure Aggregation (SecAgg) is a protocol that masks or encrypts local updates before they reach the server, so that only the aggregated result is revealed. A typical SecAgg workflow includes:

# Each client randomly splits its model update into multiple shares.
# These shares are exchanged among clients and the server so that no single party sees any complete update.
# The server obtains only the sum of all client updates, never individual parameters.

This approach can thwart internal adversaries who might try to reconstruct local data from raw updates.<sup>[2]</sup> SecAgg is crucial for preserving confidentiality, especially in IoT-based FL systems subject to data privacy regulations (such as GDPR and HIPAA) that restrict exposure of personal data.
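
The additive secret-sharing idea in the workflow above can be sketched as follows, assuming integer-encoded updates (real-valued updates would first be mapped to fixed-point integers) and a hypothetical modulus. Each client splits its value into random shares that sum to it modulo <code>MODULUS</code>; each party adds up the shares it holds, and combining those partial sums reveals only the total. Practical SecAgg protocols additionally use pairwise masking, key agreement, and dropout recovery, none of which is shown.

```python
import random

MODULUS = 2**32  # hypothetical share space; real protocols fix this as a parameter

def share(value, num_shares, rng):
    """Split an integer into num_shares random shares that sum to value mod MODULUS."""
    shares = [rng.randrange(MODULUS) for _ in range(num_shares - 1)]
    shares.append((value - sum(shares)) % MODULUS)
    return shares

rng = random.Random(7)
client_updates = [12, 30, 7]  # integer-encoded (e.g., fixed-point) client updates
num_clients = len(client_updates)

# Client i sends its j-th share to party j; any single share looks uniformly random
all_shares = [share(u, num_clients, rng) for u in client_updates]

# Party j only ever sees one share from each client and sums them
partial_sums = [
    sum(all_shares[i][j] for i in range(num_clients)) % MODULUS
    for j in range(num_clients)
]

# Combining the partial sums reveals the total, never an individual update
print(sum(partial_sums) % MODULUS)  # 49
```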

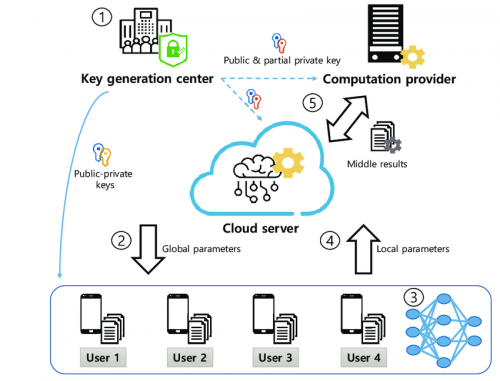

== 5.4.3 Homomorphic Encryption and SMPC ==

Homomorphic Encryption (HE) supports computation on encrypted data without decryption. In FL, homomorphically encrypted updates can be aggregated by the server without it ever seeing cleartext values. This approach, however, adds substantial computational overhead, which can be burdensome for resource-limited IoT edge devices.<sup>[3]</sup>
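
Additively homomorphic aggregation can be sketched with a toy Paillier cryptosystem, one well-known additively homomorphic scheme; the cited works may use other schemes. The primes are tiny and the scheme is therefore NOT secure. Real deployments use moduli of 2048 bits or more and encode floats as fixed-point integers, and the decryption key is assumed to be held by the clients or a separate key authority, not by the aggregating server. Multiplying ciphertexts adds the hidden plaintexts.

```python
import math
import random

# Toy Paillier key pair with tiny primes: NOT secure, illustration only
p, q = 293, 433
n = p * q
n_sq = n * n
g = n + 1                                          # common simplified generator choice
lam = (p - 1) * (q - 1) // math.gcd(p - 1, q - 1)  # lcm(p - 1, q - 1)
mu = pow(lam, -1, n)                               # modular inverse (Python 3.8+)

def encrypt(m, rng):
    """Encrypt an integer 0 <= m < n with fresh randomness r coprime to n."""
    while True:
        r = rng.randrange(1, n)
        if math.gcd(r, n) == 1:
            break
    return (pow(g, m, n_sq) * pow(r, n, n_sq)) % n_sq

def decrypt(c):
    """Recover m = L(c^lam mod n^2) * mu mod n, where L(x) = (x - 1) // n."""
    x = pow(c, lam, n_sq)
    return ((x - 1) // n) * mu % n

rng = random.Random(1)
client_updates = [15, 27, 8]  # integer-encoded updates
ciphertexts = [encrypt(u, rng) for u in client_updates]

# The server multiplies ciphertexts without decrypting, which adds the plaintexts
aggregate = 1
for c in ciphertexts:
    aggregate = aggregate * c % n_sq
print(decrypt(aggregate))  # 50
```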

Secure Multi-Party Computation (SMPC) is a related set of techniques that lets multiple parties jointly compute a function over secret inputs. In FL, SMPC allows clients to compute the sum of their model updates without revealing individual updates. Although performance optimizations exist, SMPC remains challenging for large models with millions of parameters.<sup>[1]</sup><sup>[5]</sup>

== 5.4.4 IoT-Specific Considerations ==

In edge computing, IoT devices often capture highly sensitive data (patient records, vehicle sensor logs, etc.). Privacy measures must therefore run efficiently on low-power hardware while accommodating intermittent connectivity. For instance, a smart healthcare device storing patient records may combine DP-based local training with SecAgg to protect its updates before uploading them. An autonomous vehicle, by contrast, might adopt HE to protect sensor patterns relevant to real-time traffic analysis.

Together, these techniques form a multi-layered privacy defense tailored to distributed, resource-constrained IoT ecosystems.<sup>[4]</sup><sup>[5]</sup>

[[File:System-model-for-privacy-preserving-federated-learning.png|thumb|center|500px|System model illustrating privacy-preserving federated learning using homomorphic encryption.<br>Adapted from Privacy-Preserving Federated Learning Using Homomorphic Encryption.]]

= 5.5 Security Threats =

While Federated Learning (FL) enhances data privacy by ensuring that raw data remains on edge devices, it introduces significant security vulnerabilities due to its decentralized design and reliance on untrusted participants. In edge computing environments, where clients often operate with limited computational power and over unreliable networks, these threats are particularly pronounced.

== 5.5.1 Model Poisoning Attacks ==

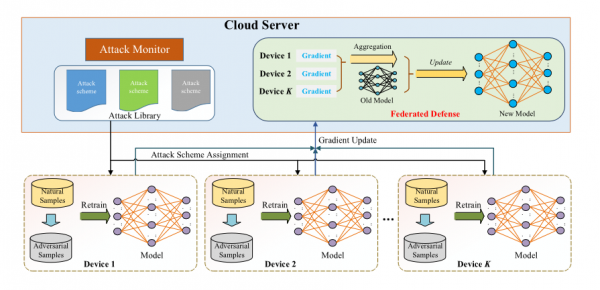

Model poisoning attacks are a critical threat in FL, especially in edge computing environments where the infrastructure is distributed and clients may be untrusted or loosely regulated. In this type of attack, malicious clients deliberately craft and submit harmful model updates during training to compromise the performance or integrity of the global model. These attacks are typically categorized as either untargeted, aimed at degrading overall model accuracy, or targeted (backdoor attacks), where the global model is manipulated to behave incorrectly in specific scenarios while appearing normal in others. For instance, an attacker might train its local model with a backdoor trigger, such as a specific pixel pattern in an image, so that the global model misclassifies inputs containing that pattern while still performing well on standard test cases.<sup>[1]</sup><sup>[4]</sup>

FL's reliance on aggregation algorithms like Federated Averaging (FedAvg), which simply computes a weighted average of local updates, makes it susceptible to these attacks. Because raw data is never shared, poisoned updates can be hard to detect, especially in non-IID settings where variability between clients is expected. Robust aggregation techniques such as Krum, Trimmed Mean, and Bulyan have been proposed to resist such manipulation by filtering out or down-weighting outlier contributions. However, these algorithms often introduce computational and communication overheads that are impractical for edge devices with limited power and processing capabilities.<sup>[2]</sup><sup>[4]</sup> Furthermore, adversaries can design subtle attacks that mimic benign statistical patterns, making them indistinguishable from legitimate updates. Emerging research explores anomaly detection based on update similarity and trust scoring, yet these solutions face limitations in large-scale or asynchronous FL deployments. Developing lightweight, real-time, and scalable defenses that remain effective under device heterogeneity and unreliable network conditions is still an unresolved challenge.<sup>[3]</sup><sup>[4]</sup>
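
Two of the simpler robust aggregators, coordinate-wise median and trimmed mean, can be sketched as below and compared with a plain mean, assuming small hypothetical update vectors and a single malicious client. Krum and Bulyan are not shown. The single outlier drags the plain mean far from the honest consensus, while the median and the trimmed mean stay close to it.

```python
import statistics

def plain_mean(updates):
    """Coordinate-wise mean (what unweighted averaging computes)."""
    return [sum(col) / len(col) for col in zip(*updates)]

def coordinate_median(updates):
    """Coordinate-wise median across client updates."""
    return [statistics.median(col) for col in zip(*updates)]

def trimmed_mean(updates, trim):
    """Coordinate-wise mean after dropping the `trim` largest and `trim` smallest values."""
    result = []
    for col in zip(*updates):
        kept = sorted(col)[trim:len(col) - trim]
        result.append(sum(kept) / len(kept))
    return result

honest = [[0.9, -0.1], [1.1, 0.1], [1.0, 0.0], [0.95, 0.05]]
poisoned = honest + [[100.0, -100.0]]  # one malicious client

print(plain_mean(poisoned))            # dragged far away from roughly [1, 0]
print(coordinate_median(poisoned))     # [1.0, 0.0]
print(trimmed_mean(poisoned, trim=1))  # close to [1, 0]
```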

[[File:FL_IoT_Attack_Detection.png|thumb|center|600px|Federated Learning-based architecture for adversarial sample detection and defense in IoT environments. Adapted from Federated Learning for Internet of Things: A Comprehensive Survey.]]

== 5.5.2 Data Poisoning Attacks ==

Data poisoning attacks target the integrity of FL by manipulating the training data on individual clients before model updates are generated. Unlike model poisoning, which corrupts gradients or weights directly, data poisoning operates at the dataset level, allowing adversaries to stealthily influence model behavior through biased or malicious data. Techniques include label flipping, outlier injection, and clean-label attacks that subtly alter data without visible artifacts. Since FL assumes client data remains private and uninspected, poisoned data can easily propagate harmful patterns into the global model, especially in non-IID edge scenarios.<sup>[2]</sup><sup>[3]</sup>

Edge devices are especially vulnerable to this form of attack because their limited compute and energy resources often preclude comprehensive input validation. The highly diverse and fragmented nature of edge data, such as medical readings or driving behavior, makes it difficult to establish a clear baseline for anomaly detection. Defense strategies include robust aggregation (e.g., Median, Trimmed Mean), anomaly detection, and DP. However, these methods can reduce model accuracy or increase complexity.<sup>[1]</sup><sup>[4]</sup> There is currently no foolproof way to detect data poisoning without violating privacy principles. As FL is deployed in more critical domains, mitigating these attacks while preserving data locality and system scalability remains an open and urgent research challenge.<sup>[3]</sup><sup>[4]</sup>

== 5.5.3 Inference and Membership Attacks ==

Inference attacks are a subtle yet powerful class of threats in FL, in which adversaries extract sensitive information from shared model updates rather than from raw data. These attacks exploit the iterative nature of FL training: by analyzing updates, especially from over-parameterized models, attackers can infer properties of the data or even reconstruct inputs. A key example is the membership inference attack, in which an adversary determines whether a specific data point was used in training. Such attacks become more effective in edge scenarios, where updates often correlate strongly with individual devices.<sup>[2]</sup><sup>[3]</sup>

As model complexity increases, so does the risk of gradient-based information leakage, and the small datasets typical of edge devices amplify this vulnerability. Attackers with access to model updates, particularly across multiple rounds, may perform gradient inversion to reconstruct training inputs. These risks are especially serious in sensitive fields like healthcare. Mitigations include Differential Privacy and Secure Aggregation, but both reduce accuracy or add system overhead.<sup>[4]</sup> Designing FL systems that preserve utility while protecting against inference remains a major ongoing challenge.<sup>[1]</sup><sup>[4]</sup>
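
Why shared gradients can leak inputs can be sketched with the idealized case of a single training example passing through one linear layer with a bias, under squared loss (the helper name is hypothetical). Since dL/dw = (dL/dz)·x and dL/db = dL/dz, dividing the two recovers x exactly whenever dL/dz is nonzero. Averaging over batches or several local steps removes this closed form, which is why many practical attacks instead optimize dummy inputs to match the observed gradients.

```python
def single_example_gradients(w, b, x, y):
    """Gradients of 0.5 * (w.x + b - y)^2 for one example (linear layer, squared loss)."""
    z = sum(wi * xi for wi, xi in zip(w, x)) + b
    dz = z - y                      # dLoss/dz
    grad_w = [dz * xi for xi in x]  # dLoss/dw_i = dz * x_i
    grad_b = dz                     # dLoss/db = dz
    return grad_w, grad_b

w, b = [0.5, -0.2, 0.1], 0.0
private_x, private_y = [3.0, 1.5, -2.0], -1.0  # the client's secret example

grad_w, grad_b = single_example_gradients(w, b, private_x, private_y)  # what gets shared
# The attacker divides the weight gradient by the bias gradient to cancel dz
reconstructed_x = [gw / grad_b for gw in grad_w]
print(reconstructed_x)  # matches private_x up to floating-point rounding
```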

== 5.5.4 Sybil and Free-Rider Attacks ==

Sybil attacks occur when a single adversary creates multiple fake clients (Sybil nodes) to manipulate the FL process. These clients may collude to skew aggregation results or overwhelm honest participants. This is especially dangerous in decentralized or large-scale FL environments, where authentication is weak or absent.<sup>[1]</sup> Without strong identity verification, an attacker can inject numerous poisoned updates, degrading model accuracy or preventing convergence entirely.

Traditional defenses such as IP throttling or user registration may violate privacy principles or be infeasible at the edge. Cryptographic registration, proof-of-work, and client reputation scoring have been explored, but each involves trade-offs. Clustering and anomaly detection can identify Sybil patterns, though adversaries may adapt their behavior to avoid detection.<sup>[4]</sup>
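
One similarity-based heuristic can be sketched as below, assuming Sybil clients controlled by one adversary push nearly identical updates; <code>flag_suspected_sybils</code> and the 0.99 threshold are hypothetical. It flags every pair of clients whose updates are almost parallel. Adversaries can add noise to evade such a check, and honest clients with very similar data may also be flagged, so a real system would combine this signal with others.

```python
import math

def cosine(a, b):
    """Cosine similarity of two vectors (0.0 if either is all zeros)."""
    dot = sum(x * y for x, y in zip(a, b))
    na = math.sqrt(sum(x * x for x in a))
    nb = math.sqrt(sum(y * y for y in b))
    return dot / (na * nb) if na and nb else 0.0

def flag_suspected_sybils(updates, threshold=0.99):
    """Flag clients whose update is nearly parallel to another client's update."""
    flagged = set()
    ids = list(updates)
    for i, a in enumerate(ids):
        for b in ids[i + 1:]:
            if cosine(updates[a], updates[b]) >= threshold:
                flagged.update((a, b))
    return flagged

updates = {
    "honest_1": [0.9, 0.1, -0.3],
    "honest_2": [0.2, 0.8, 0.4],
    "honest_3": [-0.5, 0.3, 0.9],
    "sybil_1": [5.0, -5.0, 5.0],
    "sybil_2": [5.01, -4.99, 5.0],
}
print(sorted(flag_suspected_sybils(updates)))  # ['sybil_1', 'sybil_2']
```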

Free-rider attacks involve clients that participate in training but contribute little or nothing (e.g., by sending stale models or dummy gradients) while still downloading and benefiting from the global model. This undermines fairness and wastes resources, especially on networks where honest clients spend real bandwidth and energy.<sup>[3]</sup> Mitigation strategies include contribution-aware aggregation and client auditing.

== 5.5.5 Malicious Server Attacks ==

In classical centralized FL, the server acts as the coordinator, receiving updates and distributing models. If compromised, however, the server becomes a major threat: it can perform inference attacks, drop honest client updates, tamper with model weights, or inject adversarial logic. This poses significant risk in domains such as healthcare and autonomous systems.<sup>[1]</sup><sup>[3]</sup>

Secure Aggregation protects client updates by masking them so that only aggregate values are visible. Homomorphic Encryption allows computation on encrypted data, while SMPC enables privacy-preserving joint computation. However, all three approaches involve high computational or communication costs.<sup>[4]</sup> Decentralized or hierarchical architectures reduce the single-point-of-failure risk but introduce new challenges around trust coordination and efficiency.<sup>[2]</sup><sup>[4]</sup>

= 5.6 Resource-Efficient Model Training =

In Federated Learning (FL), especially within edge computing environments, resource-efficient model training is crucial due to the inherent limitations of edge devices, such as constrained computational power, limited memory, and restricted communication bandwidth. Addressing these challenges involves strategies that optimize the use of local resources while maintaining the integrity and performance of the global model. Key approaches include model compression techniques, efficient communication protocols, and adaptive client selection methods.

== 5.6.1 Model Compression Techniques ==

Model compression techniques are essential for reducing the computational and storage demands of FL models on edge devices. By decreasing model size, these techniques make local training more efficient and reduce communication overhead during model updates. Common methods include:

* '''Pruning''': Removes less significant weights or neurons from the neural network, producing a sparser model that requires less computation and storage. For instance, one study proposed a framework in which the global model is pruned on a powerful server before being distributed to clients, reducing the computational load on resource-constrained edge devices.<sup>[1]</sup>

* '''Quantization''': Reduces the precision of the model's weights, for example from 32-bit floating-point numbers to 8-bit integers, which decreases model size and accelerates computation. Careful implementation is needed to balance size reduction against potential accuracy loss.

* '''Knowledge Distillation''': A large, complex model (the teacher) is used to train a smaller, simpler model (the student) by transferring its knowledge, allowing the student to achieve comparable performance with lower resource requirements. This technique has been applied in FL to accommodate the constraints of edge devices.<sup>[2]</sup>
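
Pruning can be sketched with magnitude-based pruning, one common criterion for deciding which weights are "less significant"; it is not necessarily the criterion used by the framework cited above, and <code>magnitude_prune</code> is a hypothetical helper operating on a flat list of weights. The smallest-magnitude fraction of weights is set to zero.

```python
def magnitude_prune(weights, sparsity):
    """Zero out the smallest-magnitude fraction `sparsity` of the weights."""
    num_to_prune = int(len(weights) * sparsity)
    order = sorted(range(len(weights)), key=lambda i: abs(weights[i]))
    pruned = list(weights)
    for i in order[:num_to_prune]:
        pruned[i] = 0.0
    return pruned

weights = [0.8, -0.05, 0.3, 0.01, -0.6, 0.02, 0.15, -0.9]
print(magnitude_prune(weights, sparsity=0.5))
# [0.8, 0.0, 0.3, 0.0, -0.6, 0.0, 0.0, -0.9]
```

Zeroed weights only save computation and storage when the runtime or the encoding exploits sparsity, for example through sparse formats or structured pruning of whole neurons.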

== 5.6.2 Efficient Communication Protocols ==

Efficient communication protocols are vital for mitigating the communication bottleneck in FL, since frequent transmission of model updates between clients and the central server can overwhelm limited network resources. Strategies to improve communication efficiency include:

* '''Update Sparsification''': Transmits only the most significant updates or gradients, reducing the amount of data sent in each communication round. By focusing on the most impactful changes, sparsification lowers communication overhead, typically with only a small effect on model performance.

* '''Compression Algorithms''': Applying lossless data compression to model updates before transmission can significantly reduce their size. For example, Huffman coding or run-length encoding can be used to compress the updates, leading to more efficient communication.<sup>[3]</sup>

* '''Adaptive Communication Frequency''': Adjusting how often clients communicate based on training progress or convergence can conserve bandwidth. For instance, clients may perform multiple local training iterations before sending updates to the server, reducing the number of communication rounds required.
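
Run-length encoding of a sparsified update can be sketched as below, assuming most entries are exactly zero after sparsification; the encoding format (a <code>('Z', run_length)</code> marker for each run of zeros) is hypothetical. The encoding is lossless, so the server can rebuild the update exactly. Huffman coding, the other example mentioned above, is not shown.

```python
def rle_zeros(update):
    """Encode each run of zeros as ('Z', run_length); keep nonzero values as-is."""
    encoded, run = [], 0
    for u in update:
        if u == 0:
            run += 1
        else:
            if run:
                encoded.append(("Z", run))
                run = 0
            encoded.append(u)
    if run:
        encoded.append(("Z", run))
    return encoded

def rle_decode(encoded):
    """Expand ('Z', run_length) markers back into runs of 0.0."""
    out = []
    for item in encoded:
        if isinstance(item, tuple):
            out.extend([0.0] * item[1])
        else:
            out.append(item)
    return out

sparse_update = [0.0, 0.0, 0.0, 0.7, 0.0, 0.0, -0.4, 0.0, 0.0, 0.0, 0.0]
packed = rle_zeros(sparse_update)
print(packed)  # [('Z', 3), 0.7, ('Z', 2), -0.4, ('Z', 4)]
```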

== 5.6.3 Adaptive Client Selection Methods ==

Adaptive client selection methods optimize which clients participate in each training round to improve resource utilization and overall model performance. Approaches include:

* '''Resource-Aware Selection''': Prioritizing clients with higher computational capability and better network connectivity can make training more efficient. By assessing client resource availability, the FL system can make informed decisions about which clients to involve in each round.<sup>[4]</sup>

* '''Clustered Federated Learning''': Grouping clients by similarity in data distribution or system characteristics allows more efficient training. Clients within the same cluster collaboratively train a sub-model, which is then aggregated into the global model, reducing the overall communication and computation burden.<sup>[5]</sup>

* '''Early Stopping Strategies''': Terminating local training once certain criteria are met can conserve resources. For example, if a client's local model reaches a predefined accuracy threshold, the client can stop training and send its update to the server, saving computation.<sup>[6]</sup>
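
Resource-aware selection can be sketched as below, assuming each client reports hypothetical availability, compute, bandwidth, and battery fields; the equal 0.5 weights and the thresholds are arbitrary choices for illustration. Ineligible clients are filtered out first, and the remaining clients are ranked by a combined score.

```python
from dataclasses import dataclass

@dataclass
class ClientInfo:
    client_id: str
    available: bool
    compute_score: float   # hypothetical normalized compute capacity in [0, 1]
    bandwidth_mbps: float
    battery_pct: float

def select_clients(clients, k, min_battery=20.0, max_bandwidth=100.0):
    """Pick up to k available clients with enough battery, ranked by a simple resource score."""
    eligible = [c for c in clients if c.available and c.battery_pct >= min_battery]

    def score(c):
        bandwidth = min(c.bandwidth_mbps, max_bandwidth) / max_bandwidth  # normalize to [0, 1]
        return 0.5 * c.compute_score + 0.5 * bandwidth

    return [c.client_id for c in sorted(eligible, key=score, reverse=True)[:k]]

pool = [
    ClientInfo("phone_a", True, 0.9, 50.0, 80.0),
    ClientInfo("sensor_b", True, 0.2, 5.0, 90.0),
    ClientInfo("tablet_c", True, 0.7, 100.0, 15.0),   # battery too low
    ClientInfo("laptop_d", True, 1.0, 200.0, 60.0),
    ClientInfo("phone_e", False, 0.8, 80.0, 70.0),    # offline
]
print(select_clients(pool, k=2))  # ['laptop_d', 'phone_a']
```

Always picking the best-resourced devices can bias the global model toward their data, so a scheduler may also mix in randomness or fairness constraints.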

Incorporating these strategies into the FL framework enables more efficient utilization of the limited resources available on edge devices. By tailoring model training processes to the specific constraints of these devices, it is possible to achieve effective and scalable FL deployments in edge computing environments. | |||

= 5.7 Real-World Use Cases = | |||

=== 5.7.1 Healthcare === | |||

Healthcare is one of the most impactful and widely studied application domains for Federated Learning (FL), particularly due to its stringent privacy requirements and highly sensitive patient data. Traditional machine learning models typically require centralizing large amounts of medical data—ranging from diagnostic images and electronic health records (EHRs) to genomic sequences—posing serious risks under regulations like HIPAA and GDPR. FL addresses this challenge by enabling multiple hospitals, clinics, or wearable devices to collaboratively train models while keeping all patient data on-site<sup>[1][3]</sup>. | |||

One notable example is the application of FL to train predictive models for disease diagnosis using MRI scans or histopathology images across multiple hospitals. In these setups, each institution trains a model locally using its patient data and only shares encrypted or aggregated model updates with a central aggregator. This approach has been successfully used in training FL-based models for COVID-19 detection, brain tumor segmentation, and diabetic retinopathy classification<sup>[1][2]</sup>. The benefit lies in improved model generalization due to access to diverse and heterogeneous datasets, without the legal and ethical complications of data sharing. | |||

In addition to institutional collaboration, FL is also used in consumer health scenarios such as smart wearables. Devices like smartwatches and fitness trackers continuously collect user health data (e.g., heart rate, blood pressure, activity logs) that can be used to train personalized health monitoring systems. FL allows these models to be trained locally on-device, thereby reducing cloud dependency and latency while preserving individual privacy<sup>[3]</sup>.

Challenges in this domain include handling non-IID data distributions across institutions, varied device capabilities, and communication constraints. Techniques like personalization layers, hierarchical FL, and secure aggregation protocols are often integrated into healthcare FL deployments to overcome these issues<sup>[4][5]</sup>. As the need for predictive healthcare analytics grows, FL is becoming foundational to building AI systems that are not only accurate but also ethically and legally compliant in multi-party medical environments.

=== 5.7.2 Smart Cities ===

Smart cities rely on a vast network of distributed sensors, devices, and systems that continuously generate massive amounts of data ranging from traffic patterns and air quality metrics to public safety footage and energy usage statistics. Federated Learning (FL) offers a privacy-preserving and bandwidth-efficient approach to harness this decentralized data without centralizing it on cloud servers, making it especially suitable for urban-scale intelligence systems<sup>[1][4]</sup>.

One of the core applications of FL in smart cities is intelligent traffic management. Edge devices such as surveillance cameras, traffic lights, and vehicle sensors can collaboratively train machine learning models to predict congestion, optimize signal timing, and detect traffic violations in real time. Each node performs local model training based on its location-specific data and shares only the model updates—not the raw video feeds or sensor data—thereby preserving commuter privacy and minimizing network load<sup>[2]</sup>. Cities like Hangzhou in China and certain municipalities in Europe have begun exploring such FL-based traffic optimization systems to handle increasing urban mobility challenges.

Another promising use case is smart energy management. Federated models can be trained across household smart meters and utility grid edge nodes to forecast energy consumption patterns, detect anomalies, and adjust power distribution dynamically. This ensures data privacy for residents while enhancing grid efficiency and sustainability. Moreover, FL supports demand-response systems by learning user behavior at the edge and coordinating energy usage without exposing individual profiles<sup>[3][5]</sup>.

FL also finds use in smart surveillance and public safety applications. For instance, edge cameras and sensors in public spaces can collaboratively learn suspicious activity patterns or detect emergencies without sending identifiable footage to centralized servers. Privacy is further enforced using secure aggregation and differential privacy mechanisms<sup>[2][4]</sup>.

Despite its advantages, FL in smart cities faces challenges such as unreliable connectivity between edge nodes, heterogeneous device capabilities, and adversarial threats. To mitigate these, researchers are applying hierarchical FL architectures, robust aggregation schemes, and client selection techniques tailored to the dynamic topology of urban networks<sup>[4]</sup>. As cities become increasingly digitized, FL emerges as a key enabler of real-time, privacy-preserving, and distributed AI for sustainable urban development.

=== 5.7.3 Autonomous Vehicles ===

Autonomous vehicles (AVs), including self-driving cars and drones, generate enormous volumes of sensor data in real time—ranging from camera feeds and LiDAR scans to GPS coordinates and driver behavior logs. Federated Learning (FL) offers a compelling framework for training collaborative AI models across fleets of vehicles without the need to transmit this raw, privacy-sensitive data to the cloud. Instead, each vehicle trains a local model and only communicates encrypted or compressed model updates to a central or distributed aggregator<sup>[1][3]</sup>.

A major application of FL in AVs is in the area of ''environmental perception'' and ''object detection''. Vehicles encounter different driving conditions across cities, weather patterns, and traffic densities. Using FL, vehicles can share what they learn about rare or localized road events—such as construction zones or unusual signage—without disclosing personally identifiable sensor data. This helps build more generalized and robust global models for autonomous navigation<sup>[2]</sup>. Companies like Tesla and Toyota Research Institute have explored federated or decentralized learning systems for AVs, aiming to continuously improve on-board models without compromising customer privacy.

FL is also leveraged for ''driver behavior modeling'' in semi-autonomous vehicles. By locally analyzing acceleration patterns, braking habits, and reaction times, vehicles can personalize safety recommendations or driving assistance features. When aggregated via FL, this data helps improve shared predictive models for accident avoidance or route optimization across entire fleets<sup>[4]</sup>.

However, the edge environments of AVs present unique challenges. Vehicles often operate with intermittent connectivity, variable compute resources, and high mobility. To address this, researchers have proposed ''asynchronous FL protocols'' and ''hierarchical aggregation'' where vehicles communicate with nearby edge nodes or road-side units (RSUs) that serve as intermediaries for model updates<sup>[5]</sup>. Additionally, ensuring security against ''model poisoning attacks'' is critical in vehicular networks. Robust aggregation and anomaly detection mechanisms are used to validate updates from potentially compromised vehicles<sup>[3][4]</sup>.
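One common way to realize asynchronous FL, in the spirit of staleness-aware schemes such as FedAsync, is to mix each arriving vehicle's model into the global model immediately, with a weight that shrinks as the update grows stale. The sketch below assumes a polynomial decay of the form (staleness + 1)^(-a); the helper names, the decay form, and the default constants are illustrative assumptions, not the protocols cited in [5].

```python
from typing import List

def staleness_weight(base_alpha: float, staleness: int, a: float = 0.5) -> float:
    """Polynomial decay: an update computed from an older global model counts for less."""
    return base_alpha * (staleness + 1) ** (-a)

def async_merge(global_w: List[float], client_w: List[float],
                client_version: int, server_version: int,
                base_alpha: float = 0.6) -> List[float]:
    """Blend one client's model into the global model as soon as it arrives."""
    staleness = server_version - client_version  # global updates since the client pulled
    alpha = staleness_weight(base_alpha, staleness)
    return [(1 - alpha) * g + alpha * c for g, c in zip(global_w, client_w)]


# A fresh update (staleness 0) moves the global model further than a stale one.
fresh = async_merge([0.0, 0.0], [1.0, 1.0], client_version=7, server_version=7)
stale = async_merge([0.0, 0.0], [1.0, 1.0], client_version=4, server_version=7)
print([round(x, 3) for x in fresh], [round(x, 3) for x in stale])  # [0.6, 0.6] [0.3, 0.3]
```

Because the server never waits for a full cohort, a vehicle that reconnects after a long gap can still contribute, but its outdated update is damped rather than allowed to overwrite newer progress.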

As AV ecosystems scale, FL is emerging as a key architecture for building secure, adaptive, and collaborative intelligence among connected vehicles—paving the way for safer roads and real-time cooperative decision-making.

=== 5.7.4 Finance ===

The financial industry handles highly sensitive data including transaction histories, credit scores, customer profiles, and fraud patterns—making privacy, security, and regulatory compliance central concerns. Federated Learning (FL) offers a transformative solution by enabling multiple financial institutions, mobile banking apps, and edge payment systems to collaboratively train machine learning models without exposing raw data to third parties. This helps meet stringent legal requirements such as GDPR, PCI-DSS, and national data localization laws while unlocking the power of cross-institutional intelligence<sup>[1][2]</sup>.

A primary use case in finance is ''fraud detection''. Banks and payment processors continuously monitor transaction streams to detect anomalies indicative of fraud or money laundering. Using FL, multiple banks can train a shared fraud detection model by learning from their own customer behaviors locally and contributing encrypted updates to a global model. This collaborative approach leads to more accurate and timely fraud detection without disclosing customer data across organizational boundaries<sup>[2][4]</sup>. Moreover, FL allows for faster adaptation to emerging threats by integrating insights from diverse geographies and transaction patterns.

Another important application is ''credit scoring and risk assessment''. Traditional credit scoring models often rely on centralized bureaus, which can be limited in scope and biased by incomplete data. FL enables lenders, fintech platforms, and credit unions to collectively build more representative models by incorporating local, decentralized data such as mobile usage behavior, alternative credit signals, or small business activity—all while preserving customer privacy<sup>[3][5]</sup>.

FL also plays a role in ''personalized financial services''. Mobile banking apps and robo-advisors can fine-tune investment recommendations or budgeting tools based on individual behavior, device-resident models, and FL-based collaboration across user groups. This enables banks to offer adaptive, privacy-preserving services without continuous cloud access<sup>[4]</sup>.

Challenges in this domain include heterogeneity in financial institutions’ infrastructure, non-IID data across customer segments, and real-time latency requirements for high-frequency trading environments. Secure Aggregation, Differential Privacy, and adaptive client selection techniques are commonly used to balance accuracy, security, and compliance<sup>[1][3]</sup>. As the financial sector continues to embrace digital transformation, FL is becoming a cornerstone for secure, personalized, and collaborative AI in banking and financial technology.

=== 5.7.5 Industrial IoT ===

Industrial IoT (IIoT) environments—such as smart manufacturing plants, oil refineries, power grids, and logistics hubs—generate massive volumes of operational data from distributed sensors, controllers, and machines. Federated Learning (FL) enables these IIoT systems to collaboratively train models across different factories, production lines, or machine clusters without transmitting raw industrial data to centralized servers. This is particularly important for preserving proprietary process information, complying with regulatory frameworks, and ensuring low-latency processing at the edge<sup>[1][3]</sup>.

One core use case is ''predictive maintenance'', where FL allows edge devices embedded in machines (e.g., motors, turbines, robots) to learn failure patterns from local sensor readings like vibration, temperature, or acoustic signals. These localized models are periodically aggregated to improve a shared global model capable of predicting failures across different machinery types or operational settings. This improves equipment uptime and safety while avoiding central storage of confidential operational data<sup>[2][4]</sup>.

FL is also used in ''quality control and defect detection''. Visual inspection systems in different factories can collaborate by training computer vision models that detect defects in manufacturing processes. FL allows knowledge sharing across factories that may use similar processes or materials, while respecting the industrial secrecy of each plant. By preserving data locality, FL helps manufacturers share model improvements without risking intellectual property exposure<sup>[3]</sup>.

In supply chain monitoring, FL enables logistics nodes, warehouses, and distribution centers to coordinate inventory forecasting and optimize routing models based on local data. These systems benefit from FL’s ability to respect competitive boundaries among vendors while improving the efficiency of global supply chains<sup>[4][5]</sup>.

Challenges in IIoT-based FL include highly non-IID data due to variation in production processes, limited compute resources on industrial edge devices, and communication constraints in isolated environments. To mitigate these, IIoT deployments often leverage hierarchical FL (e.g., plant-level edge servers aggregating from device clusters) and lightweight model architectures designed for embedded processors<sup>[1][2]</sup>. As industrial systems become increasingly connected, FL is emerging as a key enabler of secure, decentralized, and intelligent automation across critical infrastructure and smart manufacturing.

= 5.8 Open Challenges =

Federated Learning (FL) helps protect user data by training models directly on devices. While useful, FL faces many challenges that make it hard to use in real-world systems.<sup>[1]</sup><sup>[2]</sup><sup>[3]</sup><sup>[4]</sup><sup>[5]</sup><sup>[6]</sup>

* '''System heterogeneity''': Devices used in FL—like smartphones, sensors, or edge servers—have different speeds, memory, and battery life. Some may be too slow or lose power during training, causing delays. Solutions include using smaller models, pruning, partial updates, and early stopping.<sup>[1]</sup><sup>[6]</sup>

* '''Communication bottlenecks''': FL requires frequent communication between devices and a central server. Sending full model updates can overwhelm slow or unstable networks. To address this, updates are compressed with methods like quantization and sparsification, and knowledge distillation can replace the exchange of full model weights with the exchange of model predictions.<sup>[2]</sup><sup>[3]</sup>

* '''Statistical heterogeneity (Non-IID data)''': Each device collects different data based on user behavior and environment, leading to non-IID distributions. This can reduce global model accuracy. Solutions include clustered FL, personalized models, and meta-learning approaches.<sup>[2]</sup><sup>[5]</sup>

* '''Privacy and security''': Even if raw data stays on devices, model updates can leak sensitive information. Malicious clients may poison the model. Mitigation strategies include secure aggregation, differential privacy, and homomorphic encryption, though these may add computational and communication overhead.<sup>[2]</sup><sup>[3]</sup>

* '''Scalability''': FL must support thousands or millions of clients—many of which may drop out or go offline. Techniques like reliable client selection and hierarchical FL (with edge aggregators) improve scalability.<sup>[4]</sup>

* '''Incentive mechanisms''': Clients may not participate without benefits, especially if training drains battery or bandwidth. Solutions like blockchain-based tokens, credit systems, or reputation scores are being explored, but adoption remains low.<sup>[2]</sup>

* '''Lack of standardization and benchmarks''': FL lacks widely accepted benchmarks and datasets. Simulations often ignore real-world issues like device failure, non-stationarity, or network variability. Frameworks like LEAF, FedML, and Flower offer partial solutions, but more real-world testing is needed.<sup>[2]</sup><sup>[3]</sup>

* '''Concept drift and continual learning''': User data changes over time, which can make models outdated. Continual learning techniques like memory replay, adaptive learning rates, and early stopping help models stay relevant.<sup>[1]</sup><sup>[6]</sup>

* '''Deployment complexity''': Devices run different operating systems, have different hardware specs, and operate on varying network conditions. Managing model updates and ensuring consistent performance is difficult across such diverse platforms.

* '''Reliability and fault tolerance''': Devices may crash, lose connection, or send corrupted updates. FL systems must be resilient and able to recover from partial failures without degrading the global model.

* '''Monitoring and debugging''': In FL, training occurs across thousands of devices, making it hard to monitor model behavior or identify bugs. New tools are needed to improve observability and debugging in distributed systems.
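Research simulations often emulate the non-IID statistical heterogeneity listed above by splitting a labeled dataset across clients with Dirichlet-distributed class proportions. The sketch below is one such partitioner, assuming a single integer label per sample; the function name dirichlet_partition and the concentration parameter alpha are our own choices, and this is a common simulation convention rather than something the cited frameworks prescribe.

```python
import random
from collections import defaultdict
from typing import Dict, List

def dirichlet_partition(labels: List[int], num_clients: int, alpha: float,
                        seed: int = 0) -> Dict[int, List[int]]:
    """Assign sample indices to clients with Dirichlet(alpha) label skew.

    Smaller alpha concentrates each class on fewer clients (more non-IID);
    large alpha approaches an IID split.
    """
    rng = random.Random(seed)
    by_class: Dict[int, List[int]] = defaultdict(list)
    for idx, y in enumerate(labels):
        by_class[y].append(idx)

    parts: Dict[int, List[int]] = {c: [] for c in range(num_clients)}
    for idxs in by_class.values():
        rng.shuffle(idxs)
        # Dirichlet draw via normalized Gamma samples (the stdlib has no dirichlet()).
        draws = [rng.gammavariate(alpha, 1.0) for _ in range(num_clients)]
        total = sum(draws)
        cumulative = 0.0
        start = 0
        for c in range(num_clients):
            cumulative += draws[c] / total
            # The last client takes the remainder so every index is assigned exactly once.
            end = len(idxs) if c == num_clients - 1 else round(cumulative * len(idxs))
            parts[c].extend(idxs[start:end])
            start = end
    return parts


labels = [i % 10 for i in range(1000)]          # toy dataset: 10 balanced classes
parts = dirichlet_partition(labels, num_clients=5, alpha=0.3)
for c, idxs in parts.items():
    present = sorted({labels[i] for i in idxs})
    print(f"client {c}: {len(idxs)} samples, classes {present}")
```

Sweeping alpha from large to small values is a simple way to test how quickly a given aggregation method degrades as client data drifts away from IID.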

= 5.9 References =

# ''Efficient Pruning Strategies for Federated Edge Devices''. Springer Journal, 2023. https://link.springer.com/article/10.1007/s40747-023-01120-5
# ''Federated Learning with Knowledge Distillation''. arXiv preprint, 2020. https://arxiv.org/abs/2007.14513
# ''Communication-Efficient FL using Data Compression''. MDPI Applied Sciences, 2022. https://www.mdpi.com/2076-3417/12/18/9124
# ''Resource-Aware Federated Client Selection''. arXiv preprint, 2021. https://arxiv.org/pdf/2111.01108
# ''Clustered Federated Learning for Heterogeneous Clients''. MDPI Telecom, 2023. https://www.mdpi.com/2673-2688/6/2/30
# ''Early Stopping in FL for Energy-Constrained Devices''. arXiv preprint, 2023. https://arxiv.org/abs/2310.09789

Latest revision as of 03:40, 7 April 2025

5.1 Overview and Motivation

Federated Learning (FL) is a decentralized approach to machine learning where many edge devices—called clients—work together to train a shared model without sending their private data to a central server. Each client trains the model locally using its own data and then sends only the model updates (like gradients or weights) to a central server, often called an aggregator. The server combines these updates to create a new global model, which is then sent back to the clients for the next round of training. This cycle repeats, allowing the model to improve while keeping the raw data on the devices. FL shifts the focus from central data collection to collaborative training, supporting privacy and scalability in machine learning systems.[1]

The main reason for using FL is to address concerns about data privacy, security, and communication efficiency—especially in edge computing, where huge amounts of data are created across many different, often limited, devices. Centralized learning struggles here due to limited bandwidth, high data transfer costs, and strict privacy regulations like the General Data Protection Regulation (GDPR) and the Health Insurance Portability and Accountability Act (HIPAA). FL helps solve these problems by keeping data on the device, reducing the risk of leaks and the need for constant cloud connectivity. It also lowers communication costs by sharing small model updates instead of full datasets, making it ideal for real-time learning in mobile and edge networks.[2]

In edge computing, FL is a major shift in how we do machine learning. It supports distributed intelligence even when devices have limited resources, unreliable connections, or very different types of data (non-IID). Clients can join training sessions at different times, work around network delays, and adjust based on their hardware limitations. This makes FL a flexible option for edge environments with varying battery levels, processing power, and storage. FL can also support personalized models using techniques like federated personalization or clustered aggregation. Overall, it provides a strong foundation for building AI systems that are scalable, private, and better suited for the challenges of modern distributed computing.[1][2][3]

5.2 Federated Learning Architectures

Federated Learning (FL) can be implemented through various architectural configurations, each defining how clients interact, how updates are aggregated, and how trust and responsibility are distributed. These architectures play a central role in determining the scalability, fault tolerance, communication overhead, and privacy guarantees of a federated system. In edge computing environments, where client devices are heterogeneous and network reliability varies, the choice of architecture significantly affects the efficiency and robustness of learning. The three dominant paradigms are centralized, decentralized, and hierarchical architectures. Each of these approaches balances different trade-offs in terms of coordination complexity, system resilience, and resource allocation.

5.2.1 Centralized Architecture

In the centralized FL architecture, a central server or cloud orchestrator is responsible for all coordination, aggregation, and distribution activities. The server begins each round by broadcasting a global model to a selected subset of client devices, which then perform local training using their private data. After completing local updates, clients send their modified model parameters—usually in the form of weight vectors or gradients—back to the server. The server performs aggregation, typically using algorithms such as Federated Averaging (FedAvg), and sends the updated global model to the clients for the next round of training.
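A single centralized round (broadcast, local training on a sampled subset of clients, dataset-size-weighted averaging) can be sketched end to end with a one-parameter linear model. Everything here is a toy assumption: the model y = w * x, the synthetic client data, and the helper names local_train, fedavg_round, and make_client are hypothetical, and real deployments exchange full weight tensors over a network rather than Python floats.

```python
import random
from typing import List, Tuple

Dataset = List[Tuple[float, float]]  # (x, y) pairs that never leave the client

def local_train(w: float, data: Dataset, lr: float = 0.05, epochs: int = 5) -> float:
    """Client: a few epochs of SGD on squared error for the toy model y = w * x."""
    for _ in range(epochs):
        for x, y in data:
            grad = 2 * (w * x - y) * x
            w -= lr * grad
    return w

def fedavg_round(w_global: float, clients: List[Dataset], fraction: float,
                 rng: random.Random) -> float:
    """Server: broadcast, collect local models, and average them weighted by data size."""
    k = max(1, int(fraction * len(clients)))
    selected = rng.sample(clients, k)              # subset of clients for this round
    results = [(local_train(w_global, data), len(data)) for data in selected]
    n_total = sum(n for _, n in results)
    return sum(w * n for w, n in results) / n_total

def make_client(rng: random.Random, true_w: float, n: int) -> Dataset:
    xs = [rng.uniform(-1.0, 1.0) for _ in range(n)]
    return [(x, true_w * x) for x in xs]


rng = random.Random(42)
clients = [make_client(rng, 3.0, rng.randint(5, 20)) for _ in range(10)]
w = 0.0
for _ in range(30):
    w = fedavg_round(w, clients, fraction=0.3, rng=rng)
print(round(w, 3))  # close to the true weight 3.0
```

Each client returns only its locally trained weight and its sample count; the raw (x, y) pairs stay inside local_train.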

The centralized model is appealing for its simplicity and compatibility with existing cloud-to-client infrastructures. It is relatively easy to deploy, manage, and scale in environments with stable connectivity and limited client churn. However, its reliance on a single server introduces critical vulnerabilities. The server becomes a bottleneck under high communication loads and a single point of failure if it experiences downtime or compromise. Furthermore, this architecture requires clients to trust the central aggregator with metadata, model parameters, and access scheduling. In privacy-sensitive or high-availability contexts, these limitations can restrict centralized FL’s applicability.[1]

5.2.2 Decentralized Architecture

Decentralized FL removes the need for a central server altogether. Instead, client devices interact directly with each other to share and aggregate model updates. These peer-to-peer (P2P) networks may operate using structured overlays, such as ring topologies or blockchain systems, or employ gossip-based protocols for stochastic update dissemination. In some implementations, clients collaboratively compute weighted averages or perform federated consensus to update the global model in a distributed fashion.

This architecture significantly enhances system robustness, resilience, and trust decentralization. There is no single point of failure, and the absence of a central coordinator eliminates risks of aggregator bias or compromise. Moreover, decentralized FL suits settings where participants belong to different organizations or jurisdictions and cannot rely on a neutral third party. However, these benefits come at the cost of increased communication overhead, complex synchronization requirements, and difficulties in managing convergence, especially under non-identical data distributions and asynchronous updates. Protocols for secure communication, update verification, and identity authentication are necessary to prevent malicious behavior and ensure model integrity. Due to these complexities, decentralized FL is an active area of research and is best suited for scenarios requiring strong autonomy and fault tolerance.[2]
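The gossip-based variant mentioned above can be sketched on a ring of peers, where each node repeatedly averages its model with its two neighbors and no node ever collects the full set of models. This is a minimal synchronous sketch under assumed conditions (fixed ring topology, at least three nodes, equal 1/3 mixing weights, no local training between gossip steps); the function name gossip_round_ring is hypothetical.

```python
from typing import List

def gossip_round_ring(models: List[List[float]]) -> List[List[float]]:
    """Each node replaces its model with the mean of itself and its two ring neighbors.

    The mixing weights (1/3 each for self, left, right) form a doubly stochastic
    matrix, so the network-wide average is preserved and every node converges to it.
    """
    n = len(models)
    new_models = []
    for i in range(n):
        left, right = models[(i - 1) % n], models[(i + 1) % n]
        new_models.append([(a + b + c) / 3 for a, b, c in zip(left, models[i], right)])
    return new_models


models = [[float(i)] for i in range(5)]          # five peers, one-parameter models
for _ in range(50):
    models = gossip_round_ring(models)
print([round(m[0], 6) for m in models])          # every peer approaches the mean, 2.0
```

In a real deployment each node would interleave local training steps with these exchanges, which is where the convergence difficulties under non-IID data and asynchrony arise.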

5.2.3 Hierarchical Architecture

Hierarchical FL is a hybrid architecture that introduces one or more intermediary layers—often called edge servers or aggregators—between clients and the global coordinator. In this model, clients are organized into logical or geographical groups, with each group connected to an edge server. Clients send their local model updates to their respective edge aggregator, which performs preliminary aggregation. The edge servers then send their aggregated results to the cloud server, where final aggregation occurs to produce the updated global model.

This multi-tiered architecture is designed to address the scalability and efficiency challenges inherent in centralized systems while avoiding the coordination overhead of full decentralization. Hierarchical FL is especially well-suited for edge computing environments where data, clients, and compute resources are distributed across structured clusters, such as hospitals within a healthcare network or base stations in a telecommunications infrastructure.

One of the key advantages of hierarchical FL is communication optimization. By aggregating locally at edge nodes, the amount of data transmitted over wide-area networks is significantly reduced. Additionally, this model supports region-specific model personalization by allowing edge servers to maintain specialized sub-models adapted to local client behavior. Hierarchical FL also enables asynchronous and fault-tolerant training by isolating disruptions within specific clusters. However, this architecture still depends on reliable edge aggregators and introduces new challenges in cross-layer consistency, scheduling, and privacy preservation across multiple tiers.[1][3]
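The two-tier aggregation described above can be sketched as nested weighted averages. The sketch assumes each edge server forwards its cluster's total sample count along with its averaged model; under that assumption the cloud result matches flat FedAvg over all clients, while the cloud receives one message per edge server instead of one per client. The cluster names and helper names are hypothetical.

```python
from typing import Dict, List, Tuple

Update = Tuple[List[float], int]   # (model weights, number of samples behind them)

def weighted_average(updates: List[Update]) -> Update:
    """Sample-count-weighted mean of models, returned with the combined sample count."""
    total = sum(n for _, n in updates)
    dim = len(updates[0][0])
    avg = [sum(w[i] * n for w, n in updates) / total for i in range(dim)]
    return avg, total

def hierarchical_aggregate(clusters: Dict[str, List[Update]]) -> List[float]:
    """Edge servers average their own clients; the cloud averages the edge results."""
    edge_results = [weighted_average(client_updates) for client_updates in clusters.values()]
    global_w, _ = weighted_average(edge_results)
    return global_w


clusters = {
    "edge_a": [([1.0, 0.0], 10), ([3.0, 2.0], 30)],   # two clients behind edge server A
    "edge_b": [([0.0, 4.0], 60)],                     # one client behind edge server B
}
print(hierarchical_aggregate(clusters))  # [1.0, 3.0]
```

If an edge server forwarded only its averaged model without the sample count, the cloud would have to weight clusters equally, which would no longer match flat FedAvg when cluster sizes differ.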

5.3 Aggregation Algorithms and Communication Efficiency

Aggregation is a fundamental operation in Federated Learning (FL), where updates from multiple edge clients are merged to form a new global model. The quality, stability, and efficiency of the FL process depend heavily on the aggregation strategy employed. In edge environments characterized by device heterogeneity and non-identical data distributions, choosing the right aggregation algorithm is essential to ensure reliable convergence and effective collaboration.

5.3.1 Key Aggregation Algorithms

| Algorithm | Description | Handles Non-IID Data | Server-Side Optimization | Typical Use Case |
|---|---|---|---|---|
| FedAvg | Performs weighted averaging of client models based on dataset size. Simple and communication-efficient. | Limited | No | Basic federated setups with IID or mildly non-IID data. |
| FedProx | Adds a proximal term to the local loss function to prevent client drift. Stabilizes training with diverse data. | Yes | No | Suitable for edge deployments with high data heterogeneity or resource-limited clients. |
| FedOpt | Applies adaptive optimizers (e.g., FedAdam, FedYogi) on aggregated updates. Enhances convergence in dynamic systems. | Yes | Yes | Used in large-scale systems or settings with unstable participation and gradient variability. |

Aggregation is the cornerstone of FL, where locally computed model updates from edge devices are combined into a global model. The most widely adopted aggregation method is Federated Averaging (FedAvg), introduced in the foundational work by McMahan et al.[1] FedAvg averages the model parameters received from participating clients, typically weighted by the size of each client’s local dataset. This simple yet powerful method reduces the frequency of communication by allowing each device to perform multiple local training steps before sending its updated model parameters to the server. However, FedAvg works best when data across clients is balanced and independent and identically distributed (IID), conditions rarely satisfied in edge computing environments, where client datasets are often highly non-IID, sparse, or skewed.[2]
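Written out in our own notation (not taken from the cited work), one FedAvg round with selected client set S_t, local sample counts n_k, and locally trained models w_k^{t+1}, each started from the global model w^t, computes:

```latex
w^{t+1} \;=\; \sum_{k \in S_t} \frac{n_k}{n_{S_t}}\, w_k^{t+1},
\qquad
n_{S_t} \;=\; \sum_{k \in S_t} n_k
```

For example, two clients holding 100 and 300 samples that return scalar weights 0.2 and 0.6 give (100/400)(0.2) + (300/400)(0.6) = 0.05 + 0.45 = 0.5, so the larger client pulls the global model toward its own.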

To address FedAvg's limitations under non-IID data, several advanced aggregation algorithms have been proposed. One notable extension is FedProx, which modifies the local optimization objective by adding a proximal term that penalizes large deviations from the global model. This constrains local training and improves stability in heterogeneous data scenarios. FedProx also allows flexible participation by clients with limited resources or intermittent connectivity, making it more robust in practical edge deployments.
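FedProx's proximal term can be illustrated with a toy quadratic loss. The sketch assumes the local objective F_k(w) + (mu / 2) * ||w - w_global||^2 optimized by plain gradient descent; the function name fedprox_local_update and the one-dimensional example are hypothetical.

```python
from typing import List

Vector = List[float]

def fedprox_local_update(w_global: Vector, grad_fk, mu: float,
                         lr: float, steps: int) -> Vector:
    """Gradient descent on h_k(w) = F_k(w) + (mu / 2) * ||w - w_global||^2."""
    w = list(w_global)
    for _ in range(steps):
        g = grad_fk(w)
        # The proximal gradient mu * (w - w_global) pulls w back toward the global model.
        w = [wi - lr * (gi + mu * (wi - wg)) for wi, gi, wg in zip(w, g, w_global)]
    return w


# Toy client whose own optimum is c = [4.0]: F_k(w) = 0.5 * ||w - c||^2.
c = [4.0]

def grad_fk(w: Vector) -> Vector:
    return [wi - ci for wi, ci in zip(w, c)]

for mu in (0.0, 1.0):
    w_k = fedprox_local_update([0.0], grad_fk, mu=mu, lr=0.1, steps=500)
    print(mu, round(w_k[0], 4))  # mu=0 -> 4.0 (plain local SGD), mu=1 -> 2.0
```

With mu = 0 the client drifts all the way to its own optimum; with mu = 1 it settles at c / (1 + mu), halfway back toward the global model, which is the drift-limiting effect described above.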

Another family of aggregation algorithms is FedOpt, which includes adaptive server-side optimization techniques such as FedAdam and FedYogi. These algorithms build on optimization methods used in centralized training and apply them at the aggregation level, enabling faster convergence and improved generalization under complex, real-world data distributions. Collectively, these variants of aggregation address critical FL challenges such as slow convergence, client drift, and update divergence due to heterogeneity in both data and device capabilities.[1][3]
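A FedAdam-style server step can be sketched by treating the averaged client delta as a pseudo-gradient and feeding it to an Adam-like update on the server. This is a simplified illustration under stated assumptions: the class name FedAdamServer and the hyperparameter defaults are hypothetical, and Adam's bias correction is omitted here for brevity.

```python
import math
from typing import List

class FedAdamServer:
    """Server-side Adam applied to the averaged client delta (a 'pseudo-gradient')."""

    def __init__(self, w: List[float], lr: float = 0.1, beta1: float = 0.9,
                 beta2: float = 0.99, tau: float = 1e-3):
        self.w = list(w)
        self.lr, self.beta1, self.beta2, self.tau = lr, beta1, beta2, tau
        self.m = [0.0] * len(w)   # first-moment estimate of the pseudo-gradient
        self.v = [0.0] * len(w)   # second-moment estimate of the pseudo-gradient

    def step(self, client_models: List[List[float]]) -> List[float]:
        k = len(client_models)
        # Mean of (client model - global model): the direction clients moved in.
        delta = [sum(cm[i] for cm in client_models) / k - self.w[i]
                 for i in range(len(self.w))]
        for i, d in enumerate(delta):
            self.m[i] = self.beta1 * self.m[i] + (1 - self.beta1) * d
            self.v[i] = self.beta2 * self.v[i] + (1 - self.beta2) * d * d
            # tau keeps the step finite when v is near zero.
            self.w[i] += self.lr * self.m[i] / (math.sqrt(self.v[i]) + self.tau)
        return self.w


server = FedAdamServer([0.0, 0.0])
# Both clients moved the first weight up and the second weight down.
print([round(x, 3) for x in server.step([[1.0, -0.5], [1.0, -0.5]])])
```

Because the step is normalized by the running second moment, coordinates whose deltas are consistently small still make progress, which is one reason adaptive server optimizers can help under heterogeneous participation. FedYogi differs mainly in how v is updated.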

5.3.2 Communication Efficiency in Edge-Based FL

Communication remains one of the most critical bottlenecks in deploying FL at the edge, where devices often suffer from limited bandwidth, intermittent connectivity, and energy constraints. To address this, several strategies have been developed:

- Gradient quantization: Reduces the size of transmitted updates by lowering numerical precision (e.g., from 32-bit to 8-bit values).

- Gradient sparsification: Limits communication to only the most significant changes in the model, transmitting top-k updates while discarding negligible ones.

- Local update batching: Allows devices to perform multiple rounds of local training before sending updates, reducing the frequency of synchronization.
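The first two techniques in the list can be sketched on a plain list of floats. The code assumes symmetric 8-bit quantization with a single per-update scale and top-k selection by absolute value; the helper names are hypothetical, and production systems typically also keep the residual that sparsification drops (error feedback), which is not shown.

```python
from typing import List, Tuple

def quantize_int8(update: List[float]) -> Tuple[List[int], float]:
    """Symmetric 8-bit quantization: send small integers plus one float scale."""
    max_abs = max((abs(u) for u in update), default=0.0)
    scale = max_abs / 127 if max_abs > 0 else 1.0
    q = [max(-127, min(127, round(u / scale))) for u in update]
    return q, scale

def dequantize_int8(q: List[int], scale: float) -> List[float]:
    return [qi * scale for qi in q]

def top_k_sparsify(update: List[float], k: int) -> List[Tuple[int, float]]:
    """Keep only the k largest-magnitude entries, sent as (index, value) pairs."""
    ranked = sorted(range(len(update)), key=lambda i: abs(update[i]), reverse=True)
    return [(i, update[i]) for i in sorted(ranked[:k])]

def densify(pairs: List[Tuple[int, float]], size: int) -> List[float]:
    """Server side: rebuild a dense update with zeros for the dropped entries."""
    out = [0.0] * size
    for i, v in pairs:
        out[i] = v
    return out


update = [0.5, -1.27, 0.01, 0.9]
q, scale = quantize_int8(update)
print(q, scale)                      # one byte per entry on the wire, plus the scale
print(top_k_sparsify(update, k=2))   # [(1, -1.27), (3, 0.9)]
```

Quantization keeps every coordinate at reduced precision (error at most scale / 2 per entry), while sparsification keeps a few coordinates at full precision; the two are often combined.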

Further, client selection strategies dynamically choose a subset of devices to participate in each round, based on criteria like availability, data quality, hardware capacity, or trust level. These communication optimizations are crucial for ensuring that FL remains scalable, efficient, and deployable across millions of edge nodes without overloading the network or draining device batteries.[1][2][3]
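A resource- and trust-aware selection rule might look like the following. The ClientInfo fields, the battery cutoff, and the equal-weight scoring formula are all hypothetical choices made for illustration; real systems derive these signals from device telemetry and often add randomness so that low-resource clients are not permanently excluded.

```python
from dataclasses import dataclass
from typing import List

@dataclass
class ClientInfo:
    client_id: str
    available: bool        # e.g. idle, charging, on an unmetered network
    battery: float         # 0.0 to 1.0
    bandwidth_mbps: float
    trust: float           # 0.0 to 1.0, e.g. derived from past update quality

def select_clients(clients: List[ClientInfo], m: int,
                   min_battery: float = 0.3) -> List[str]:
    """Pick up to m eligible clients, preferring well-resourced, trusted ones."""
    eligible = [c for c in clients if c.available and c.battery >= min_battery]
    max_bw = max((c.bandwidth_mbps for c in eligible), default=1.0) or 1.0

    def score(c: ClientInfo) -> float:
        # Equal weighting of battery, normalized bandwidth, and trust (an arbitrary choice).
        return (c.battery + c.bandwidth_mbps / max_bw + c.trust) / 3

    ranked = sorted(eligible, key=score, reverse=True)
    return [c.client_id for c in ranked[:m]]


pool = [
    ClientInfo("a", True, 0.9, 50.0, 0.9),
    ClientInfo("b", True, 0.2, 80.0, 1.0),    # filtered out: battery below cutoff
    ClientInfo("c", False, 1.0, 100.0, 1.0),  # filtered out: unavailable
    ClientInfo("d", True, 0.5, 10.0, 0.5),
]
print(select_clients(pool, m=2))  # ['a', 'd']
```

A purely greedy rule like this one can systematically under-represent slower devices and the data they hold, which interacts with the non-IID concerns discussed earlier.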

5.4 Privacy Mechanisms

Privacy and data confidentiality are central design goals of Federated Learning (FL), particularly in edge computing scenarios where numerous edge and IoT devices (e.g., hospital servers, autonomous vehicles) gather sensitive data. Although FL does not require the raw data to leave each client’s device, model updates can still leak private information or be correlated to individual data points. To address these challenges, various privacy-preserving mechanisms have been proposed in the literature.[1][2][3]

5.4.1 Differential Privacy (DP)

Differential Privacy (DP) is a formal framework that bounds how much the model’s outputs (e.g., parameter updates) can reveal about any individual record. In FL, DP typically involves clipping each client's update to a fixed norm and then injecting calibrated noise into the gradients or model weights. The noise is calibrated so that the global model’s performance remains acceptable, yet attackers cannot reliably infer whether any single data sample was present in the training set.

A step-by-step timeline of DP in an FL context can be summarized as follows:

- Clients fetch the global model and compute local gradients.

- Before transmitting, clients clip their updates to a fixed norm bound and add randomized noise calibrated to that bound, masking the contribution of any specific data pattern.

- The central server aggregates the noisy gradients to produce a new global model.

- Clients download the updated global model for further local training.

By carefully tuning the “privacy budget” (ε and δ), DP can balance privacy against model utility.[1][4]
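The clip-then-noise step that the timeline places on each client can be sketched as follows. It assumes L2 clipping followed by Gaussian noise with standard deviation noise_multiplier * clip_norm; the function names are hypothetical, and converting a noise multiplier and a number of rounds into a concrete (ε, δ) budget requires a separate privacy accountant that is not shown.

```python
import math
import random
from typing import List

def clip_update(update: List[float], clip_norm: float) -> List[float]:
    """Scale the update down so its L2 norm is at most clip_norm (bounds sensitivity)."""
    norm = math.sqrt(sum(u * u for u in update))
    factor = min(1.0, clip_norm / norm) if norm > 0 else 1.0
    return [u * factor for u in update]

def privatize_update(update: List[float], clip_norm: float,
                     noise_multiplier: float, rng: random.Random) -> List[float]:
    """Client-side step: clip, then add Gaussian noise with std noise_multiplier * clip_norm."""
    clipped = clip_update(update, clip_norm)
    sigma = noise_multiplier * clip_norm
    return [u + rng.gauss(0.0, sigma) for u in clipped]


rng = random.Random(0)
raw = [3.0, 4.0]                                  # L2 norm 5.0
print(clip_update(raw, clip_norm=1.0))            # rescaled to norm 1.0, about [0.6, 0.8]
noisy = privatize_update(raw, clip_norm=1.0, noise_multiplier=1.1, rng=rng)
print(noisy)                                      # what actually leaves the device
```

Clipping is what makes the noise meaningful: without a bound on each update's norm, no fixed noise level could hide a single client's contribution.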

5.4.2 Secure Aggregation

Secure Aggregation, or SecAgg, is a protocol that encrypts local updates before they are sent to the server, ensuring that only the aggregated result is revealed. A typical SecAgg workflow includes:

- Each client randomly splits its model updates into multiple shares.

- These shares are exchanged among clients and the server in a way that no single party sees the entirety of any update.

- The server only obtains the sum of all client updates, rather than individual parameters.

This approach can thwart internal adversaries who might try to reconstruct local data from raw updates.[2] SecAgg is crucial for preserving confidentiality, especially in IoT-based FL systems where data privacy regulations (such as GDPR and HIPAA) prohibit raw data exposure.
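The share-based workflow above can be illustrated with additive secret sharing over a prime field. This is a simplified honest-but-curious sketch, not the full SecAgg protocol: it assumes every client stays online, treats each update as a single real number encoded in fixed point, and omits the authenticated channels, key agreement, and dropout recovery that practical protocols require. PRIME, SCALE, and the helper names are illustrative choices.

```python
import secrets
from typing import List

PRIME = 2**61 - 1   # field modulus (a Mersenne prime); all share arithmetic is mod PRIME
SCALE = 10**6       # fixed-point scale for encoding real-valued updates as integers

def encode(x: float) -> int:
    return round(x * SCALE) % PRIME

def decode(v: int) -> float:
    if v > PRIME // 2:          # the upper half of the field represents negative numbers
        v -= PRIME
    return v / SCALE

def split_into_shares(value: int, n: int) -> List[int]:
    """Any n - 1 shares are uniformly random; all n together sum to value mod PRIME."""
    shares = [secrets.randbelow(PRIME) for _ in range(n - 1)]
    shares.append((value - sum(shares)) % PRIME)
    return shares

def secure_sum(client_values: List[float]) -> float:
    n = len(client_values)
    # inbox[j] collects the j-th share of every client's value (e.g. party j = client j).
    inbox: List[List[int]] = [[] for _ in range(n)]
    for value in client_values:
        for j, share in enumerate(split_into_shares(encode(value), n)):
            inbox[j].append(share)
    # Each party reveals only the sum of the shares it holds.
    partial_sums = [sum(shares) % PRIME for shares in inbox]
    # The server combines the partial sums and learns only the total.
    return decode(sum(partial_sums) % PRIME)


print(secure_sum([0.25, -1.5, 2.0]))  # 0.75
```

For a real model the same procedure runs independently on every coordinate of the update vector.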

5.4.3 Homomorphic Encryption and SMPC

Homomorphic Encryption (HE) supports computations on encrypted data without the need for decryption. In FL, a homomorphically encrypted gradient can be aggregated securely by the server, preventing it from seeing cleartext updates. This approach, however, introduces higher computational overhead, which can be burdensome for resource-limited IoT edge devices.[3]
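The additive property that lets a server aggregate ciphertexts can be demonstrated with textbook Paillier encryption. The sketch below is deliberately insecure (tiny hard-coded primes, integer plaintexts only) and the helper names are hypothetical; it only shows that multiplying ciphertexts yields an encryption of the sum. Who holds the private key in an FL deployment is a separate design decision not addressed here.

```python
import math
import secrets
from typing import Tuple

def keygen(p: int, q: int) -> Tuple[int, Tuple[int, int]]:
    """Textbook Paillier keys from two primes (toy sizes; NOT secure)."""
    n = p * q
    lam = (p - 1) * (q - 1) // math.gcd(p - 1, q - 1)  # lcm(p - 1, q - 1)
    mu = pow(lam, -1, n)        # inverse of lam mod n; valid because we fix g = n + 1
    return n, (lam, mu)

def encrypt(n: int, m: int) -> int:
    """Encrypt an integer 0 <= m < n under public key n (with g = n + 1)."""
    n2 = n * n
    while True:
        r = secrets.randbelow(n - 1) + 1   # random r in [1, n - 1], must be coprime to n
        if math.gcd(r, n) == 1:
            break
    # g^m mod n^2 equals 1 + m*n when g = n + 1.
    return ((1 + m * n) * pow(r, n, n2)) % n2

def decrypt(n: int, priv: Tuple[int, int], c: int) -> int:
    lam, mu = priv
    x = pow(c, lam, n * n)
    return ((x - 1) // n) * mu % n

def add_encrypted(n: int, c1: int, c2: int) -> int:
    """Multiplying ciphertexts mod n^2 adds the underlying plaintexts mod n."""
    return (c1 * c2) % (n * n)


n, priv = keygen(1009, 1013)                 # toy primes chosen for readability
c_total = add_encrypted(n, encrypt(n, 17), encrypt(n, 25))
print(decrypt(n, priv, c_total))             # 42
```

The modular exponentiations in encrypt and decrypt are what make HE expensive; with realistic key sizes (thousands of bits) and one ciphertext per parameter, this cost is the overhead the paragraph above warns about for IoT devices.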

Secure Multi-Party Computation (SMPC) is a related set of techniques that enables multiple parties to perform joint computations on secret inputs. In the context of FL, SMPC allows clients to compute sums of model updates without revealing individual updates. Although performance optimizations exist, SMPC remains challenging for large-scale models with millions of parameters.[1][5]

5.4.4 IoT-Specific Considerations

In edge computing, IoT devices often capture highly sensitive data (patient records, vehicle sensor logs, etc.). Privacy measures must therefore operate seamlessly on low-power hardware while accommodating intermittent connectivity. For instance, a smart healthcare device storing patient records may use DP-based local training and SecAgg to encrypt updates before uploading. Meanwhile, an autonomous vehicle might adopt HE to guard sensor patterns relevant to real-time traffic analysis.

Together, these techniques form a multi-layered privacy defense tailored for distributed, resource-constrained IoT ecosystems.[4][5]

Adapted from Privacy-Preserving Federated Learning Using Homomorphic Encryption.

5.5 Security Threats

While Federated Learning (FL) enhances data privacy by ensuring that raw data remains on edge devices, it introduces significant security vulnerabilities due to its decentralized design and reliance on untrusted participants. In edge computing environments, where clients often operate with limited computational power and over unreliable networks, these threats are particularly pronounced.

5.5.1 Model Poisoning Attacks

Model poisoning attacks are a critical threat in FL, especially in edge computing environments where the infrastructure is distributed and clients may be untrusted or loosely regulated. In this type of attack, malicious clients intentionally craft and submit harmful model updates during training to compromise the performance or integrity of the global model.

These attacks are typically categorized as untargeted or targeted. Untargeted attacks aim to degrade general model accuracy. Targeted attacks (backdoor attacks) manipulate the global model to behave incorrectly in specific scenarios while appearing normal in others. For instance, an attacker might train its local model on inputs that are stamped with a backdoor trigger, such as a specific pixel pattern in an image, and relabeled to a class of its choosing. The global model then misclassifies inputs containing that pattern, even though it performs well on standard test cases.[1][4]
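To make the backdoor example concrete, the sketch below shows the data-side step a malicious client might take before local training. It stamps a small pixel patch onto a fraction of the client's images and relabels those images as the attacker's target class. The following are all assumptions:

- the 3x3 bottom-right patch;
- the poisoning fraction;
- the (N, H, W) image layout with values in [0, 1];
- the function name.

Code like this is mainly useful for reproducing the threat when evaluating defenses.

```python
import numpy as np


def stamp_trigger(images, labels, target_label, fraction=0.1, rng=None):
    """Stamp a 3x3 white patch on a random subset of images and relabel them.

    images: float array of shape (N, H, W) with values in [0, 1] (assumption).
    Returns poisoned copies plus the indices that were modified.
    """
    rng = np.random.default_rng() if rng is None else rng
    images, labels = images.copy(), labels.copy()
    n_poison = int(len(images) * fraction)
    idx = rng.choice(len(images), size=n_poison, replace=False)
    images[idx, -3:, -3:] = 1.0   # trigger in the bottom-right corner
    labels[idx] = target_label
    return images, labels, idx


x = np.zeros((100, 28, 28))
y = np.arange(100) % 10
px, py, idx = stamp_trigger(x, y, target_label=7, fraction=0.1,
                            rng=np.random.default_rng(0))
print(len(idx), py[idx])  # 10 poisoned examples, all labeled 7
```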

FL's reliance on aggregation algorithms like Federated Averaging (FedAvg), which compute a (typically sample-weighted) average of local updates, makes it susceptible to these attacks. Every update enters the average linearly, so a single sufficiently large malicious update can shift the global model arbitrarily far. Because raw data is never shared, poisoned updates can be hard to detect, especially in non-IID settings where variability between clients is expected.

Robust aggregation techniques like Krum, Trimmed Mean, and Bulyan have been proposed to resist such manipulation by filtering or down-weighting outlier contributions. However, these algorithms often introduce computational and communication overheads that are impractical for edge devices with limited power and processing capabilities.[2][4] Furthermore, adversaries can design subtle attacks that mimic benign statistical patterns, making them indistinguishable from legitimate updates. Emerging research explores anomaly detection based on update similarity and trust scoring. Yet these solutions face limitations when applied to large-scale or asynchronous FL deployments. Developing lightweight, real-time, and scalable defenses that remain effective under device heterogeneity and unreliable network conditions remains an unresolved challenge.[3][4]
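A small NumPy sketch shows how sensitive plain averaging is, and how robust rules limit the damage. `fedavg`, `coordinate_median`, `trimmed_mean`, and `krum` are simplified, hypothetical implementations of the named rules. Krum follows its usual definition: it picks the update with the smallest summed squared distance to its n - f - 2 nearest neighbours, where f is the assumed number of attackers. Bulyan is omitted.

```python
import numpy as np


def fedavg(updates, weights=None):
    """Plain (optionally sample-weighted) average of client updates."""
    return np.average(updates, axis=0, weights=weights)


def coordinate_median(updates):
    return np.median(updates, axis=0)


def trimmed_mean(updates, trim_k):
    """Per coordinate, drop the trim_k largest and smallest values, then average."""
    ordered = np.sort(updates, axis=0)
    return ordered[trim_k:len(updates) - trim_k].mean(axis=0)


def krum(updates, n_byzantine):
    """Pick the update closest (summed squared distance) to its n - f - 2 nearest peers."""
    n = len(updates)
    dists = np.sum((updates[:, None, :] - updates[None, :, :]) ** 2, axis=-1)
    m = n - n_byzantine - 2
    scores = [np.sort(np.delete(dists[i], i))[:m].sum() for i in range(n)]
    return updates[int(np.argmin(scores))]


honest = np.array([[1.0, 1.0], [1.1, 0.9], [0.9, 1.1], [1.0, 1.05]])
malicious = np.array([[100.0, -100.0]])
updates = np.vstack([honest, malicious])

print(fedavg(updates))              # dragged far from the honest cluster
print(coordinate_median(updates))   # stays near [1, 1]
print(trimmed_mean(updates, 1))     # stays near [1, 1]
print(krum(updates, 1))             # selects one of the honest updates
```

With one extreme update among five, the mean moves far away from the honest cluster, while the other three rules stay close to it. Krum computes all pairwise distances, which is quadratic in the number of clients. This is one concrete source of the overhead described above.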

5.5.2 Data Poisoning Attacks

Data poisoning attacks target the integrity of FL by manipulating the training data on individual clients before model updates are generated. Unlike model poisoning, which corrupts the gradients or weights directly, data poisoning occurs at the dataset level—allowing adversaries to stealthily influence model behavior through biased or malicious data. This includes techniques such as label flipping, outlier injection, or clean-label attacks that subtly alter data without visible artifacts. Since FL assumes client data remains private and uninspected, such poisoned data can easily propagate harmful patterns into the global model, especially in non-IID edge scenarios.[2][3]
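Label flipping, the simplest of these techniques, can be sketched in a few lines. A compromised client relabels some or all examples of one class as another class before training locally. The function name and parameters are hypothetical. The resulting model update comes from an otherwise ordinary training run, which is why it can be hard to tell apart from honest non-IID updates.

```python
import numpy as np


def flip_labels(labels, source, target, fraction=1.0, rng=None):
    """Relabel a fraction of class-`source` examples as class `target`."""
    rng = np.random.default_rng() if rng is None else rng
    labels = labels.copy()
    candidates = np.flatnonzero(labels == source)
    n_flip = int(round(len(candidates) * fraction))
    if n_flip == 0:
        return labels
    chosen = rng.choice(candidates, size=n_flip, replace=False)
    labels[chosen] = target
    return labels


y = np.array([0, 1, 2, 1, 1, 0])
print(flip_labels(y, source=1, target=7))  # [0 7 2 7 7 0]
```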

Edge devices are especially vulnerable to this form of attack due to their limited compute and energy resources, which often preclude comprehensive input validation. The highly diverse and fragmented nature of edge data—like medical readings or driving behavior—makes it difficult to establish a clear baseline for anomaly detection. Defense strategies include robust aggregation (e.g., Median, Trimmed Mean), anomaly detection, and Differential Privacy (DP). However, these methods can reduce model accuracy or increase complexity.[1][4] There is currently no foolproof solution to detect data poisoning without violating privacy principles. As FL continues to be deployed in critical domains, mitigating these attacks while preserving user data locality and system scalability remains an open and urgent research challenge.[3][4]

5.5.3 Inference and Membership Attacks

Inference attacks represent a subtle yet powerful class of threats in FL, where adversaries seek to extract sensitive information from shared model updates rather than raw data. These attacks exploit the iterative nature of FL training. By analyzing updates—especially in over-parameterized models—attackers can infer properties of the data or even reconstruct inputs. A key example is the membership inference attack, where an adversary determines if a specific data point was used in training. This becomes more effective in edge scenarios, where updates often correlate strongly with individual devices.[2][3]
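A basic form of membership inference, the loss-threshold attack, can be sketched as follows. The adversary guesses that an example was a training member if the model's loss on it is unusually low. The sketch assumes the attacker can query a model for class probabilities, for instance a global model received during FL. It also assumes the attacker has chosen a threshold, e.g. by calibrating it on data known to be outside the training set. The function names are illustrative.

```python
import numpy as np


def per_example_loss(probs, labels):
    """Cross-entropy of each example's true label under the model's probabilities."""
    true_class_probs = probs[np.arange(len(labels)), labels]
    return -np.log(np.clip(true_class_probs, 1e-12, 1.0))


def loss_threshold_attack(probs, labels, threshold):
    """Guess 'member' (True) when the loss is below the threshold.

    Models usually fit their training examples more tightly than unseen ones,
    so unusually low loss hints that an example was in the training set.
    """
    return per_example_loss(probs, labels) < threshold


probs = np.array([[0.95, 0.05], [0.60, 0.40], [0.10, 0.90], [0.45, 0.55]])
labels = np.array([0, 0, 1, 0])
print(loss_threshold_attack(probs, labels, threshold=0.2))  # [ True False  True False]
```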

As model complexity increases, so does the risk of gradient-based information leakage. Small datasets on edge devices amplify this vulnerability. Attackers with access to multiple rounds of updates may perform gradient inversion to reconstruct training inputs. These risks are especially serious in sensitive fields like healthcare. Mitigations include Differential Privacy, which typically reduces model accuracy, and Secure Aggregation, which adds computation and communication overhead.[4] Designing FL systems that preserve utility while protecting against inference remains a major ongoing challenge.[1][4]
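The simplest case shows why a single example's gradient can leak its input: one fully connected layer with a bias. For y = Wx + b, the gradients satisfy dL/dW = (dL/dy) x^T and dL/db = dL/dy. Dividing any row of the weight gradient by the matching bias-gradient entry therefore returns x exactly. The NumPy sketch below assumes a squared-error loss and a batch of one. For deep networks and larger batches, gradient inversion instead relies on iterative optimization, which is not shown.

```python
import numpy as np

rng = np.random.default_rng(0)
d_in, d_out = 5, 3
W = rng.normal(size=(d_out, d_in))
b = rng.normal(size=d_out)

# One private training example on the client (batch size 1)
x = rng.normal(size=d_in)
target = rng.normal(size=d_out)

# Forward pass with squared-error loss L = 0.5 * ||W x + b - target||^2
y = W @ x + b
dL_dy = y - target

# Gradients the client would share in this round
grad_W = np.outer(dL_dy, x)   # dL/dW = (dL/dy) x^T
grad_b = dL_dy                # dL/db = dL/dy

# Attacker: any row i with grad_b[i] != 0 gives x = grad_W[i] / grad_b[i]
i = int(np.argmax(np.abs(grad_b)))
x_reconstructed = grad_W[i] / grad_b[i]
print(np.allclose(x_reconstructed, x))  # True
```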

5.5.4 Sybil and Free-Rider Attacks

Sybil attacks occur when a single adversary creates multiple fake clients (Sybil nodes) to manipulate the FL process. These clients may collude to skew aggregation results or overwhelm honest participants. This is especially dangerous in decentralized or large-scale FL environments, where authentication is weak or absent.[1] Without strong identity verification, an attacker can inject numerous poisoned updates, degrading model accuracy or blocking convergence entirely.

Traditional defenses like IP throttling or user registration may violate privacy principles or be infeasible at the edge. Cryptographic registration, proof-of-work, or client reputation scoring have been explored, but each has trade-offs. Clustering and anomaly detection can identify Sybil patterns, though adversaries may adapt their behavior to avoid detection.[4]
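One simple similarity heuristic in this spirit is sketched below. It computes pairwise cosine similarity between client updates and flags clients whose update is nearly parallel to another client's. The 0.99 threshold and the function name are arbitrary assumptions. This is an illustrative check rather than any specific published defense. As noted above, adaptive Sybils can add noise to evade it.

```python
import numpy as np


def flag_similar_clients(updates, threshold=0.99):
    """Return indices of clients whose update is nearly parallel to another client's.

    Sybils pushing one shared poisoned direction tend to look alike, whereas
    honest clients with non-IID data usually diverge.
    """
    unit = updates / (np.linalg.norm(updates, axis=1, keepdims=True) + 1e-12)
    similarity = unit @ unit.T
    np.fill_diagonal(similarity, -1.0)   # ignore each client's similarity to itself
    return np.flatnonzero(similarity.max(axis=1) > threshold)


updates = np.array([
    [1.0, 0.0, 0.0],     # honest
    [0.0, 1.0, 0.0],     # honest
    [0.2, 0.3, 0.9],     # honest
    [1.0, 1.0, 1.0],     # Sybil
    [1.01, 1.0, 0.99],   # Sybil, nearly identical direction
])
print(flag_similar_clients(updates))  # [3 4]
```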

Free-rider attacks involve clients that participate in training but contribute little or nothing—e.g., sending stale models or dummy gradients—while still downloading and benefiting from the global model. This undermines fairness and wastes resources, especially on networks where honest clients use real bandwidth and energy.[3] Mitigation strategies include contribution-aware aggregation and client auditing.
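A very crude audit for the stale-model case can be sketched as follows. The server keeps the global models it recently distributed. It flags any client whose returned model matches one of them within a tolerance, which means no local training took place. The tolerance and the function name are assumptions. This check would not catch free riders that submit random or fabricated updates.

```python
import numpy as np


def flag_free_riders(client_models, reference_models, tol=1e-6):
    """Return indices of clients whose model equals a recently distributed global model.

    reference_models: the current and recent global models sent by the server.
    Echoing one of them back means the client did no local training.
    """
    flagged = []
    for i, model in enumerate(client_models):
        if any(np.linalg.norm(model - ref) < tol for ref in reference_models):
            flagged.append(i)
    return flagged


previous_global = np.zeros(3)
current_global = np.array([0.1, 0.2, 0.3])
client_models = [
    current_global + np.array([0.05, -0.02, 0.01]),  # trained locally
    current_global.copy(),                           # returned unchanged
    previous_global.copy(),                          # stale model from an earlier round
]
print(flag_free_riders(client_models, [current_global, previous_global]))  # [1, 2]
```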

5.5.5 Malicious Server Attacks

In classical centralized FL, the server acts as coordinator—receiving updates and distributing models. However, if compromised, the server becomes a major threat. It can perform inference attacks, drop honest client updates, tamper with model weights, or inject adversarial logic. This poses significant risk in domains like healthcare and autonomous systems.[1][3]

Secure Aggregation protects client updates by masking them so that only aggregate values are visible to the server. Homomorphic Encryption allows computation on encrypted data, while SMPC enables privacy-preserving joint computation. However, all three approaches involve high computational or communication costs.[4] Decentralized or hierarchical architectures reduce single-point-of-failure risk, but introduce new challenges around trust coordination and efficiency.[2][4]

5.6 Resource-Efficient Model Training

In Federated Learning (FL), especially within edge computing environments, resource-efficient model training is crucial due to the inherent limitations of edge devices, such as constrained computational power, limited memory, and restricted communication bandwidth. Addressing these challenges involves implementing strategies that optimize the use of local resources while maintaining the integrity and performance of the global model. Key approaches include model compression techniques, efficient communication protocols, and adaptive client selection methods.

5.6.1 Model Compression Techniques

Model compression techniques are essential for reducing the computational and storage demands of FL models on edge devices. By decreasing the model size, these techniques facilitate more efficient local training and minimize the communication overhead during model updates. Common methods include:

- Pruning: This technique involves removing less significant weights or neurons from the neural network, resulting in a sparser model that requires less computation and storage. For instance, a study proposed a framework where the global model is pruned on a powerful server before being distributed to clients, effectively reducing the computational load on resource-constrained edge devices.[1]

- Quantization: This method reduces the precision of the model's weights, such as converting 32-bit floating-point numbers to 8-bit integers, thereby decreasing the model size and accelerating computations. However, careful implementation is necessary to balance the trade-off between model size reduction and potential accuracy loss.

- Knowledge Distillation: In this approach, a large, complex model (teacher) is used to train a smaller, simpler model (student). Typically the student is trained to match the teacher's softened output probabilities, which lets it achieve comparable performance with reduced resource requirements. This technique has been effectively applied in FL to accommodate the constraints of edge devices.[2]
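The three techniques can be sketched in NumPy, assuming a single weight tensor or a batch of logits:

- Magnitude pruning zeroes the smallest weights.
- Symmetric per-tensor int8 quantization stores one float scale plus 8-bit integers.
- The distillation loss compares temperature-softened teacher and student outputs.

All function names are hypothetical. Real frameworks typically prune and quantize per layer or per channel.

```python
import numpy as np


def magnitude_prune(weights, sparsity):
    """Zero the smallest-magnitude `sparsity` fraction of weights (ties at the cutoff are pruned too)."""
    k = int(sparsity * weights.size)
    if k == 0:
        return weights.copy()
    cutoff = np.partition(np.abs(weights).ravel(), k - 1)[k - 1]
    return np.where(np.abs(weights) > cutoff, weights, 0.0)


def quantize_int8(weights):
    """Symmetric per-tensor int8 quantization: int8 values plus one float scale."""
    max_abs = float(np.max(np.abs(weights)))
    scale = max_abs / 127.0 if max_abs > 0 else 1.0
    q = np.clip(np.round(weights / scale), -127, 127).astype(np.int8)
    return q, scale


def dequantize_int8(q, scale):
    return q.astype(np.float32) * scale


def softmax(logits, temperature=1.0):
    z = logits / temperature
    z = z - z.max(axis=-1, keepdims=True)   # numerical stability
    e = np.exp(z)
    return e / e.sum(axis=-1, keepdims=True)


def distillation_loss(student_logits, teacher_logits, temperature=2.0):
    """KL(teacher || student) on softened outputs, scaled by T^2 (a common convention)."""
    p_t = softmax(teacher_logits, temperature)
    p_s = softmax(student_logits, temperature)
    kl = np.sum(p_t * (np.log(p_t + 1e-12) - np.log(p_s + 1e-12)), axis=-1)
    return temperature ** 2 * kl.mean()


w = np.array([0.1, -0.5, 0.3, -0.05, 0.8, 0.02])
print(magnitude_prune(w, 0.5))        # keeps -0.5, 0.3, 0.8; zeros the rest
q, scale = quantize_int8(w)
print(q, dequantize_int8(q, scale))   # int8 codes and their approximate float values
```

Quantization shrinks each weight from 4 bytes to 1. The rounding error per weight is at most half of `scale`.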

5.6.2 Efficient Communication Protocols

Efficient communication protocols are vital for mitigating the communication bottleneck in FL, as frequent transmission of model updates between clients and the central server can overwhelm limited network resources. Strategies to enhance communication efficiency include:

- Update Sparsification: This technique transmits only the most significant entries of each update, for example the k largest-magnitude gradient values together with their indices. This reduces the amount of data sent during each communication round. By focusing on the most impactful changes, update sparsification decreases communication overhead, often without substantially affecting model performance.

- Compression Algorithms: Applying data compression methods to model updates before transmission can significantly reduce the data size. For example, using techniques like Huffman coding or run-length encoding can compress the updates, leading to more efficient communication.[3]

- Adaptive Communication Frequency: Adjusting the frequency of communications based on the training progress or model convergence can help in conserving bandwidth. For instance, clients may perform multiple local training iterations before sending updates to the server, thereby reducing the number of communication rounds required.
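Top-k sparsification and its server-side reconstruction can be sketched as follows. The sketch assumes updates are NumPy arrays and that the client sends index/value pairs. In practice, the entries dropped in one round are often accumulated locally and added to the next round's update (error feedback). That step is omitted here. The function names are hypothetical.

```python
import numpy as np


def topk_sparsify(update, k):
    """Keep the k largest-magnitude entries; return (indices, values) to transmit."""
    flat = update.ravel()
    idx = np.argpartition(np.abs(flat), -k)[-k:]
    return idx, flat[idx]


def densify(idx, values, shape):
    """Server side: rebuild a dense update, with zeros for entries not sent."""
    dense = np.zeros(int(np.prod(shape)), dtype=values.dtype)
    dense[idx] = values
    return dense.reshape(shape)


update = np.array([[0.01, -0.9, 0.05], [0.4, -0.02, 0.3]])
idx, vals = topk_sparsify(update, k=2)
print(densify(idx, vals, update.shape))  # only -0.9 and 0.4 survive
```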

5.6.3 Adaptive Client Selection Methods

Adaptive client selection methods focus on optimizing the selection of participating clients during each training round to enhance resource utilization and overall model performance. Approaches include:

- Resource-Aware Selection: Prioritizing clients with higher computational capabilities and better network connectivity can lead to more efficient training processes. By assessing the resource availability of clients, the FL system can make informed decisions on which clients to involve in each round.[4]

- Clustered Federated Learning: Grouping clients based on similarities in data distribution or system characteristics allows for more efficient training. Clients within the same cluster can collaboratively train a sub-model, which is then aggregated to form the global model, reducing the overall communication and computation burden.[5]

- Early Stopping Strategies: Implementing mechanisms to terminate training early when certain criteria are met can conserve resources. For example, if a client's local model reaches a predefined accuracy threshold, it can stop training and send the update to the server, thereby saving computational resources.[6]
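A resource-aware selection rule might look like the sketch below. Each client reports hypothetical compute, bandwidth, and battery figures. The server keeps the fastest eligible clients whose estimated round time fits a deadline. The cost model (compute time plus upload time) and every parameter value are assumptions.

```python
from dataclasses import dataclass


@dataclass
class ClientInfo:
    client_id: str
    flops: float           # hypothetical: sustained compute, FLOP/s
    uplink_mbps: float     # hypothetical: measured upload bandwidth
    battery: float         # hypothetical: battery level in [0, 1]


def select_clients(clients, k, model_mb=10.0, local_flop=50e9,
                   deadline_s=60.0, min_battery=0.3):
    """Pick up to k clients that have enough battery and can meet the round deadline.

    Estimated round time = local compute time + time to upload the update.
    """
    def estimated_time(c):
        return local_flop / c.flops + model_mb * 8 / c.uplink_mbps

    eligible = [c for c in clients
                if c.battery >= min_battery and estimated_time(c) <= deadline_s]
    eligible.sort(key=estimated_time)      # fastest first
    return [c.client_id for c in eligible[:k]]


clients = [
    ClientInfo("a", 5e9, 20.0, 0.9),    # ~14 s
    ClientInfo("b", 1e9, 2.0, 0.8),     # ~90 s, misses deadline
    ClientInfo("c", 10e9, 50.0, 0.2),   # battery too low
    ClientInfo("d", 8e9, 10.0, 0.6),    # ~14.25 s
]
print(select_clients(clients, k=2))  # ['a', 'd']
```

One design caveat: always favoring the fastest devices can bias the global model toward well-resourced clients. For this reason, practical schemes often mix in some random selection.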

Incorporating these strategies into the FL framework enables more efficient utilization of the limited resources available on edge devices. By tailoring model training processes to the specific constraints of these devices, it is possible to achieve effective and scalable FL deployments in edge computing environments.

5.7 Real-World Use Cases

5.7.1 Healthcare

Healthcare is one of the most impactful and widely studied application domains for Federated Learning (FL), particularly due to its stringent privacy requirements and highly sensitive patient data. Traditional machine learning models typically require centralizing large amounts of medical data—ranging from diagnostic images and electronic health records (EHRs) to genomic sequences—posing serious risks under regulations like HIPAA and GDPR. FL addresses this challenge by enabling multiple hospitals, clinics, or wearable devices to collaboratively train models while keeping all patient data on-site[1][3].

One notable example is the application of FL to train predictive models for disease diagnosis using MRI scans or histopathology images across multiple hospitals. In these setups, each institution trains a model locally using its patient data and only shares encrypted or aggregated model updates with a central aggregator. This approach has been successfully used in training FL-based models for COVID-19 detection, brain tumor segmentation, and diabetic retinopathy classification[1][2]. The benefit lies in improved model generalization due to access to diverse and heterogeneous datasets, without the legal and ethical complications of data sharing.

In addition to institutional collaboration, FL is also used in consumer health scenarios such as smart wearables. Devices like smartwatches and fitness trackers continuously collect user health data (e.g., heart rate, blood pressure, activity logs) that can be used to train personalized health monitoring systems. FL allows these models to be trained locally on-device, thereby reducing cloud dependency and latency while preserving individual privacy[3].

Challenges in this domain include handling non-IID data distributions across institutions, varied device capabilities, and communication constraints. Techniques like personalization layers, hierarchical FL, and secure aggregation protocols are often integrated into healthcare FL deployments to overcome these issues[4][5]. As the need for predictive healthcare analytics grows, FL is becoming foundational to building AI systems that are not only accurate but also ethically and legally compliant in multi-party medical environments.

5.7.2 Smart Cities